I. Overview

MongoDB is widely described in the industry as the NoSQL database that most resembles a relational database.

1. Use cases

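MongoDB originated in map applications, where it stored location data. Today it is mostly used for large-volume, frequently accessed big-data storage.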

2. Logical structure

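In MongoDB, databases and collections have little presence of their own. They do not need to be created in advance; the corresponding database and collection are created automatically when a document is inserted.

Unlike in MySQL, MongoDB databases and collections carry no attributes, and document data is not bound by strict data-type constraints. MongoDB is therefore a schema-less, loosely structured database that is relatively free-form to use.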

| MongoDB | MySQL |
|---|---|
| Database | Database |
| Collection | Table |
| JSON document (Document) | Row |

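Compared with MySQL, MongoDB is somewhat weaker at transaction processing and at guaranteeing data safety. This is hard to verify; the impression comes largely from the fact that most companies still keep their core business data in relational databases.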

II. MongoDB Installation and Configuration

1. MongoDB installation requirements

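1. Red Hat or CentOS 6.2 or later

2. A complete set of system development packages

3. A working IP address configuration and hosts-file resolution

At minimum, the host must resolve its own IP address. Otherwise, setting up the MongoDB environment will run into problems.

4. The iptables firewall and SELinux disabled

5. Transparent huge pages (THP) disabled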

Disable transparent huge pages (run the following as root).

Permanent (takes effect after a reboot; on CentOS 7, /etc/rc.d/rc.local must also be executable):

vim /etc/rc.local

if test -f /sys/kernel/mm/transparent_hugepage/enabled; then
  echo never > /sys/kernel/mm/transparent_hugepage/enabled
fi
if test -f /sys/kernel/mm/transparent_hugepage/defrag; then
  echo never > /sys/kernel/mm/transparent_hugepage/defrag
fi

Temporary (takes effect immediately, lost after a reboot):

echo never > /sys/kernel/mm/transparent_hugepage/enabled
echo never > /sys/kernel/mm/transparent_hugepage/defrag

Check the current THP status:

cat /sys/kernel/mm/transparent_hugepage/enabled
cat /sys/kernel/mm/transparent_hugepage/defrag

For other operating systems, follow the official MongoDB documentation on disabling transparent huge pages.

Use the same method to disable THP on hosts that run Redis.
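Both status files list every available mode and mark the active one with square brackets, for example `always madvise [never]` in the transcript below. The following Node.js sketch checks that output, for instance inside a monitoring script that has already read both files. The helper names `activeThpMode` and `thpDisabled` are hypothetical and not part of any MongoDB tool.

```javascript
// Sketch: parse the content of /sys/kernel/mm/transparent_hugepage/{enabled,defrag}.
// The kernel marks the active mode with square brackets, e.g. "always madvise [never]".
function activeThpMode(statusLine) {
  const match = /\[([^\]]+)\]/.exec(statusLine);
  return match ? match[1] : null; // null when no bracketed (active) mode is present
}

// True only when both files report [never], i.e. THP is fully disabled.
function thpDisabled(enabledLine, defragLine) {
  return activeThpMode(enabledLine) === "never" &&
         activeThpMode(defragLine) === "never";
}

// Usage with the output shown in the transcript:
console.log(thpDisabled("always madvise [never]", "always madvise [never]")); // true
```

The regular expression does not assume a fixed list of modes, so it also works on kernels whose defrag file offers extra options such as `defer`.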

[root@db01 /tmp]# cd /etc/rc.d/

[root@db01 /etc/rc.d]# chmod +x rc.local

[root@db01 /etc/rc.d]# tail -6 rc.local

if test -f /sys/kernel/mm/transparent_hugepage/enabled;then

echo never > /sys/kernel/mm/transparent_hugepage/enabled

fi

if test -f /sys/kernel/mm/transparent_hugepage/defrag;then

echo never > /sys/kernel/mm/transparent_hugepage/defrag

fi

[root@db01 /etc/rc.d]# echo never > /sys/kernel/mm/transparent_hugepage/enabled

[root@db01 /etc/rc.d]# echo never > /sys/kernel/mm/transparent_hugepage/defrag

[root@db01 /etc/rc.d]# cat /sys/kernel/mm/transparent_hugepage/enabled

always madvise [never]

[root@db01 /etc/rc.d]# cat /sys/kernel/mm/transparent_hugepage/defrag

always madvise [never]

2. MongoDB installation

step 0 Download the package and upload it to the server

step 1 Create a system user to manage MongoDB

MongoDB officially recommends managing and running MongoDB as a non-root user.

useradd mongod
echo "<password>" | passwd --stdin mongod

[root@db01 /etc/rc.d]# useradd mongod

[root@db01 /etc/rc.d]# echo "mongod" |passwd --stdin mongod

Changing password for user mongod.

passwd: all authentication tokens updated successfully.

[root@db01 /etc/rc.d]# id mongod

uid=1001(mongod) gid=1001(mongod) groups=1001(mongod)

step 2 Extract the package and create a symbolic link

tar xf <package> -C /<install-dir>
ln -s /<install-dir> /<dir>/mongodb
chown -R mongod.mongod /<install-dir>

[root@db01 /etc/rc.d]# mkdir /application && cd /application

[root@db01 /application]# tar xf /tmp/mongodb-linux-x86_64-rhel70-3.6.23.tgz -C .

[root@db01 /application]# ln -s mongodb-linux-x86_64-rhel70-3.6.23 mongodb

[root@db01 /application]# chown -R mongod. mongodb-linux-x86_64-rhel70-3.6.23

[root@db01 /application]# ll

total 0

lrwxrwxrwx 1 root root 34 Dec 8 10:39 mongodb -> mongodb-linux-x86_64-rhel70-3.6.23

drwxr-xr-x 3 mongod mongod 135 Dec 8 10:39 mongodb-linux-x86_64-rhel70-3.6.23

step 3 Configure local hostname resolution

cat >> /etc/hosts << EOF
<IP address> <hostname>
EOF

[root@db01 /application]# sed -n 3p /etc/hosts

172.16.1.151 db01

step 4 Create the directories MongoDB needs and set their ownership

mkdir -p /<dir>/mongodb/{conf,data,logs}
chown -R mongod.mongod /<dir>/mongodb

[root@db01 /application]# mkdir -p /data/mongodb/{conf,data,logs}

[root@db01 /application]# chown -R mongod. /data/mongodb

[root@db01 /application]# ll -d /data/mongodb/

drwxr-xr-x 5 mongod mongod 42 Dec 8 10:43 /data/mongodb/

[root@db01 /application]# tree /data/mongodb/

/data/mongodb/

├── conf

├── data

└── logs

3 directories, 0 files

step 5 Set environment variables for the management user

su - mongod
cat >> /home/mongod/.bash_profile << 'EOF'
export PATH=/<dir>/mongodb/bin:$PATH
EOF
. /home/mongod/.bash_profile

[root@db01 /application]# su - mongod

[mongod@db01 ~]$ cat >>~/.bash_profile<<EOF

> export PATH=/application/mongodb/bin:$PATH

> EOF

[mongod@db01 ~]$ . ~/.bash_profile

step 6 Test

mongo --version

[mongod@db01 ~]$ mongo --version

MongoDB shell version v3.6.23

git version: d352e6a4764659e0d0350ce77279de3c1f243e5c

OpenSSL version: OpenSSL 1.0.1e-fips 11 Feb 2013

allocator: tcmalloc

modules: none

build environment:

distmod: rhel70

distarch: x86_64

target_arch: x86_64

3. Configuration file (YAML format)

YAML configuration files do not allow tab characters for indentation; indent with spaces.
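A tab that slips into the indentation is easy to miss in an editor but makes the YAML invalid. The Node.js sketch below lists the offending line numbers of a configuration file's text before mongod is started with it. The function name `findTabIndentedLines` is a hypothetical helper, not a MongoDB utility.

```javascript
// Sketch: report 1-based line numbers whose leading indentation contains a tab.
// Tabs elsewhere in a line (e.g. inside a value) are not flagged.
function findTabIndentedLines(yamlText) {
  const bad = [];
  yamlText.split("\n").forEach((line, i) => {
    const indent = line.match(/^[ \t]*/)[0]; // leading whitespace only
    if (indent.includes("\t")) bad.push(i + 1);
  });
  return bad;
}

const sample = "systemLog:\n  destination: file\n\tpath: /tmp/x.log\n";
console.log(findTabIndentedLines(sample)); // [ 3 ]
```

In practice, the text could be read with `fs.readFileSync(path, "utf8")` before the check. That call is only a suggestion here, and file access is left out of the sketch.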

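#System log section
systemLog:
  destination: file
  path: "/<dir>/<file>"           #log file location
  logAppend: true                 #append to the existing log on restart instead of starting a new file
#Data storage section
storage:
  journal:
    enabled: true
  dbPath: "/<dir>/"               #data directory
#Process management section
processManagement:
  fork: true                      #run as a background daemon
  pidFilePath: "/<dir>/<file>"    #PID file location (no PID file is written if this is omitted)
#Network section
net:
  bindIp: <IP address>            #listen address(es)
  port: <port>                    #listen port (default 27017)
#Security section
security:
  authorization: enabled          #enable user authentication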

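From MongoDB 3.6 on, mongod listens only on localhost unless bindIp is set, so remote access has to be enabled explicitly and should only be opened together with authentication. Be sure to create users first (MongoDB has no default user), and only then add the security section to the configuration file.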

[mongod@db01 ~]$ cd /data/mongodb/conf/

[mongod@db01 /data/mongodb/conf]$ cat mongodb.conf

systemLog:
  destination: file
  path: "/data/mongodb/logs/mongodb.log"
  logAppend: true
storage:
  journal:
    enabled: true
  dbPath: "/data/mongodb/data/"
processManagement:
  fork: true
  pidFilePath: "/data/mongodb/conf/mongodb.pid"
net:
  bindIp: 10.0.0.151,127.0.0.1
  port: 27017
security:
  authorization: enabled

After modifying the configuration file, restart MongoDB for the changes to take effect.

4. Starting and stopping a MongoDB instance

mongod -f <config file>              #start MongoDB with the given configuration file
mongod -f <config file> --shutdown   #shut down that MongoDB instance

[mongod@db01 /data/mongodb/conf]$ mongod -f mongodb.conf

about to fork child process, waiting until server is ready for connections.

forked process: 7500

child process started successfully, parent exiting

[mongod@db01 /data/mongodb/conf]$ mongod -f mongodb.conf --shutdown

killing process with pid: 7500

5. Managing MongoDB with systemd

Do not mix systemd with other ways of managing MongoDB. For example, if MongoDB was started manually from the command line, restarting it with systemd will fail.

cat > /etc/systemd/system/mongod.service << 'EOF'
[Unit]
Description=mongodb
After=network.target remote-fs.target nss-lookup.target
[Service]
User=mongod
Type=forking
ExecStart=/<dir>/bin/mongod --config <config file>
ExecReload=/bin/kill -s HUP $MAINPID
ExecStop=/<dir>/bin/mongod --config <config file> --shutdown
PrivateTmp=true
[Install]
WantedBy=multi-user.target
EOF

The systemd unit must be created and controlled as root.

[root@db01 /application]# cat /etc/systemd/system/mongod.service

[Unit]
Description=mongodb
After=network.target remote-fs.target nss-lookup.target
[Service]
User=mongod
Type=forking
ExecStart=/application/mongodb/bin/mongod --config /data/mongodb/conf/mongodb.conf
ExecReload=/bin/kill -s HUP $MAINPID
ExecStop=/application/mongodb/bin/mongod --config /data/mongodb/conf/mongodb.conf --shutdown
PrivateTmp=true
[Install]
WantedBy=multi-user.target

[root@db01 /application]# netstat -lntup | grep [m]ongod

[root@db01 /application]# systemctl start mongod.service

[root@db01 /application]# netstat -lntup | grep [m]ongod

tcp 0 0 10.0.0.151:27017 0.0.0.0:* LISTEN 8163/mongod

[root@db01 /application]# systemctl stop mongod.service

[root@db01 /application]# netstat -lntup | grep [m]ongod

III. MongoDB Management

1. Basic management

Connecting to the database

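When logging in, you must explicitly specify the authentication database of the account; otherwise the login fails.

mongo -u <username> -p <password> <IP address>:<port>/<auth database>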
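Drivers usually take the same information as a `mongodb://` connection string, where the `authSource` option names the authentication database. The Node.js sketch below builds such a string from the same pieces as the command above. `buildMongoUri` is a hypothetical helper. Credentials are percent-encoded because characters such as `@` or `:` would otherwise break the URI.

```javascript
// Sketch: build a connection string equivalent to
//   mongo -u <username> -p <password> <IP address>:<port>/<auth database>
function buildMongoUri({ user, password, host, port = 27017, authDb = "admin", db = authDb }) {
  const cred = `${encodeURIComponent(user)}:${encodeURIComponent(password)}@`;
  return `mongodb://${cred}${host}:${port}/${db}?authSource=${encodeURIComponent(authDb)}`;
}

console.log(buildMongoUri({ user: "root", password: "123456", host: "127.0.0.1" }));
// mongodb://root:123456@127.0.0.1:27017/admin?authSource=admin
```

Putting a plain-text password in a URI or on the command line exposes it in shell history and process lists, so this is for illustration only.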

[mongod@db01 ~]$ mongo -u root -p 123456 127.0.0.1:27017/admin

MongoDB shell version v3.6.23

connecting to: mongodb://127.0.0.1:27017/admin?gssapiServiceName=mongodb

Implicit session: session { "id" : UUID("70da1c21-c32a-4d3f-9504-815167cba3ef") }

MongoDB server version: 3.6.23

Server has startup warnings:

2021-12-09T10:57:07.639+0800 I CONTROL [initandlisten]

2021-12-09T10:57:07.639+0800 I CONTROL [initandlisten] ** WARNING: soft rlimits too low. rlimits set to 4096 processes, 65535 files. Number of processes should be at least 32767.5 : 0.5 times number of files.

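>

By default, MongoDB has three system databases: admin, config and local. In addition, the shell connects to the test database by default after login; test does not appear in show dbs until it contains data.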

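| Database | Purpose |
|---|---|
| admin | Reserved system database used for MongoDB administration |
| local | Reserved local database; stores critical logs such as the replication oplog |
| config | Stores MongoDB configuration information |
| test | Database the shell enters by default after login |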

2. Database management

Listing databases

show databases;
show dbs;

> show databases;

admin 0.000GB

config 0.000GB

local 0.000GB

> show dbs;

admin 0.000GB

config 0.000GB

local 0.000GB

Switching databases

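If the database does not exist, a temporary database with that name is created automatically. It becomes persistent once a collection or JSON document is stored in it.

use <database>;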

> use test

switched to db test

> db

test

> use admin;

switched to db admin

Showing the current database

db;

> db

admin

Listing collections

show tables;
show collections;

> show tables;

system.version

> show collections

system.version

3. Object management

Database management commands (Database)

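db.[TAB][TAB]                      // list the methods available on db
db.help()
db.<collection>.[TAB][TAB]         // list the methods available on a collection
db.<collection>.help()
DBQuery.shellBatchSize             // number of documents displayed per page
DBQuery.shellBatchSize=<number>    // set documents per page (results are paginated, 20 per page by default)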

- Database operations

use <database>       // switch to the database
db.dropDatabase()    // drop the current database

> use young

switched to db young

> db.dropDatabase()

{ "ok" : 1 }

- Collection operations

db.createCollection('<collection>')   // create a collection

> use young

switched to db young

> db.createCollection('test01')

{ "ok" : 1 }

> db.createCollection('test02')

{ "ok" : 1 }

> show collections

test01

test02

> show dbs;

admin 0.000GB

config 0.000GB

local 0.000GB

young 0.000GB

- Document operations (CRUD)

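db.<collection>.insert({key1:value, key2:value...})   // insert a document
for(<init>;<condition>;<step>){db.<collection>.insert({"key1":value,"key2":"value"...})}   // batch insert
db.<collection>.count()                      // number of documents in the collection
db.<collection>.find()                       // query the whole collection
db.<collection>.find({key:value})            // conditional query
db.<collection>.find({key:value}).pretty()   // show results as formatted JSON
db.<collection>.remove({})                   // delete all JSON documents in the collection
db.<collection>.totalSize()                  // storage used by the collection (data + indexes, after compression)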
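The batch-insert loop in the list above sends one insert() per document. An alternative is to build all documents first and write them with a single insertMany() call, which exists in the mongo shell from 3.2. The sketch below only builds the array, so it runs in Node.js without a server. `buildEventDocs` is a hypothetical helper that reuses the field names from the transcript, including `instansID` as spelled there.

```javascript
// Sketch: build the documents produced by the for-loop example as one array.
function buildEventDocs(n, instanceId = 27020) {
  const docs = [];
  for (let i = 0; i < n; i++) {
    docs.push({ id: i, event: "mongodb", instansID: instanceId, date: new Date() });
  }
  return docs;
}

// In the mongo shell, after defining the function there (assumption: not tested here):
//   db.test.insertMany(buildEventDocs(10000))
const docs = buildEventDocs(3);
console.log(docs.length, docs[2].id); // 3 2
```

A single insertMany() call reduces client/server round trips compared with 10000 separate insert() calls. Very large arrays are still split into batches by the shell or driver.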

> db.stu.insert({id:4924,name:"young",gender:"m",comments:"none"})

WriteResult({ "nInserted" : 1 })

> show collections;

stu

test01

test02

> db.stu.find()

{ "_id" : ObjectId("61b0680bc79da7b762639358"), "id" : 4924, "name" : "young", "gender" : "m", "comments" : "none" }

> db.stu.find().pretty()

{

"_id" : ObjectId("61b0680bc79da7b762639358"), #auto-generated field (acts as the primary key and always has a unique index)

"id" : 4924,

"name" : "young",

"gender" : "m",

"comments" : "none"

}

> for(i=0;i<10000;i++){db.test.insert({"id":i,"event":"mongodb","instansID":27020,"date":new Date()})}

WriteResult({ "nInserted" : 1 })

> db.test.count();

10000

> db.test.find({id:2449}).pretty()

{

"_id" : ObjectId("61b069bbc79da7b762639cea"),

"id" : 2449,

"event" : "mongodb",

"instansID" : 27020,

"date" : ISODate("2021-12-08T08:15:55.664Z")

}

> db.test.find({"id":{$lt:7,$gt:4}})

{ "_id" : ObjectId("61b069bbc79da7b76263935e"), "id" : 5, "event" : "mongodb", "instansID" : 27020, "date" : ISODate("2021-12-08T08:15:55.137Z") }

{ "_id" : ObjectId("61b069bbc79da7b76263935f"), "id" : 6, "event" : "mongodb", "instansID" : 27020, "date" : ISODate("2021-12-08T08:15:55.138Z") }

> db.stu.remove({})

WriteResult({ "nRemoved" : 1 })

> db.test.totalSize()

372736

> DBQuery.shellBatchSize

20

> DBQuery.shellBatchSize=50

50

Replica set management commands (Replication set)

rs.[TAB][TAB]
rs.help()

Sharded cluster management commands (Sharding Cluster)

sh.[TAB][TAB]
sh.help()

4. User management

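The database you are in when you create a user becomes that user's authentication database. When logging in as the new user, you must specify that authentication database.

User management rules:

1. Administrator accounts must be created in the admin database;
2. The database in which the user-creation command is run becomes that user's authentication database;
3. Administrators usually use admin as their authentication database, while ordinary users usually use the database they manage;
4. In practice, avoid using the test database as an authentication database;
5. From MongoDB 3.6 on, if bindIp is not specified, remote login is not allowed by default and only local connections (for example a local administrator) can log in.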

Creating a user

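use <database>
db.createUser( {
  user: "<username>",               // user name
  pwd: "<plain-text password>",     // login password
  roles: [
    { role: "<role>",                // user role, e.g. root / readWrite / read
      db: "<database>" },
    { role: "<role>",
      db: "<database>" },
    "<role>",                        // a bare role name applies to the current database
    ...
  ]
} )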

For access control to be enforced, authorization must be enabled (security.authorization) in the configuration file.

> use admin

switched to db admin

> db.createUser(

... {

... user: "root",

... pwd: "123456",

... roles: [

... { role: "root",

... db: "admin" }

... ]

... }

... )

Successfully added user: {

"user" : "root",

"roles" : [

{

"role" : "root",

"db" : "admin"

}

]

}

> use young;

switched to db young

> db.createUser(

... { user: "mongod", pwd: "mongod", roles: [{ role: "readWrite", db: "young" }, {role: "readWrite", db: "test"}] }

... )

Successfully added user: {

"user" : "mongod",

"roles" : [

{

"role" : "readWrite",

"db" : "young"

},

{

"role" : "readWrite",

"db" : "test"

}

]

}

Verifying a user

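db.auth('<username>','<password>')

A return value of 1 means authentication succeeded; 0 means it failed. Run db.auth() in the user's authentication database, otherwise it fails even with the correct password.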
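The transcript below shows root authenticating only in admin and mongod only in young. This happens because a user is identified by its authentication database together with its name: the `_id` values in `admin.system.users`, shown later in this section, are `admin.root` and `young.mongod`. Here is a toy Node.js model of that rule. `userKey` and `canAuthenticate` are hypothetical, and passwords are stored in plain text purely for illustration; MongoDB really stores SCRAM credentials.

```javascript
// Toy model (not MongoDB's implementation): users are keyed by "<auth db>.<user>".
function userKey(authDb, user) {
  return `${authDb}.${user}`;
}

// Mimics db.auth(): 1 on success, 0 on failure, looking up the user in currentDb only.
function canAuthenticate(users, currentDb, user, password) {
  const u = users.get(userKey(currentDb, user));
  return u !== undefined && u.password === password ? 1 : 0;
}

const users = new Map([
  [userKey("admin", "root"), { password: "123456" }],
  [userKey("young", "mongod"), { password: "mongod" }],
]);
console.log(canAuthenticate(users, "admin", "root", "123456")); // 1
console.log(canAuthenticate(users, "young", "root", "123456")); // 0
```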

> use admin

switched to db admin

> db.auth("root","123456")

1

> db.auth("mongod","mongod")

Error: Authentication failed.

0

> use young

switched to db young

> db.auth("root","123456")

Error: Authentication failed.

0

> db.auth("mongod","mongod")

1

Viewing users

use admin
db.system.users.find({}).pretty()

> use admin

switched to db admin

> show tables;

system.users

system.version

> db.system.users.find().pretty()

{

"_id" : "admin.root",

"userId" : UUID("f4044887-9f93-4931-88eb-68ee4ba8d4c3"),

"user" : "root",

"db" : "admin",

......

}

{

"_id" : "young.mongod",

"userId" : UUID("18ca6e1c-3353-44c3-8a63-85ffce16cc60"),

"user" : "mongod",

"db" : "young",

......

}

Deleting a user

use <auth database>
db.dropUser("<username>")

> db

admin

> db.dropUser("young")

false

> use young

switched to db young

> db.auth("young","young")

1

> ^C

bye

> use young

switched to db young

> db.dropUser("young")

true

> db.auth("young","young")

Error: Authentication failed.

0

IV. MongoDB Replica Set (Replication Set)

1. Basic principles

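The basic layout of a MongoDB replica set is one primary and two secondaries, with a built-in distributed consistency protocol (Raft-based). If the primary goes down, the members hold an election and choose a new primary to take over the service. Client drivers connected to the replica set detect the switch and automatically connect to the new primary.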

MySQL MGR uses Paxos as its distributed consistency protocol.

Features

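- No middleware required: replica sets are built in;

- Built-in distributed consistency protocol: members monitor each other and use voting to keep data consistent;

- By default, secondaries accept neither reads nor writes;

- The primary is not fixed: it is chosen by election and fails over automatically;

- Cascading replication cannot be configured by hand as in MySQL; each secondary chooses its sync source automatically (syncing through other secondaries is governed by settings.chainingAllowed).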
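Elections are why the basic layout uses three voting members. A primary can only be elected, or stay primary, while a strict majority of voting members can reach each other. The Node.js arithmetic sketch below (hypothetical helper names) shows that three members survive one failure, while adding a fourth member does not raise that tolerance.

```javascript
// Sketch: strict majority of voting members needed to elect/keep a primary.
function majority(votingMembers) {
  return Math.floor(votingMembers / 2) + 1;
}

// Simplification: assumes at least one electable data-bearing member is among the survivors.
function canElectPrimary(votingMembers, membersDown) {
  return votingMembers - membersDown >= majority(votingMembers);
}

console.log(majority(3), canElectPrimary(3, 1), canElectPrimary(3, 2)); // 2 true false
console.log(majority(4), canElectPrimary(4, 2)); // 3 false
```

An arbiter counts as a voting member in this arithmetic even though it stores no data. That is why a two-data-node set plus an arbiter can still fail over.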

2. Deploying a replica set

In a single-host multi-instance environment, the combined memory use of all instances should not exceed 80% of physical memory.
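The 80% guideline can be checked against the planned cacheSizeGB values before starting the instances. In the Node.js sketch below, `withinMemoryBudget` is hypothetical and the 8 GB host is an assumed example. Only WiredTiger cache sizes are summed, and a real mongod uses more memory than its cache (connections, index builds and so on), so treat the result as a lower bound.

```javascript
// Sketch: compare the summed WiredTiger cache sizes with ratio * physical memory.
function withinMemoryBudget(cacheSizesGB, physicalGB, ratio = 0.8) {
  const total = cacheSizesGB.reduce((sum, gb) => sum + gb, 0);
  const limit = physicalGB * ratio;
  return { total, limit, ok: total <= limit };
}

// Four instances with cacheSizeGB: 1 (as in the configs below) on an assumed 8 GB host:
console.log(withinMemoryBudget([1, 1, 1, 1], 8)); // { total: 4, limit: 6.4, ok: true }
```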

step 0 Preparation

[mongod@db01 ~]$ mkdir -p /data/mongodb/{30017,30018,30019,30020}/{data,conf,logs}

[mongod@db01 ~]$ tree /data/mongodb/

/data/mongodb/

├── 30017

│ ├── conf

│ ├── data

│ └── logs

├── 30018

│ ├── conf

│ ├── data

│ └── logs

├── 30019

│ ├── conf

│ ├── data

│ └── logs

└── 30020

├── conf

├── data

└── logs

16 directories, 0 files

[mongod@db01 ~]$ cd /data/mongodb/

[mongod@db01 /data/mongodb]$

step 1 Prepare the configuration files

systemLog:
  destination: file
  path: "/<dir>/<file>"
  logAppend: true
storage:
  journal:
    enabled: true
  dbPath: "/<dir>/"
  directoryPerDB: true
  #engine: wiredTiger
  wiredTiger:                     #WiredTiger (wT) is the default engine since MongoDB 3.2
    engineConfig:
      cacheSizeGB: n              #storage engine cache of n GB (default: the larger of 50% of (RAM - 1 GB) or 256 MB)
      directoryForIndexes: true
    collectionConfig:
      blockCompressor: zlib       #enable data compression
    indexConfig:
      prefixCompression: true
processManagement:
  fork: true
  pidFilePath: "/<dir>/<file>"
net:
  bindIp: <IP address>
  port: <port>
replication:
  oplogSizeMB: m                  #size of the operation log (oplog, comparable to MySQL's binary log) in MB
  replSetName: <replica set name> #name of the replica set

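WiredTiger resembles InnoDB in some respects, such as document-level locking (analogous to row-level locking) and transactional storage. MongoDB itself only exposes multi-document transactions from version 4.0.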

#Instance 1

systemLog:
  destination: file
  path: "/data/mongodb/30017/logs/mongodb.log"
  logAppend: true
storage:
  journal:
    enabled: true
  dbPath: "/data/mongodb/30017/data/"
  directoryPerDB: true
  engine: wiredTiger
  wiredTiger:
    engineConfig:
      cacheSizeGB: 1
      directoryForIndexes: true
    collectionConfig:
      blockCompressor: zlib
    indexConfig:
      prefixCompression: true
processManagement:
  fork: true
  pidFilePath: /data/mongodb/30017/conf/mongodb.pid
net:
  port: 30017
  bindIp: 10.0.0.151,127.0.0.1
replication:
  oplogSizeMB: 2048
  replSetName: young_repl

#Instance 2

[mongod@db01 /data/mongodb]$ \cp -rp 30017/conf/mongodb.conf 30018/conf/

[mongod@db01 /data/mongodb]$ sed -i s#30017#30018#g 30018/conf/mongodb.conf

#Instance 3

[mongod@db01 /data/mongodb]$ \cp -rp 30017/conf/mongodb.conf 30019/conf/

[mongod@db01 /data/mongodb]$ sed -i s#30017#30019#g 30019/conf/mongodb.conf

#Instance 4

[mongod@db01 /data/mongodb]$ \cp -rp 30017/conf/mongodb.conf 30020/conf/

[mongod@db01 /data/mongodb]$ sed -i s#30017#30020#g 30020/conf/mongodb.conf

step 2 Start the instances

mongod -f <config file>

[mongod@db01 /data/mongodb]$ for i in {30017..30020};do mongod -f $i/conf/mongodb.conf;done

about to fork child process, waiting until server is ready for connections.

forked process: 7830

child process started successfully, parent exiting

about to fork child process, waiting until server is ready for connections.

forked process: 7863

child process started successfully, parent exiting

about to fork child process, waiting until server is ready for connections.

forked process: 7892

child process started successfully, parent exiting

about to fork child process, waiting until server is ready for connections.

forked process: 7921

child process started successfully, parent exiting

[mongod@db01 /data/mongodb]$ netstat -lntup | grep 300

(Not all processes could be identified, non-owned process info

will not be shown, you would have to be root to see it all.)

tcp 0 0 127.0.0.1:30017 0.0.0.0:* LISTEN 7830/mongod

tcp 0 0 10.0.0.151:30017 0.0.0.0:* LISTEN 7830/mongod

tcp 0 0 127.0.0.1:30018 0.0.0.0:* LISTEN 7863/mongod

tcp 0 0 10.0.0.151:30018 0.0.0.0:* LISTEN 7863/mongod

tcp 0 0 127.0.0.1:30019 0.0.0.0:* LISTEN 7892/mongod

tcp 0 0 10.0.0.151:30019 0.0.0.0:* LISTEN 7892/mongod

tcp 0 0 127.0.0.1:30020 0.0.0.0:* LISTEN 7921/mongod

tcp 0 0 10.0.0.151:30020 0.0.0.0:* LISTEN 7921/mongod

step 3 Build a basic primary-secondary replica set

mongo --port <port> admin
config = {_id: '<replica set name>', members: [
  {_id: 0, host: '<instance IP>:<port>'},
  {_id: 1, host: '<instance IP>:<port>'},
  {_id: 2, host: '<instance IP>:<port>'},
  ......
  {_id: n, host: '<instance IP>:<port>'}]
}
rs.initiate(config)
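The config document above follows a fixed pattern: sequential `_id` values, one `host` per member, and optionally `arbiterOnly: true`. It can therefore be generated from a host list instead of typed by hand. `buildReplSetConfig` is a hypothetical helper. It is shown running in Node.js, and it should also work when pasted into the mongo shell, though that is untested here.

```javascript
// Sketch: build the document passed to rs.initiate() from "host:port" strings.
// Hosts listed in `arbiters` are marked arbiterOnly: true.
function buildReplSetConfig(name, hosts, arbiters = []) {
  return {
    _id: name,
    members: hosts.map((host, i) => {
      const member = { _id: i, host: host };
      if (arbiters.includes(host)) member.arbiterOnly = true;
      return member;
    }),
  };
}

const config = buildReplSetConfig("young_repl",
  ["10.0.0.151:30017", "10.0.0.151:30018", "10.0.0.151:30019"]);
console.log(JSON.stringify(config));
// In the mongo shell the object would then be passed on: rs.initiate(config)
```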

[mongod@db01 /data/mongodb]$ mongo --port 30017 admin

MongoDB shell version v3.6.23

connecting to: mongodb://127.0.0.1:30017/admin?gssapiServiceName=mongodb

......

> config = {_id: 'young_repl', members:[

... {_id: 0, host: '10.0.0.151:30017'},

... {_id: 1, host: '10.0.0.151:30018'},

... {_id: 2, host: '10.0.0.151:30019'}, ]

... }

{

"_id" : "young_repl",

"members" : [

{

"_id" : 0,

"host" : "10.0.0.151:30017"

},

{

"_id" : 1,

"host" : "10.0.0.151:30018"

},

{

"_id" : 2,

"host" : "10.0.0.151:30019"

}

]

}

> rs.initiate(config)

{

"ok" : 1,

"operationTime" : Timestamp(1639036960, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1639036960, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

young_repl:SECONDARY>

step 4 Check the replica set status

rs.status()

young_repl:SECONDARY> rs.status()

{

"set" : "young_repl",

"date" : ISODate("2021-12-09T08:05:36.534Z"),

"myState" : 1,

"term" : NumberLong(1),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"heartbeatIntervalMillis" : NumberLong(2000),

......

}

young_repl:PRIMARY>

Failure simulation

- Node failure

mongod -f <config file> --shutdown   #shut down the given MongoDB instance

[mongod@db01 /data/mongodb]$ mongod -f 30017/conf/mongodb.conf --shutdown

killing process with pid: 7830

[mongod@db01 /data/mongodb]$ mongo --port 30018

MongoDB shell version v3.6.23

connecting to: mongodb://127.0.0.1:30018/?gssapiServiceName=mongodb

......

young_repl:PRIMARY>

young_repl:PRIMARY> ^C

bye

- Node recovery

mongod -f <config file>   #start MongoDB with the given configuration file

[mongod@db01 /data/mongodb]$ mongo --port 30017

MongoDB shell version v3.6.23

connecting to: mongodb://127.0.0.1:30017/?gssapiServiceName=mongodb

......

young_repl:SECONDARY>

3. Special members

Arbiter

Arbiter: only votes in elections for the primary. It stores no data and serves no client requests.

Building a primary-secondary replica set with an arbiter:

mongo --port <port> admin
config = {_id: '<replica set name>', members: [
  {_id: 0, host: '<instance IP>:<port>'},
  {_id: 1, host: '<instance IP>:<port>'},
  {_id: 2, host: '<instance IP>:<port>', "arbiterOnly": true}]
}
rs.initiate(config)

Hidden member (Hidden)

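Hidden member: can never become primary (its priority must be 0) and is invisible to client applications, so it serves no client traffic. It is typically used for statistics and analytics.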

Delayed member (Delay)

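Delayed member: similar to a delayed replica in MySQL, its data lags behind the primary by a set amount of time. Because its data is not current, it should neither serve clients nor become primary, so it is usually combined with a hidden member.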

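Configure a Hidden+Delay member (<index> is the position in the members array, not the member's _id):

cfg=rs.conf();
cfg.members[<index>].priority=0;      // priority 0: the member never becomes primary
cfg.members[<index>].hidden=true;     // make it a hidden member
cfg.members[<index>].slaveDelay=m;    // make it a delayed member, m seconds behind
rs.reconfig(cfg);

To query the Hidden+Delay member, use rs.conf(). To remove the settings, restore priority, hidden and slaveDelay and run rs.reconfig(cfg) again, as in the transcript below.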
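The three assignments always go together, so they can be wrapped in one function that also guards against an index that does not exist. `makeHiddenDelayed` is a hypothetical helper, demonstrated in Node.js on a plain object shaped like the rs.conf() output in this section. In the mongo shell it would be used as `cfg = rs.conf(); makeHiddenDelayed(cfg, 2, 3600); rs.reconfig(cfg)`, which is untested here.

```javascript
// Sketch: apply Hidden+Delay settings to a replica set config object.
// `index` is the position in cfg.members, not the member's _id.
function makeHiddenDelayed(cfg, index, delaySeconds) {
  const member = cfg.members[index];
  if (member === undefined) throw new Error(`no member at index ${index}`);
  member.priority = 0;              // priority 0: can never become primary
  member.hidden = true;             // invisible to client applications
  member.slaveDelay = delaySeconds; // 3.6 field name (renamed secondaryDelaySecs in 5.0)
  return cfg;
}

const cfg = { _id: "young_repl", members: [
  { _id: 0, host: "10.0.0.151:30017", priority: 1 },
  { _id: 1, host: "10.0.0.151:30018", priority: 1 },
  { _id: 2, host: "10.0.0.151:30019", priority: 1 },
] };
makeHiddenDelayed(cfg, 2, 3600);
console.log(cfg.members[2].priority, cfg.members[2].hidden, cfg.members[2].slaveDelay); // 0 true 3600
```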

# Configure a Hidden+Delay member

young_repl:PRIMARY> cfg=rs.conf()

{

"_id" : "young_repl",

"version" : 7,

"protocolVersion" : NumberLong(1),

"members" : [

......

}

young_repl:PRIMARY> cfg.members[2].priority=0;

0

young_repl:PRIMARY> cfg.members[2].hidden=true;

true

young_repl:PRIMARY> cfg.members[2].slaveDelay=3600;

3600

young_repl:PRIMARY> rs.reconfig(cfg)

{

"ok" : 1,

"operationTime" : Timestamp(1639104760, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1639104760, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

# Query the Hidden+Delay member

young_repl:PRIMARY> rs.conf()

{

"_id" : "young_repl",

"version" : 8,

"protocolVersion" : NumberLong(1),

"members" : [

{

"_id" : 1,

"host" : "10.0.0.151:30018",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

},

{

"_id" : 2,

"host" : "10.0.0.151:30017",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

},

{

"_id" : 3,

"host" : "10.0.0.151:30019",

"arbiterOnly" : true,

"buildIndexes" : true,

"hidden" : true,

"priority" : 0,

"tags" : {

},

"slaveDelay" : NumberLong(3600),

"votes" : 1

},

{

"_id" : 4,

"host" : "10.0.0.151:30020",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

}

],

......

}

# Remove the Hidden+Delay configuration

young_repl:PRIMARY> cfg=rs.conf()

{

"_id" : "young_repl",

......

}

young_repl:PRIMARY> cfg.members[2].slaveDelay=0;

0

young_repl:PRIMARY> cfg.members[2].hidden=false;

false

young_repl:PRIMARY> cfg.members[2].priority=1;

1

young_repl:PRIMARY> rs.reconfig(cfg)

{

"ok" : 1,

"operationTime" : Timestamp(1639104950, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1639104950, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

4. Replica set management

Checking replica set status

rs.status();     // overall replica set status
rs.isMaster();   // whether the current member is the primary
rs.conf()        // replica set configuration

young_repl:SECONDARY> rs.isMaster()

{

"hosts" : [

"10.0.0.151:30017",

"10.0.0.151:30018",

"10.0.0.151:30019"

],

"setName" : "young_repl",

"setVersion" : 2,

"ismaster" : false,

"secondary" : true,

"primary" : "10.0.0.151:30018",

"me" : "10.0.0.151:30017",

......

young_repl:SECONDARY> rs.conf()

{

"_id" : "young_repl",

"version" : 2,

"protocolVersion" : NumberLong(1),

"members" : [

{

"_id" : 0,

"host" : "10.0.0.151:30017",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

},

{

"_id" : 1,

"host" : "10.0.0.151:30018",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

},

{

"_id" : 2,

"host" : "10.0.0.151:30019",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

}

],

Managing members

rs.remove("<IP address>:<port>");   // remove a member
rs.add("<IP address>:<port>");      // add a member
rs.addArb("<IP address>:<port>");   // add an arbiter

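After adding an arbiter, the corresponding instance must be (re)started. In the transcript below, the 30019 instance is no longer listening after being removed and re-added as an arbiter, so it is started again.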

young_repl:PRIMARY> rs.remove("10.0.0.151:30019")

{

"ok" : 1,

......

}

young_repl:PRIMARY> rs.remove("10.0.0.151:30017")

{

"ok" : 1,

......

}

young_repl:PRIMARY> rs.isMaster()

{

"hosts" : [

"10.0.0.151:30018"

],

......

young_repl:PRIMARY> rs.add("10.0.0.151:30017")

{

"ok" : 1,

......

}

young_repl:PRIMARY> rs.isMaster()

{

"hosts" : [

"10.0.0.151:30018",

"10.0.0.151:30017"

],

......

young_repl:PRIMARY> rs.addArb("10.0.0.151:30019")

{

"ok" : 1,

......

}

young_repl:PRIMARY> rs.isMaster()

{

"hosts" : [

"10.0.0.151:30018",

"10.0.0.151:30017"

],

"arbiters" : [

"10.0.0.151:30019"

],

......

young_repl:PRIMARY> ^C

bye

[mongod@db01 /data/mongodb]$ netstat -lntup | grep 30019

(Not all processes could be identified, non-owned process info

will not be shown, you would have to be root to see it all.)

[mongod@db01 /data/mongodb]$ mongod -f 30019/conf/mongodb.conf

about to fork child process, waiting until server is ready for connections.

forked process: 8787

child process started successfully, parent exiting

V. Deploying a MongoDB Sharding Cluster

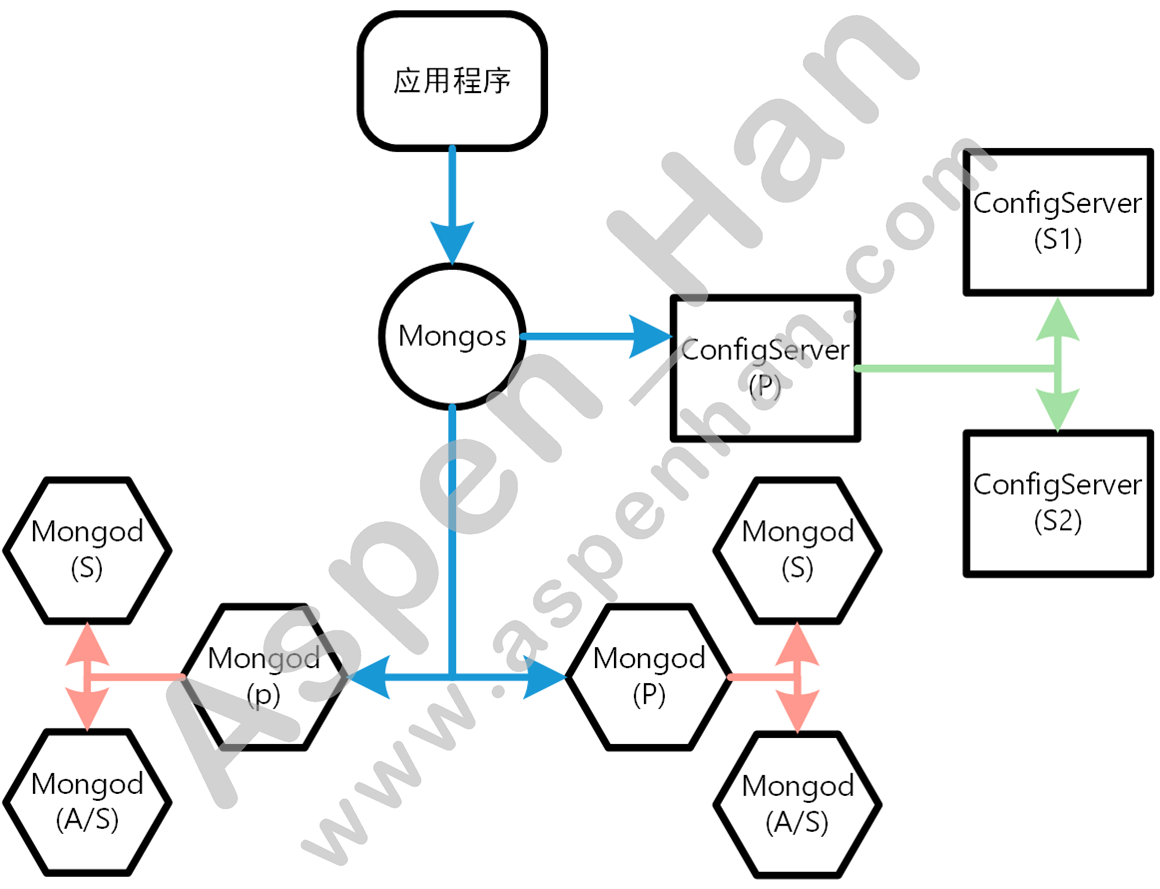

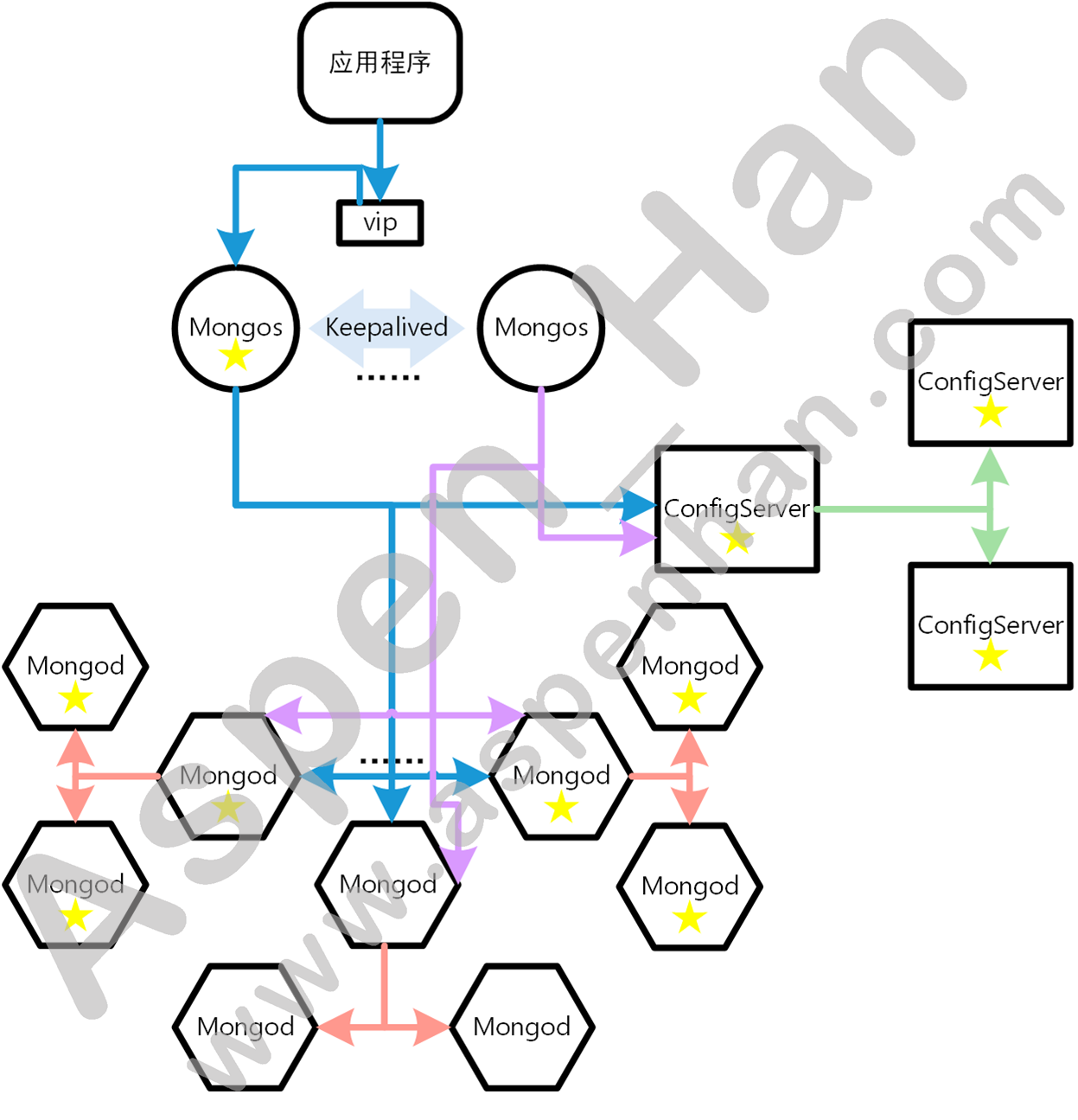

1. Basic architecture

In the deployment described here, a MongoDB sharded cluster consists of at least 10 instances.

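- Mongos: serves client requests; it is a stateless node.
- ConfigServer: stores the cluster's sharding policy and information about the back-end storage nodes (it must be a replica set with one primary and two secondaries).
- Mongod: storage (shard) nodes.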
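The figure of 10 instances follows from this section's layout: one mongos, a three-member config server replica set, and two shards (sh1 and sh2) with three members each. The Node.js arithmetic sketch below makes that count explicit. The parameter names are hypothetical, and the defaults mirror the layout used here.

```javascript
// Sketch: instance count = mongos + config server members + shards * members per shard.
function shardedClusterInstances({ mongos = 1, configMembers = 3, shards = 2, membersPerShard = 3 } = {}) {
  return mongos + configMembers + shards * membersPerShard;
}

console.log(shardedClusterInstances());              // 10
console.log(shardedClusterInstances({ shards: 3 })); // 13
```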

MongoDB Sharding Cluster logical structure

2. Deploying the storage (shard) nodes

step 1 Create the required directories

mkdir -p /<dir>/mongodb/<port>/{conf,data,logs}
chown -R mongod.mongod /<dir>/mongodb

#Host 1

[root@db01 ~]# ll /application/

total 0

lrwxrwxrwx 1 root root 34 Dec 8 10:39 mongodb -> mongodb-linux-x86_64-rhel70-3.6.23

drwxr-xr-x 3 mongod mongod 135 Dec 8 10:39 mongodb-linux-x86_64-rhel70-3.6.23

[root@db01 ~]# mkdir -p /data/mongodb/{30019,30020}/{conf,data,logs}

[root@db01 ~]# chown -R mongod. /data/mongodb

[root@db01 ~]# ll /data/mongodb -d

drwxr-xr-x 4 mongod mongod 32 Dec 10 14:30 /data/mongodb

[root@db01 ~]# tree /data/mongodb

/data/mongodb

├── 30019

│ ├── conf

│ ├── data

│ └── logs

└── 30020

├── conf

├── data

└── logs

8 directories, 0 files

#Host 2

[root@db02 /application]# ll /application/

total 0

lrwxrwxrwx 1 root root 47 Dec 10 14:24 mongodb -> /application/mongodb-linux-x86_64-rhel70-3.6.23

drwxr-xr-x 3 mongod mongod 135 Dec 10 14:22 mongodb-linux-x86_64-rhel70-3.6.23

[root@db02 /application]# mkdir -p /data/mongodb/{30019,30020}/{conf,data,logs}

[root@db02 /application]# chown -R mongod. /data/mongodb

[root@db02 /application]# ll -d /data/mongodb

drwxr-xr-x 4 mongod mongod 32 Dec 10 14:32 /data/mongodb

[root@db02 /application]# tree /data/mongodb

/data/mongodb

├── 30019

│ ├── conf

│ ├── data

│ └── logs

└── 30020

├── conf

├── data

└── logs

8 directories, 0 files

#Host 3

[root@db03 /application]# ll /application/

total 0

lrwxrwxrwx 1 root root 47 Dec 10 14:23 mongodb -> /application/mongodb-linux-x86_64-rhel70-3.6.23

drwxr-xr-x 3 mongod mongod 135 Dec 10 14:22 mongodb-linux-x86_64-rhel70-3.6.23

[root@db03 /application]# mkdir -p /data/mongodb/{30019,30020}/{conf,data,logs}

[root@db03 /application]# chown -R mongod. /data/mongodb

[root@db03 /application]# ll -d /data/mongodb

drwxr-xr-x 4 mongod mongod 32 Dec 10 14:34 /data/mongodb

[root@db03 /application]# tree /data/mongodb

/data/mongodb

├── 30019

│ ├── conf

│ ├── data

│ └── logs

└── 30020

├── conf

├── data

└── logs

8 directories, 0 files

step 2 Prepare the configuration files

systemLog:
  destination: file
  path: "/<dir>/<file>"
  logAppend: true
storage:
  journal:
    enabled: true
  dbPath: "/<dir>"
  directoryPerDB: true
  #engine: wiredTiger
  wiredTiger:                     #WiredTiger (wT) is the default engine since MongoDB 3.2
    engineConfig:
      cacheSizeGB: n              #storage engine cache of n GB (default: the larger of 50% of (RAM - 1 GB) or 256 MB)
      directoryForIndexes: true
    collectionConfig:
      blockCompressor: zlib       #enable data compression
    indexConfig:
      prefixCompression: true
net:
  bindIp: <IP address>
  port: <port>
replication:
  oplogSizeMB: m                  #size of the operation log (oplog, comparable to MySQL's binary log) in MB
  replSetName: <replica set name> #name of the replica set
sharding:
  clusterRole: shardsvr           #cluster role: data (shard) node
processManagement:
  fork: true
  pidFilePath: "/<dir>/<file>"

#Configuration file template

systemLog:
  destination: file
  path: "/data/mongodb/30019/logs/mongodb.log"
  logAppend: true
storage:
  journal:
    enabled: true
  dbPath: "/data/mongodb/30019/data/"
  directoryPerDB: true
  engine: wiredTiger
  wiredTiger:
    engineConfig:
      cacheSizeGB: 1
      directoryForIndexes: true
    collectionConfig:
      blockCompressor: zlib
    indexConfig:
      prefixCompression: true
net:
  bindIp: 10.0.0.151,127.0.0.1
  port: 30019
replication:
  oplogSizeMB: 2048
  replSetName: sh1
sharding:
  clusterRole: shardsvr
processManagement:
  fork: true
  pidFilePath: "/data/mongodb/30019/conf/mongodb.pid"

#Host 1

[root@db01 ~]# su - mongod

Last login: Fri Dec 10 14:16:57 CST 2021 on pts/0

[mongod@db01 ~]$ ls /data/mongodb/30019/conf/mongodb.conf -l

-rw-r--r-- 1 mongod mongod 620 Dec 10 14:45 /data/mongodb/30019/conf/mongodb.conf

[mongod@db01 ~]$ cd /data/mongodb/

[mongod@db01 /data/mongodb]$ cp -rp 30019/conf/mongodb.conf 30020/conf/

[mongod@db01 /data/mongodb]$ ll 30020/conf/mongodb.conf

-rw-r--r-- 1 mongod mongod 620 Dec 10 14:45 30020/conf/mongodb.conf

[mongod@db01 /data/mongodb]$ sed -i s#30019#30020#g 30020/conf/mongodb.conf

[mongod@db01 /data/mongodb]$ sed -i s#sh1#sh2#g 30020/conf/mongodb.conf

#Host 2

[root@db02 ~]# su - mongod

Last login: Fri Dec 10 14:51:50 CST 2021 on pts/0

[mongod@db02 ~]$ cd /data/mongodb/

[mongod@db02 /data/mongodb]$ scp -rp root@10.0.0.151:/data/mongodb/30019/conf/mongodb.conf 30019/conf/

root@10.0.0.151's password:

mongodb.conf 100% 620 771.8KB/s 00:00

[mongod@db02 /data/mongodb]$ scp -rp root@10.0.0.151:/data/mongodb/30020/conf/mongodb.conf 30020/conf/

root@10.0.0.151's password:

mongodb.conf 100% 620 781.6KB/s 00:00

[mongod@db02 /data/mongodb]$ ll 30019/conf/mongodb.conf 30020/conf/mongodb.conf

-rw-r--r-- 1 mongod mongod 620 Dec 10 14:45 30019/conf/mongodb.conf

-rw-r--r-- 1 mongod mongod 620 Dec 10 14:50 30020/conf/mongodb.conf

[mongod@db02 /data/mongodb]$ sed -i s#10.0.0.151#10.0.0.152#g 30019/conf/mongodb.conf

[mongod@db02 /data/mongodb]$ sed -i s#10.0.0.151#10.0.0.152#g 30020/conf/mongodb.conf

#Host 3

[root@db03 ~]# su - mongod

Last login: Fri Dec 10 14:27:39 CST 2021 on pts/0

[mongod@db03 ~]$ cd /data/mongodb/

[mongod@db03 /data/mongodb]$ scp -rp root@10.0.0.151:/data/mongodb/30019/conf/mongodb.conf 30019/conf/

root@10.0.0.151's password:

mongodb.conf 100% 620 788.4KB/s 00:00

[mongod@db03 /data/mongodb]$ scp -rp root@10.0.0.151:/data/mongodb/30020/conf/mongodb.conf 30020/conf/

root@10.0.0.151's password:

mongodb.conf 100% 620 727.0KB/s 00:00

[mongod@db03 /data/mongodb]$ ll 30019/conf/mongodb.conf 30020/conf/mongodb.conf

-rw-r--r-- 1 mongod mongod 620 Dec 10 14:45 30019/conf/mongodb.conf

-rw-r--r-- 1 mongod mongod 620 Dec 10 14:50 30020/conf/mongodb.conf

[mongod@db03 /data/mongodb]$ sed -i s#10.0.0.151#10.0.0.153#g 30019/conf/mongodb.conf

[mongod@db03 /data/mongodb]$ sed -i s#10.0.0.151#10.0.0.153#g 30020/conf/mongodb.conf

step 3 Start the nodes

mongod -f /<dir>/mongodb/<port>/<config file>

#Host 1

[mongod@db01 /data/mongodb]$ for i in 30019 30020;do mongod -f $i/conf/mongodb.conf;done

about to fork child process, waiting until server is ready for connections.

forked process: 8218

child process started successfully, parent exiting

about to fork child process, waiting until server is ready for connections.

forked process: 8250

child process started successfully, parent exiting

#Host 2

[mongod@db02 /data/mongodb]$ for i in 30019 30020;do mongod -f $i/conf/mongodb.conf;done

about to fork child process, waiting until server is ready for connections.

forked process: 8121

child process started successfully, parent exiting

about to fork child process, waiting until server is ready for connections.

forked process: 8149

child process started successfully, parent exiting

#Host 3

[mongod@db03 /data/mongodb]$ for i in 30019 30020;do mongod -f $i/conf/mongodb.conf;done

about to fork child process, waiting until server is ready for connections.

forked process: 7968

child process started successfully, parent exiting

about to fork child process, waiting until server is ready for connections.

forked process: 7996

child process started successfully, parent exiting

step 4 Build the replica sets

mongo -u <username> -p <password> <IP address>:<port>/admin
config = {_id: '<replica set name>', members: [
  {_id: 0, host: '<instance IP>:<port>'},
  {_id: 1, host: '<instance IP>:<port>'},
  {_id: 2, host: '<instance IP>:<port>', "arbiterOnly": true}]
}
rs.initiate(config)

#Host 2

[mongod@db02 /data/mongodb]$ mongo --port 30019 admin

......

> config = {_id: 'sh1', members:[

... {_id: 0, host: '10.0.0.152:30019'},

... {_id: 1, host: '10.0.0.153:30019'},

... {_id: 2, host: '10.0.0.151:30019',"arbiterOnly": true}]

... }

{

"_id" : "sh1",

"members" : [

{

"_id" : 0,

"host" : "10.0.0.152:30019"

},

{

"_id" : 1,

"host" : "10.0.0.153:30019"

},

{

"_id" : 2,

"host" : "10.0.0.151:30019",

"arbiterOnly" : true

}

]

}

> rs.initiate(config)

{ "ok" : 1 }

sh1:SECONDARY>

sh1:PRIMARY>

#Host 3

[mongod@db03 /data/mongodb]$ mongo --port 30020 admin

......

> config = {_id: 'sh2', members:[

... {_id: 0, host: '10.0.0.153:30020'},

... {_id: 1, host: '10.0.0.152:30020'},

... {_id: 2, host: '10.0.0.151:30020',"arbiterOnly": true}]

... }

{

"_id" : "sh2",

"members" : [

{

"_id" : 0,

"host" : "10.0.0.153:30020"

},

{

"_id" : 1,

"host" : "10.0.0.152:30020"

},

{

"_id" : 2,

"host" : "10.0.0.151:30020",

"arbiterOnly" : true

}

]

}

> rs.initiate(config)

{ "ok" : 1 }

sh2:SECONDARY>

sh2:PRIMARY>

3. Deploying the ConfigServer nodes

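Before version 3.4, the config server could be a single node, although a replica set was officially recommended. From 3.4 on, the config servers must form a replica set, and arbiters are not supported.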
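These rules can be checked on the config document before calling rs.initiate(). Besides having no arbiters, a config server replica set's document is expected to carry `configsvr: true`. The Node.js sketch below checks both. `validateConfigServerConfig` is a hypothetical helper, and the hosts in the usage example follow this section's port 30018 layout.

```javascript
// Sketch: return a list of problems with a config-server replica set document.
function validateConfigServerConfig(cfg) {
  const errors = [];
  if (cfg.configsvr !== true) errors.push("configsvr must be true");
  if (!Array.isArray(cfg.members) || cfg.members.length === 0) {
    errors.push("no members");
  } else if (cfg.members.some(m => m.arbiterOnly)) {
    errors.push("arbiters are not allowed");
  }
  return errors;
}

const csrs = { _id: "sh_config", configsvr: true, members: [
  { _id: 0, host: "10.0.0.151:30018" },
  { _id: 1, host: "10.0.0.152:30018" },
  { _id: 2, host: "10.0.0.153:30018" },
] };
console.log(validateConfigServerConfig(csrs)); // []
```

An empty list means the document passes these two checks only. It does not guarantee that rs.initiate() will succeed.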

step 1 Create the required directories

mkdir -p /<dir>/mongodb/<port>/{conf,data,logs}
chown -R mongod.mongod /<dir>/mongodb

#Host 1

[mongod@db01 /data/mongodb]$ mkdir 30018/{conf,data,logs} -p

[mongod@db01 /data/mongodb]$ ll -d 30018 && ll 30018

drwxrwxr-x 5 mongod mongod 42 Dec 10 15:21 30018

total 0

drwxrwxr-x 2 mongod mongod 6 Dec 10 15:21 conf

drwxrwxr-x 2 mongod mongod 6 Dec 10 15:21 data

drwxrwxr-x 2 mongod mongod 6 Dec 10 15:21 logs

# Host 2

[mongod@db02 /data/mongodb]$ mkdir 30018/{conf,data,logs} -p

[mongod@db02 /data/mongodb]$ ll -d 30018 && ll 30018

drwxrwxr-x 5 mongod mongod 42 Dec 10 15:23 30018

total 0

drwxrwxr-x 2 mongod mongod 6 Dec 10 15:23 conf

drwxrwxr-x 2 mongod mongod 6 Dec 10 15:23 data

drwxrwxr-x 2 mongod mongod 6 Dec 10 15:23 logs

# Host 3

[mongod@db03 /data/mongodb]$ mkdir 30018/{conf,data,logs} -p

[mongod@db03 /data/mongodb]$ ll -d 30018 && ll 30018

drwxrwxr-x 5 mongod mongod 42 Dec 10 15:23 30018

total 0

drwxrwxr-x 2 mongod mongod 6 Dec 10 15:23 conf

drwxrwxr-x 2 mongod mongod 6 Dec 10 15:23 data

drwxrwxr-x 2 mongod mongod 6 Dec 10 15:23 logs

step 2 Prepare the configuration file

systemLog:
  destination: file
  path: "/<dir>/<file name>"
  logAppend: true
storage:
  journal:
    enabled: true
  dbPath: "/<dir>"
  directoryPerDB: true
  #engine: wiredTiger
  wiredTiger:                  # WiredTiger is the default storage engine since MongoDB 3.2
    engineConfig:
      cacheSizeGB: n           # cache size of n GB; if unset, MongoDB 3.4+ uses the larger of 50% of (RAM - 1 GB) and 256 MB
      directoryForIndexes: true
    collectionConfig:
      blockCompressor: zlib    # compress collection data with zlib
    indexConfig:
      prefixCompression: true
net:
  bindIp: <IP address>
  port: <port>
replication:
  oplogSizeMB: m               # oplog size in MB (the oplog plays a role similar to MySQL's binary log)
  replSetName: <replica set name>    # name of the replica set
sharding:
  clusterRole: configsvr       # this node is a config server
processManagement:
  fork: true
  pidFilePath: "/<dir>/<file name>"
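The effect of leaving cacheSizeGB unset can be checked with a little arithmetic. A sketch, assuming MongoDB 3.4 or later (this cluster runs 3.6.23), where each mongod process that does not set cacheSizeGB sizes its cache as the larger of 50% of (RAM - 1 GB) and 256 MB. Each host in this cluster runs several mongod processes (config server and shard members), and each would claim that default independently; the RAM figure in the usage note is hypothetical.

```javascript
// Sketch of the default WiredTiger cache size for MongoDB 3.4 and later:
// the larger of 50% of (RAM - 1 GB) and 256 MB. All values are in GB.
function defaultWiredTigerCacheGB(ramGB) {
  return Math.max(0.5 * (ramGB - 1), 0.25);
}

// Total default cache if `mongodCount` mongod processes on one host all
// leave cacheSizeGB unset (each process sizes its cache independently).
function totalDefaultCacheGB(ramGB, mongodCount) {
  return defaultWiredTigerCacheGB(ramGB) * mongodCount;
}
```

totalDefaultCacheGB(4, 3) gives 4.5: three mongod processes on a hypothetical 4 GB host could together size their caches above the machine's RAM, which is one reason to pin cacheSizeGB explicitly, as the configuration below does with cacheSizeGB: 1.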

# Configuration file template
systemLog:
  destination: file
  path: "/data/mongodb/30018/logs/mongodb.log"
  logAppend: true
storage:
  journal:
    enabled: true
  dbPath: "/data/mongodb/30018/data/"
  directoryPerDB: true
  engine: wiredTiger
  wiredTiger:
    engineConfig:
      cacheSizeGB: 1
      directoryForIndexes: true
    collectionConfig:
      blockCompressor: zlib
    indexConfig:
      prefixCompression: true
net:
  bindIp: 10.0.0.151,127.0.0.1
  port: 30018
replication:
  oplogSizeMB: 2048
  replSetName: sh_config
sharding:
  clusterRole: configsvr
processManagement:
  fork: true
  pidFilePath: "/data/mongodb/30018/conf/mongodb.pid"

# Host 1

[mongod@db01 /data/mongodb]$ ll 30018/conf/mongodb.conf

-rw-r--r-- 1 mongod mongod 627 Dec 10 15:28 30018/conf/mongodb.conf

# Host 2

[mongod@db02 /data/mongodb]$ scp -rp root@10.0.0.151:/data/mongodb/30018/conf/mongodb.conf 30018/conf/

root@10.0.0.151's password:

mongodb.conf 100% 627 870.6KB/s 00:00

[mongod@db02 /data/mongodb]$ sed -i s#10.0.0.151#10.0.0.152#g 30018/conf/mongodb.conf

[mongod@db02 /data/mongodb]$ ll 30018/conf/mongodb.conf

-rw-r--r-- 1 mongod mongod 627 Dec 10 15:31 30018/conf/mongodb.conf

# Host 3

[mongod@db03 /data/mongodb]$ scp -rp root@10.0.0.151:/data/mongodb/30018/conf/mongodb.conf 30018/conf/

root@10.0.0.151's password:

mongodb.conf 100% 627 735.5KB/s 00:00

[mongod@db03 /data/mongodb]$ sed -i s#10.0.0.151#10.0.0.153#g 30018/conf/mongodb.conf

[mongod@db03 /data/mongodb]$ ll 30018/conf/mongodb.conf

-rw-r--r-- 1 mongod mongod 627 Dec 10 15:32 30018/conf/mongodb.conf

step 3 Start the nodes

mongod -f /<dir>/mongodb/<port>/conf/<config file>

# Host 1

[mongod@db01 /data/mongodb]$ mongod -f 30018/conf/mongodb.conf

about to fork child process, waiting until server is ready for connections.

forked process: 8467

child process started successfully, parent exiting

# Host 2

[mongod@db02 /data/mongodb]$ mongod -f 30018/conf/mongodb.conf

about to fork child process, waiting until server is ready for connections.

forked process: 8416

child process started successfully, parent exiting

# Host 3

[mongod@db03 /data/mongodb]$ mongod -f 30018/conf/mongodb.conf

about to fork child process, waiting until server is ready for connections.

forked process: 8186

child process started successfully, parent exiting

step 4 Build the replica set

mongo -u <username> -p <password> <IP address>:<port>/admin
config = {_id: '<replica set name>', members: [
  {_id: 0, host: '<member IP>:<port>'},
  {_id: 1, host: '<member IP>:<port>'},
  {_id: 2, host: '<member IP>:<port>'}]
}
rs.initiate(config)
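Because a config server replica set may not contain an arbiter (since 3.4), a quick check before rs.initiate() can catch a shard configuration that was copied over by mistake. checkConfigServerConfig is a hypothetical helper, not part of the shell; it only inspects the document and never contacts the server.

```javascript
// Hypothetical pre-flight check, not part of the mongo shell: looks for
// settings that a config server replica set does not allow.
// Returns an array of problem descriptions; empty means no problem found.
function checkConfigServerConfig(cfg) {
  var problems = [];
  if (!cfg || !Array.isArray(cfg.members) || cfg.members.length === 0) {
    problems.push("members must be a non-empty array");
    return problems;
  }
  var seenIds = {};
  cfg.members.forEach(function (m) {
    if (m.arbiterOnly) {
      // Since MongoDB 3.4, arbiters are not allowed in a config server replica set.
      problems.push("arbiter not allowed: " + m.host);
    }
    if (seenIds.hasOwnProperty(m._id)) {
      // Every replica set member needs a unique _id.
      problems.push("duplicate member _id: " + m._id);
    }
    seenIds[m._id] = true;
  });
  return problems;
}
```

In the mongo shell: var problems = checkConfigServerConfig(config); if (problems.length === 0) { rs.initiate(config) } else { printjson(problems) }.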

# Host 1

[mongod@db01 /data/mongodb]$ mongo --port 30018 admin

......

> config = {_id: 'sh_config', members:[

... {_id: 0, host: '10.0.0.151:30018'},

... {_id: 1, host: '10.0.0.152:30018'},

... {_id: 2, host: '10.0.0.153:30018'}]

... }

{

"_id" : "sh_config",

"members" : [

{

"_id" : 0,

"host" : "10.0.0.151:30018"

},

{

"_id" : 1,

"host" : "10.0.0.152:30018"

},

{

"_id" : 2,

"host" : "10.0.0.153:30018"

}

]

}

> rs.initiate(config)

{

"ok" : 1,

"operationTime" : Timestamp(1639121780, 1),

"$gleStats" : {

"lastOpTime" : Timestamp(1639121780, 1),

"electionId" : ObjectId("000000000000000000000000")

},

"$clusterTime" : {

"clusterTime" : Timestamp(1639121780, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

sh_config:SECONDARY>

sh_config:PRIMARY>

4. Deploy the mongos node

step 1 Create the required directories

mkdir -p /<dir>/mongodb/<port>/{conf,logs}
chown -R mongod.mongod /<dir>/mongodb

# Host 1

[mongod@db01 /data/mongodb]$ mkdir -p 30017/{conf,logs}

[mongod@db01 /data/mongodb]$ ll -d 30017;ll 30017

drwxrwxr-x 4 mongod mongod 30 Dec 10 15:39 30017

total 0

drwxrwxr-x 2 mongod mongod 6 Dec 10 15:39 conf

drwxrwxr-x 2 mongod mongod 6 Dec 10 15:39 logs

step 2 Prepare the configuration file

systemLog:
  destination: file
  path: "/<dir>/<file name>"
  logAppend: true
net:
  bindIp: <IP address>
  port: <port>
sharding:
  configDB: <config server replica set name>/<config server 1 IP>:<port>,<config server 2 IP>:<port>,<config server 3 IP>:<port>    # addresses of the config servers
processManagement:
  fork: true
  pidFilePath: "/<dir>/<file name>"

# Host 1

[mongod@db01 /data/mongodb]$ cat 30017/conf/mongodb.conf

systemLog:
  destination: file
  path: "/data/mongodb/30017/logs/mongodb.log"
  logAppend: true
net:
  bindIp: 10.0.0.151,127.0.0.1
  port: 30017
sharding:
  configDB: sh_config/10.0.0.151:30018,10.0.0.152:30018,10.0.0.153:30018
processManagement:
  fork: true
  pidFilePath: "/data/mongodb/30017/conf/mongodb.pid"

step 3 Start the node

mongos -f /<dir>/mongodb/<port>/conf/<config file>

# Host 1

[mongod@db01 /data/mongodb]$ cd 30017/conf/

[mongod@db01 /data/mongodb/30017/conf]$ mongos -f mongodb.conf

about to fork child process, waiting until server is ready for connections.

forked process: 8789

child process started successfully, parent exiting

5. Add shards to the cluster

step 1 Connect to mongos

su - mongod
mongo -u <username> -p <password> <IP address>:<port>/admin

[mongod@db01 /data/mongodb/30017/conf]$ mongo --port 30017 admin

MongoDB shell version v3.6.23

connecting to: mongodb://127.0.0.1:30017/admin?gssapiServiceName=mongodb

Implicit session: session { "id" : UUID("11faa6ed-9678-437a-804e-e82bcea9019e") }

MongoDB server version: 3.6.23

Server has startup warnings:

2021-12-10T15:56:40.406+0800 I CONTROL [main]

2021-12-10T15:56:40.406+0800 I CONTROL [main] ** WARNING: Access control is not enabled for the database.

2021-12-10T15:56:40.406+0800 I CONTROL [main] ** Read and write access to data and configuration is unrestricted.

2021-12-10T15:56:40.406+0800 I CONTROL [main]

mongos>

step 2 Add the shards

db.runCommand( { addshard : "<shard replica set name>/<member 1 IP>:<port>,<member 2 IP>:<port>,<member 3 IP>:<port>", name: "<shard ID>" } )
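addShard here and sharding.configDB in the mongos configuration use the same "<replica set name>/<host:port>,..." seed-list format. The hypothetical helper below builds that string. The list does not have to be complete: mongos only needs to reach one listed member to discover the rest of the replica set.

```javascript
// Hypothetical helper: builds the "<replSetName>/<host:port>,<host:port>,..."
// seed list used by addShard and by sharding.configDB.
function replSetSeedList(replSetName, hosts) {
  if (!replSetName || !hosts || hosts.length === 0) {
    throw new Error("a replica set name and at least one host are required");
  }
  return replSetName + "/" + hosts.join(",");
}
```

In the mongo shell: db.runCommand({ addshard: replSetSeedList("sh1", ["10.0.0.152:30019", "10.0.0.153:30019"]), name: "shared1" }).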

mongos> db.runCommand( { addshard: "sh1/10.0.0.152:30019,10.0.0.153:30019,10.0.0.151:30019", name: "shared1" } )

{

"shardAdded" : "shared1",

"ok" : 1,

"operationTime" : Timestamp(1639127104, 7),

"$clusterTime" : {

"clusterTime" : Timestamp(1639127104, 7),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

mongos> db.runCommand( { addshard: "sh2/10.0.0.152:30020,10.0.0.153:30020,10.0.0.151:30020", name: "shared2" } )

{

"shardAdded" : "shared2",

"ok" : 1,

"operationTime" : Timestamp(1639127124, 5),

"$clusterTime" : {

"clusterTime" : Timestamp(1639127124, 5),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

step 3 List the shards

Only data-bearing members are listed; arbiters are not shown.

use admin
db.runCommand({ listshards : 1 })

mongos> db.runCommand( {listshards: 1} )

{

"shards" : [

{

"_id" : "shared1",

"host" : "sh1/10.0.0.152:30019,10.0.0.153:30019",

"state" : 1

},

{

"_id" : "shared2",

"host" : "sh2/10.0.0.152:30020,10.0.0.153:30020",

"state" : 1

}

],

"ok" : 1,

"operationTime" : Timestamp(1639127174, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1639127174, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

step 4 Check the cluster status

sh.status()

mongos> sh.status()

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("61b3038192a6c74e8b9b8d61")

}

shards:

{ "_id" : "shared1", "host" : "sh1/10.0.0.152:30019,10.0.0.153:30019", "state" : 1 }

{ "_id" : "shared2", "host" : "sh2/10.0.0.152:30020,10.0.0.153:30020", "state" : 1 }

active mongoses:

"3.6.23" : 1

autosplit:

Currently enabled: yes

balancer:

Currently enabled: yes

Currently running: no

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

No recent migrations

databases:

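{ "_id" : "config", "primary" : "config", "partitioned" : true }

VI. Sharding data in a MongoDB Sharding Cluster

In practice, choose a field that most queries filter on as the shard key: mongos can then route each query only to the shards that hold the matching data, instead of broadcasting it to every shard.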
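The routing argument can be made concrete with a simplified model. isTargetable below is hypothetical and much simpler than mongos's real query routing: it treats any condition on the leading field of a ranged shard key as targetable, and for a hashed key it accepts only equality-style predicates (a plain value, $eq or $in), because a range condition on a hashed key cannot be mapped to chunks and is sent to all shards.

```javascript
// Simplified model, not mongos's actual routing logic: decides whether a
// filter lets mongos target specific shards (true) or forces it to
// broadcast the query to every shard (false).
function isOperatorDoc(value) {
  // { $gt: 1, $lt: 6 } is an operator document; plain values are not.
  if (value === null || typeof value !== "object") return false;
  var keys = Object.keys(value);
  return keys.length > 0 && keys.every(function (k) { return k.charAt(0) === "$"; });
}

function isTargetable(filter, shardKey) {
  var field = Object.keys(shardKey)[0];            // leading shard key field
  if (!Object.prototype.hasOwnProperty.call(filter, field)) return false;
  if (shardKey[field] !== "hashed") return true;   // ranged key: treated as targetable
  var cond = filter[field];
  // Hashed key: only equality-style predicates map to known hash values.
  return !isOperatorDoc(cond) || "$eq" in cond || "$in" in cond;
}
```

For the range-sharded Young.test below, isTargetable({ id: { $gt: 1, $lt: 6 } }, { id: 1 }) is true, while the same filter against the hashed key { id: "hashed" } is false.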

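1. Range sharding configuration

step 1 Enable sharding on the database

su - mongod
mongo -u <username> -p <password> <IP address>:<port>/admin    # log in to the mongos node
db.runCommand( { enablesharding : "<database name>" } )          # enable sharding for the database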

[root@db01 ~]# su - mongod

Last login: Tue Dec 14 13:16:03 CST 2021 on pts/0

[mongod@db01 ~]$ mongo 10.0.0.151:30017/admin

MongoDB shell version v3.6.23

connecting to: mongodb://10.0.0.151:30017/admin?gssapiServiceName=mongodb

......

mongos> db.runCommand( { enablesharding: "Young" } )

{

"ok" : 1,

"operationTime" : Timestamp(1639460767, 8),

"$clusterTime" : {

"clusterTime" : Timestamp(1639460767, 8),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

step 2 Create the index

use <database>
db.<collection>.ensureIndex( { <index field> : 1 } )    # index the given field (ensureIndex is a deprecated alias of createIndex)

mongos> use Young

switched to db Young

mongos> db.test.ensureIndex( { id: 1 } )

{

"raw" : {

"sh2/10.0.0.152:30020,10.0.0.153:30020" : {

"createdCollectionAutomatically" : true,

"numIndexesBefore" : 1,

"numIndexesAfter" : 2,

"ok" : 1

}

},

"ok" : 1,

"operationTime" : Timestamp(1639460811, 3),

"$clusterTime" : {

"clusterTime" : Timestamp(1639460811, 3),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

step 3 Shard the collection

use admin
db.runCommand( { shardcollection : "<database name>.<collection>", key : { <index field> : 1 } } )    # range-shard the collection; chunks are ordered by ascending key value

mongos> use admin

switched to db admin

mongos> db.runCommand( { shardcollection: "Young.test", key: {id:1} } )

{

"collectionsharded" : "Young.test",

"collectionUUID" : UUID("9b7de174-a6e3-4270-81b7-61c5add5166b"),

"ok" : 1,

"operationTime" : Timestamp(1639461093, 8),

"$clusterTime" : {

"clusterTime" : Timestamp(1639461093, 8),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

step 4 Generate 10 million test documents

use <database>
for (i = 0; i < 10000000; i++) {
  db.<collection>.insert({"id": i, "name": "Han", "age": 27, "date": new Date()});
}
db.<collection>.stats()
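The loop above issues one insert() call, and one round trip, per document. A sketch of a faster alternative, assuming the shell's insertMany() (available since MongoDB 3.2), groups the documents into batches. insertInBatches and makeDoc are hypothetical names; the collection is passed in as a parameter so the function can be exercised without a server.

```javascript
// Sketch: insert `total` generated documents in batches via insertMany
// (mongo shell, MongoDB 3.2+) instead of one insert() call per document.
// `coll` is any object with an insertMany(docs) method, such as db.test;
// makeDoc(i) returns the i-th document. Returns the number of batches sent.
function insertInBatches(coll, total, batchSize, makeDoc) {
  var batch = [];
  var batches = 0;
  for (var i = 0; i < total; i++) {
    batch.push(makeDoc(i));
    if (batch.length === batchSize) {
      coll.insertMany(batch);
      batches++;
      batch = [];
    }
  }
  if (batch.length > 0) {
    // Flush the final, partially filled batch.
    coll.insertMany(batch);
    batches++;
  }
  return batches;
}
```

In the mongo shell: insertInBatches(db.test, 10000000, 1000, function (i) { return { id: i, name: "Han", age: 27, date: new Date() }; }).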

mongos> use Young

switched to db Young

mongos> for(i=0;i<10000000;i++) { db.test.insert( { "id": i, "name": "Han", "age": 27, "date": new Date() } ); }

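WriteResult({ "nInserted" : 1 })

step 5 Verify the sharding result

Connect directly to the primary of each shard and count the documents stored there:

mongo -u <username> -p <password> <IP address>:<port>/<database>
db.<collection>.count()    # number of documents stored on this shard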

[mongod@db02 ~]$ mongo 10.0.0.153:30019

MongoDB shell version v3.6.23

......

sh1:PRIMARY> use Young

switched to db Young

sh1:PRIMARY> db.test.count()

5079450

[mongod@db02 ~]$ mongo 10.0.0.152:30020

MongoDB shell version v3.6.23

......

sh2:PRIMARY> use Young

switched to db Young

sh2:PRIMARY> db.test.count()

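4918696

2. Hash sharding configuration

Compared with range sharding, hashed sharding spreads data more evenly across the shards. This matters most for monotonically increasing keys such as the id used here: under range sharding every new, larger id falls into the last chunk, so all inserts hit one shard, whereas hashing scatters consecutive values. In practice, hashed sharding is used more often.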
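A small simulation illustrates the difference. It is only an illustration under stated assumptions: MongoDB hashes shard key values with its own 64-bit hash, whereas this sketch substitutes the 32-bit fmix32 finalizer from MurmurHash3, and it models just two chunks in each case (a range split at a fixed key, and the hash space split in half).

```javascript
// Stand-in hash: MurmurHash3's fmix32 finalizer (not MongoDB's hash function).
function fmix32(x) {
  var h = x >>> 0;
  h ^= h >>> 16;
  h = Math.imul(h, 0x85ebca6b);
  h ^= h >>> 13;
  h = Math.imul(h, 0xc2b2ae35);
  h ^= h >>> 16;
  return h >>> 0;
}

// Range sharding: chunk 0 = [minKey, splitPoint), chunk 1 = [splitPoint, maxKey).
function rangeChunk(id, splitPoint) {
  return id < splitPoint ? 0 : 1;
}

// Hashed sharding: split the 32-bit hash space in half.
function hashedChunk(id) {
  return fmix32(id) < 0x80000000 ? 0 : 1;
}

// Count how many ids in [from, to) land in each of the two chunks.
function distribute(from, to, chunkOf) {
  var counts = [0, 0];
  for (var id = from; id < to; id++) counts[chunkOf(id)]++;
  return counts;
}
```

With existing ids split at 5000, the next thousand ids all land in the upper chunk: distribute(10000, 11000, function (id) { return rangeChunk(id, 5000); }) returns [0, 1000], while distribute(10000, 11000, hashedChunk) spreads the same ids over both chunks.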

step 1 Enable sharding on the database

su - mongod
mongo -u <username> -p <password> <IP address>:<port>/admin    # log in to the mongos node
db.runCommand( { enablesharding : "<database name>" } )          # enable sharding for the database

[root@db01 ~]# su - mongod

Last login: Tue Dec 14 13:18:40 CST 2021 on pts/0

[mongod@db01 ~]$ mongo 10.0.0.151:30017/admin

MongoDB shell version v3.6.23

connecting to: mongodb://10.0.0.151:30017/admin?gssapiServiceName=mongodb

......

mongos> db.runCommand( { enablesharding: "Aspen" } )

{

"ok" : 1,

"operationTime" : Timestamp(1639462737, 508),

"$clusterTime" : {

"clusterTime" : Timestamp(1639462737, 512),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

step 2 Create the index

use <database>
db.<collection>.ensureIndex( { <index field> : "hashed" } )    # build a hashed index on the given field

mongos> use Aspen

switched to db Aspen

mongos> db.test.ensureIndex( { id: "hashed" } )

{

"raw" : {

"sh1/10.0.0.152:30019,10.0.0.153:30019" : {

"createdCollectionAutomatically" : true,

"numIndexesBefore" : 1,

"numIndexesAfter" : 2,

"ok" : 1

}

},

"ok" : 1,

"operationTime" : Timestamp(1639462832, 297),

"$clusterTime" : {

"clusterTime" : Timestamp(1639462832, 297),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

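}

step 3 Shard the collection

use admin
sh.shardCollection( "<database name>.<collection>", { <index field> : "hashed" } )    # hash-shard the collection; documents are placed by the hash of the key, so key order is not preserved across chunks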

mongos> use admin

switched to db admin

mongos> sh.shardCollection( "Aspen.test", {id: "hashed"} )

{

"collectionsharded" : "Aspen.test",

"collectionUUID" : UUID("f5638ee5-9558-4b7b-8fec-987d2ef1c2aa"),

"ok" : 1,

"operationTime" : Timestamp(1639463219, 33),

"$clusterTime" : {

"clusterTime" : Timestamp(1639463219, 33),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

step 4 Generate 10 million test documents

use <database>
for (i = 0; i < 10000000; i++) {
  db.<collection>.insert({"id": i, "name": "Han", "age": 27, "date": new Date()});
}
db.<collection>.stats()

mongos> use Aspen

switched to db Aspen

mongos> for(i=0;i<10000000;i++) { db.test.insert( { "id": i, "name": "Han", "age": 29, "date": new Date() } ); }

WriteResult({ "nInserted" : 1 })

step 5 Verify the sharding result

mongo -u <username> -p <password> <IP address>:<port>/<database>
db.<collection>.count()    # number of documents stored on this shard

[mongod@db02 ~]$ mongo 10.0.0.152:30020

MongoDB shell version v3.6.23

......

sh2:PRIMARY> use Aspen

switched to db Aspen

sh2:PRIMARY> db.test.count()

5368729

sh2:PRIMARY>

bye

[mongod@db02 ~]$ mongo 10.0.0.153:30019/Aspen

MongoDB shell version v3.6.23

......

sh1:PRIMARY> db.test.count(

... )

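5684172

The two per-shard counts add up to 11,052,901, more than the 10 million documents inserted. This is most likely because count() run directly on a shard also includes orphaned documents (copies left behind by chunk migrations that have not been cleaned up yet), so per-shard counts are only an approximate check of the distribution.

3. Querying the sharded cluster

Check that the current connection is to a mongos (a mongos answers with "isdbgrid" : 1)

db.runCommand({ isdbgrid : 1 })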

mongos> db.runCommand( { isdbgrid : 1 } )

{

"isdbgrid" : 1,

"hostname" : "db01",

"ok" : 1,

"operationTime" : Timestamp(1639465804, 538),

"$clusterTime" : {

"clusterTime" : Timestamp(1639465804, 538),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

List the shards in the cluster

use admin
db.runCommand({ listshards : 1 })

mongos> use admin

switched to db admin


mongos> db.runCommand( { listshards : 1 } )

{

"shards" : [

{

"_id" : "shared1",

"host" : "sh1/10.0.0.152:30019,10.0.0.153:30019",

"state" : 1

},

{

"_id" : "shared2",

"host" : "sh2/10.0.0.152:30020,10.0.0.153:30020",

"state" : 1

}

],

"ok" : 1,

"operationTime" : Timestamp(1639466261, 780),

"$clusterTime" : {

"clusterTime" : Timestamp(1639466261, 780),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

List the databases that have sharding enabled

use config
db.databases.find({ "partitioned": true })

mongos> db.databases.find( { "partitioned" : true } )

{ "_id" : "Young", "primary" : "shared2", "partitioned" : true }

{ "_id" : "Aspen", "primary" : "shared1", "partitioned" : true }

List all databases and their primary shards

use config
db.databases.find()

mongos> db.databases.find()

{ "_id" : "Young", "primary" : "shared2", "partitioned" : true }

{ "_id" : "Aspen", "primary" : "shared1", "partitioned" : true }

Show the shard key of each sharded collection

use config
db.collections.find().pretty()

mongos> use config

switched to db config

mongos> db.collections.find().pretty()

{

"_id" : "config.system.sessions",

"lastmodEpoch" : ObjectId("61b3190a92a6c74e8b9be0a7"),

"lastmod" : ISODate("1970-02-19T17:02:47.296Z"),

"dropped" : false,

"key" : {

"_id" : 1

},

"unique" : false,

"uuid" : UUID("b5ac344c-a3f4-467a-8dbe-adefcf797632")

}

{

"_id" : "Young.test",

"lastmodEpoch" : ObjectId("61b830e5398300a219d904d7"),

"lastmod" : ISODate("1970-02-19T17:02:47.296Z"),

"dropped" : false,

"key" : {

"id" : 1

},

"unique" : false,

"uuid" : UUID("9b7de174-a6e3-4270-81b7-61c5add5166b")

}

{

"_id" : "Aspen.test",

"lastmodEpoch" : ObjectId("61b83933398300a219d93d72"),

"lastmod" : ISODate("1970-02-19T17:02:47.297Z"),

"dropped" : false,

"key" : {

"id" : "hashed"

},

"unique" : false,

"uuid" : UUID("f5638ee5-9558-4b7b-8fec-987d2ef1c2aa")

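}

Show detailed sharding information

A chunk is the smallest unit of data that a sharded cluster splits and migrates between shards. The default chunk size is 64 MB; once a chunk grows past that size, it is split automatically.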
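The chunk size gives a rough lower bound on how many chunks a collection needs: since a chunk is split once it grows past the chunk size, each chunk holds at most about that much data. minChunkCount is a hypothetical helper, and the document size in the usage note is made up; real chunk counts are typically higher, because a split leaves chunks only partly filled.

```javascript
// Rough lower bound on the number of chunks: each chunk holds at most about
// chunkSizeMB of data before it is split, so (data size / chunk size) chunks
// are needed at minimum. Defaults to the 64 MB chunk size.
function minChunkCount(docCount, avgDocBytes, chunkSizeMB) {
  var chunkBytes = (chunkSizeMB || 64) * 1024 * 1024;
  return Math.max(1, Math.ceil((docCount * avgDocBytes) / chunkBytes));
}
```

For example, 10 million documents of a hypothetical 70 bytes each need at least minChunkCount(10000000, 70, 64) = 11 chunks. In the mongo shell, the count and avgObjSize fields returned by db.test.stats() should supply the first two arguments for a real collection.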

sh.status()

mongos> sh.status()

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("61b3038192a6c74e8b9b8d61")

}

shards:

{ "_id" : "shared1", "host" : "sh1/10.0.0.152:30019,10.0.0.153:30019", "state" : 1 }

{ "_id" : "shared2", "host" : "sh2/10.0.0.152:30020,10.0.0.153:30020", "state" : 1 }

active mongoses:

"3.6.23" : 1

autosplit:

Currently enabled: yes

balancer:

Currently enabled: yes

Currently running: no

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

12 : Success

databases:

{ "_id" : "Aspen", "primary" : "shared1", "partitioned" : true }

Aspen.test

shard key: { "id" : "hashed" }

unique: false

balancing: true

chunks:

shared1 5

shared2 5

{ "id" : { "$minKey" : 1 } } -->> { "id" : NumberLong("-6994122501772378439") } on : shared2 Timestamp(5, 0)

{ "id" : NumberLong("-6994122501772378439") } -->> { "id" : NumberLong("-4751297228397006543") } on : shared2 Timestamp(4, 1)

{ "id" : NumberLong("-4751297228397006543") } -->> { "id" : NumberLong("-4611686018427387902") } on : shared2 Timestamp(3, 4)

{ "id" : NumberLong("-4611686018427387902") } -->> { "id" : NumberLong(0) } on : shared2 Timestamp(3, 0)

{ "id" : NumberLong(0) } -->> { "id" : NumberLong("2086432348249647571") } on : shared2 Timestamp(6, 0)

{ "id" : NumberLong("2086432348249647571") } -->> { "id" : NumberLong("4164993257909830524") } on : shared1 Timestamp(6, 1)

{ "id" : NumberLong("4164993257909830524") } -->> { "id" : NumberLong("4611686018427387902") } on : shared1 Timestamp(4, 7)

{ "id" : NumberLong("4611686018427387902") } -->> { "id" : NumberLong("6768181353365070074") } on : shared1 Timestamp(4, 2)

{ "id" : NumberLong("6768181353365070074") } -->> { "id" : NumberLong("8924138232636859280") } on : shared1 Timestamp(4, 3)

{ "id" : NumberLong("8924138232636859280") } -->> { "id" : { "$maxKey" : 1 } } on : shared1 Timestamp(4, 4)

{ "_id" : "Young", "primary" : "shared2", "partitioned" : true }

Young.test

shard key: { "id" : 1 }

unique: false

balancing: true

chunks:

shared1 7

shared2 7

{ "id" : { "$minKey" : 1 } } -->> { "id" : 1 } on : shared1 Timestamp(7, 1)

{ "id" : 1 } -->> { "id" : 500001 } on : shared1 Timestamp(3, 0)

{ "id" : 500001 } -->> { "id" : 750002 } on : shared2 Timestamp(8, 1)

{ "id" : 750002 } -->> { "id" : 1000002 } on : shared2 Timestamp(3, 2)

{ "id" : 1000002 } -->> { "id" : 1352219 } on : shared2 Timestamp(3, 3)

{ "id" : 1352219 } -->> { "id" : 1602219 } on : shared1 Timestamp(4, 2)

{ "id" : 1602219 } -->> { "id" : 2005945 } on : shared1 Timestamp(4, 3)

{ "id" : 2005945 } -->> { "id" : 2255945 } on : shared2 Timestamp(5, 2)

{ "id" : 2255945 } -->> { "id" : 2584948 } on : shared2 Timestamp(5, 3)

{ "id" : 2584948 } -->> { "id" : 2834948 } on : shared1 Timestamp(6, 2)

{ "id" : 2834948 } -->> { "id" : 3133702 } on : shared1 Timestamp(6, 3)

{ "id" : 3133702 } -->> { "id" : 3383702 } on : shared2 Timestamp(7, 2)

{ "id" : 3383702 } -->> { "id" : 3650683 } on : shared2 Timestamp(7, 3)

{ "id" : 3650683 } -->> { "id" : { "$maxKey" : 1 } } on : shared1 Timestamp(8, 0)

{ "_id" : "config", "primary" : "config", "partitioned" : true }

config.system.sessions

shard key: { "_id" : 1 }

unique: false

balancing: true

chunks:

shared1 512

shared2 512

too many chunks to print, use verbose if you want to force print

4. Removing a shard (proceed with caution)

step 1 Confirm that the balancer is enabled

sh.getBalancerState()

[mongod@db01 ~]$ mongo --port 30017

MongoDB shell version v3.6.23

......

mongos> sh.getBalancerState()

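true

step 2 Remove the shard (its data is migrated away first, then the shard is taken offline)

removeShard starts draining immediately: the balancer begins moving the shard's chunks to the remaining shards, which is why step 1 checks that the balancer is enabled. Databases whose primary shard is the one being removed are listed in dbsToMove and must be moved with movePrimary (or dropped) before the removal can complete.

mongo -u <username> -p <password> <IP address>:<port>/admin    # log in to the mongos node
db.runCommand( { removeShard: "<shard name>" } )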
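removeShard is not a one-shot command: it is issued again to check progress, and its state moves from "started" to "ongoing" to "completed". The sketch below polls it. drainShard is a hypothetical helper; the command runner and the pause function are injected so that the loop can be exercised without a cluster. It gives up after maxPolls instead of looping forever, since the removal cannot complete until every database listed in dbsToMove has been handled.

```javascript
// Sketch of a drain loop for removeShard (hypothetical helper). removeShard
// is re-issued to check progress; it reports state "started", "ongoing" and
// finally "completed". runAdminCommand(cmd) runs an admin command and
// returns its result; pause() waits between polls.
function drainShard(runAdminCommand, shardName, pause, maxPolls) {
  var dbsToMove = [];
  for (var i = 0; i < maxPolls; i++) {
    var res = runAdminCommand({ removeShard: shardName });
    if (res.ok !== 1) {
      throw new Error("removeShard failed: " + JSON.stringify(res));
    }
    // Remember databases whose primary shard must be moved (movePrimary).
    (res.dbsToMove || []).forEach(function (d) {
      if (dbsToMove.indexOf(d) === -1) dbsToMove.push(d);
    });
    if (res.state === "completed") {
      return { completed: true, polls: i + 1, dbsToMove: dbsToMove };
    }
    pause();
  }
  return { completed: false, polls: maxPolls, dbsToMove: dbsToMove };
}
```

In the mongo shell: drainShard(function (cmd) { return db.adminCommand(cmd); }, "shared2", function () { sleep(10000); }, 360).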

mongos> db.runCommand( { removeShard: "shared2" } )

{

"msg" : "draining started successfully",

"state" : "started",

"shard" : "shared2",

"note" : "you need to drop or movePrimary these databases",

"dbsToMove" : [

"Young"

],

"ok" : 1,

"operationTime" : Timestamp(1639530676, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1639530676, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

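}

VII. Balancer

1. Overview

The balancer is the background process that monitors how chunks are distributed across the shards and migrates chunks automatically to even out the distribution. Since MongoDB 3.4 it runs on the primary of the config server replica set; in earlier versions it ran inside mongos.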

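2. When it runs

- Automatically, in the background, whenever the number of chunks differs too much between shards. It does not measure system load itself, so an active window (see below) is the way to keep migrations out of busy periods;
- Immediately when a shard is removed, to drain that shard's chunks;
- Only within the active window, if one has been configured.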

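3. Configure automatic balancing (balancer active window)

Notes on choosing the balancer window:
1. Pick a period when the system is relatively idle.
2. Avoid the MongoDB backup window; chunks migrating while a backup runs can make the backup inconsistent.

mongo -u <username> -p <password> <IP address>:<port>    # log in to the mongos node
use config
sh.setBalancerState( true )    # enable the balancer
db.settings.update({ _id : "balancer" }, { $set : { activeWindow : { start : "<start time>", stop : "<stop time>" } } }, true)    # set the balancer active window
sh.getBalancerWindow()    # show the balancer active window
sh.status()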
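The activeWindow start and stop are times of day in the server's local time, as the sh.status() output below states, and a window may span midnight (for example start "23:00", stop "6:00"). inActiveWindow is a hypothetical helper that reproduces this check; treating stop as exclusive is an assumption of the sketch, not documented behavior.

```javascript
// Hypothetical helper: is the time of day `now` ("H:MM" or "HH:MM") inside a
// balancer activeWindow { start, stop }? A window whose start is later than
// its stop wraps past midnight. Treating stop as exclusive is an assumption.
function toMinutes(hhmm) {
  var parts = hhmm.split(":");
  return parseInt(parts[0], 10) * 60 + parseInt(parts[1], 10);
}

function inActiveWindow(now, win) {
  var t = toMinutes(now);
  var start = toMinutes(win.start);
  var stop = toMinutes(win.stop);
  if (start <= stop) {
    return t >= start && t < stop;   // same-day window, e.g. 2:00-4:00
  }
  return t >= start || t < stop;     // overnight window, e.g. 23:00-6:00
}
```

In the mongo shell, inActiveWindow("3:00", sh.getBalancerWindow()) returns true for the 2:00-4:00 window configured below.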

[mongod@db01 ~]$ mongo --port 30017 config

MongoDB shell version v3.6.23

......

mongos> db;

config

mongos> sh.setBalancerState( true )

{

"ok" : 1,

"operationTime" : Timestamp(1639533969, 3),

"$clusterTime" : {

"clusterTime" : Timestamp(1639533969, 3),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

mongos> db.settings.update( { _id: "balancer"}, { $set: {activeWindow: { start: "2:00", stop: "4:00" } } }, true )

WriteResult({ "nMatched" : 1, "nUpserted" : 0, "nModified" : 1 })

mongos> sh.getBalancerState()

true

mongos> sh.getBalancerWindow()

{ "start" : "2:00", "stop" : "4:00" }

mongos> sh.status()

--- Sharding Status ---

......

balancer:

Currently enabled: yes

Currently running: no

Balancer active window is set between 2:00 and 4:00 server local time

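......

Enable or disable balancing for a single collection:

sh.disableBalancing( "<database>.<collection>" )    # disable balancing for the collection
sh.enableBalancing( "<database>.<collection>" )     # enable balancing for the collection
db.getSiblingDB("config").collections.findOne({_id : "<database>.<collection>"}).noBalance;    # true if balancing is disabled for the collection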

mongos> sh.disableBalancing( "Aspen.test" )

WriteResult({ "nMatched" : 1, "nUpserted" : 0, "nModified" : 1 })

mongos> db.getSiblingDB( "config" ).collections.findOne( {_id: "Aspen.test" } ).noBalance;

true

mongos> sh.enableBalancing( "Aspen.test" )

WriteResult({ "nMatched" : 1, "nUpserted" : 0, "nModified" : 1 })

mongos> db.getSiblingDB( "config" ).collections.findOne( {_id: "Aspen.test" } ).noBalance;

false

VIII. MongoDB backup and restore

1. Backup/restore tools

- mongoexport / mongoimport

- mongodump / mongorestore

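| Tool | Backup type | Use cases | File format |
|---|---|---|---|
| mongoexport / mongoimport | Logical export | 1. Migration between heterogeneous platforms, e.g. MySQL <--> MongoDB; 2. Migration across major versions on the same platform, e.g. MongoDB 2 <--> MongoDB 3 | json/csv |
| mongodump / mongorestore | Binary (BSON) dump | Routine backup and restore | bson |

The BSON storage layout differs slightly between versions, so BSON dumps should not be used to migrate across major MongoDB versions.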

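2. Using the backup/restore tools

mongoexport/mongoimport handle one collection per run; they cannot export or import several collections in a single batch.

mongoexport (export tool)

mongoexport --help    # show mongoexport usage

Options used in the examples below: -u/-p (user name/password), -h/--port (host and port), --authenticationDatabase, -d (database), -c (collection), -f (comma-separated field list), --type (json or csv), -o (output file).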

Most databases can read and write CSV, so CSV is mainly used to move data between different database products.

# Export a whole collection as JSON

[mongod@db01 ~]$ mongoexport -uroot -p123456 -h 10.0.0.151 --port 27017 -d Young -c han -o ~/test.json --authenticationDatabase admin

2021-12-15T11:41:42.964+0800 connected to: 10.0.0.151:27017

2021-12-15T11:41:43.121+0800 exported 10000 records

[mongod@db01 ~]$ tail -5 test.json

{"_id":{"$oid":"61b9610e53ed183cb735e70d"},"id":9995.0,"Op":"Young","event":"mongodb","instansID":27017.0,"date":{"$date":"2021-12-15T03:29:18.652Z"}}

{"_id":{"$oid":"61b9610e53ed183cb735e70e"},"id":9996.0,"Op":"Young","event":"mongodb","instansID":27017.0,"date":{"$date":"2021-12-15T03:29:18.652Z"}}

{"_id":{"$oid":"61b9610e53ed183cb735e70f"},"id":9997.0,"Op":"Young","event":"mongodb","instansID":27017.0,"date":{"$date":"2021-12-15T03:29:18.653Z"}}

{"_id":{"$oid":"61b9610e53ed183cb735e710"},"id":9998.0,"Op":"Young","event":"mongodb","instansID":27017.0,"date":{"$date":"2021-12-15T03:29:18.655Z"}}

{"_id":{"$oid":"61b9610e53ed183cb735e711"},"id":9999.0,"Op":"Young","event":"mongodb","instansID":27017.0,"date":{"$date":"2021-12-15T03:29:18.655Z"}}

# Export selected fields as CSV

[mongod@db01 ~]$ mongoexport -uroot -p123456 -h 10.0.0.151 --port 27017 -d Young -c han -f id,Op,event,instansID,date --type=csv -o ~/test.csv --authenticationDatabase admin

2021-12-15T13:26:57.283+0800 connected to: 10.0.0.151:27017

2021-12-15T13:26:57.348+0800 exported 10000 records

[mongod@db01 ~]$ head -5 test.csv

id,Op,event,instansID,date

0,Young,mongodb,27017,2021-12-15T03:29:16.438Z

1,Young,mongodb,27017,2021-12-15T03:29:16.443Z

2,Young,mongodb,27017,2021-12-15T03:29:16.443Z

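3,Young,mongodb,27017,2021-12-15T03:29:16.444Z

mongoimport (import tool)

mongoimport --help    # show mongoimport usage

Options used in the examples below: --file (input file), --type (json or csv), --headerline (take field names from the first line of a CSV file), -f (field names when the file has no header line), and -u/-p, -h/--port, --authenticationDatabase, -d, -c for the connection, database and collection, as with mongoexport.

# Import JSON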

[mongod@db01 ~]$ mongoimport -uroot -p123456 -h 10.0.0.151 --port 27017 -d Aspen -c log --authenticationDatabase admin --file test.json

2021-12-15T13:38:22.686+0800 connected to: 10.0.0.151:27017

2021-12-15T13:38:22.895+0800 imported 10000 documents

[mongod@db01 ~]$ mongo -uroot -p123456 admin

MongoDB shell version v3.6.23

......

> use Aspen

switched to db Aspen

> show tables;

log

> db.log.find( {"id": {$gt:1, $lt:6}} )

{ "_id" : ObjectId("61b9610c53ed183cb735c004"), "id" : 2, "Op" : "Young", "event" : "mongodb", "instansID" : 27017, "date" : ISODate("2021-12-15T03:29:16.443Z") }

{ "_id" : ObjectId("61b9610c53ed183cb735c005"), "id" : 3, "Op" : "Young", "event" : "mongodb", "instansID" : 27017, "date" : ISODate("2021-12-15T03:29:16.444Z") }

{ "_id" : ObjectId("61b9610c53ed183cb735c006"), "id" : 4, "Op" : "Young", "event" : "mongodb", "instansID" : 27017, "date" : ISODate("2021-12-15T03:29:16.444Z") }

{ "_id" : ObjectId("61b9610c53ed183cb735c007"), "id" : 5, "Op" : "Young", "event" : "mongodb", "instansID" : 27017, "date" : ISODate("2021-12-15T03:29:16.444Z") }

# Import CSV with --headerline (the first line of the file holds the field names)

[mongod@db01 ~]$ mongoimport -uroot -p123456 -h 10.0.0.151 --port 27017 -d Aspen -c test --type=csv --headerline --authenticationDatabase admin --file test.csv

2021-12-15T13:46:05.646+0800 connected to: 10.0.0.151:27017

2021-12-15T13:46:05.721+0800 imported 10000 documents

> db.test.find( { "id": {$gte: 0, $lt: 5}} )

{ "_id" : ObjectId("61b9811d835e0eb6f31edf4f"), "id" : 0, "Op" : "Young", "event" : "mongodb", "instansID" : 27017, "date" : "2021-12-15T03:29:16.438Z" }

{ "_id" : ObjectId("61b9811d835e0eb6f31edf50"), "id" : 1, "Op" : "Young", "event" : "mongodb", "instansID" : 27017, "date" : "2021-12-15T03:29:16.443Z" }

{ "_id" : ObjectId("61b9811d835e0eb6f31edf51"), "id" : 2, "Op" : "Young", "event" : "mongodb", "instansID" : 27017, "date" : "2021-12-15T03:29:16.443Z" }

{ "_id" : ObjectId("61b9811d835e0eb6f31edf52"), "id" : 3, "Op" : "Young", "event" : "mongodb", "instansID" : 27017, "date" : "2021-12-15T03:29:16.444Z" }

{ "_id" : ObjectId("61b9811d835e0eb6f31edf53"), "id" : 4, "Op" : "Young", "event" : "mongodb", "instansID" : 27017, "date" : "2021-12-15T03:29:16.444Z" }

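# Import CSV with -f <fields> (the first line of the file is data)
# Note: the sed command below was run against test.json instead of test.csv, so test.csv kept its header row; that row was imported as an extra document, hence "imported 10001 documents". Run sed -i '1d' test.csv to strip the header before importing this way.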

[mongod@db01 ~]$ sed -i '1d' test.json

[mongod@db01 ~]$ head -1 test.json

{"_id":{"$oid":"61b9610c53ed183cb735c003"},"id":1.0,"Op":"Young","event":"mongodb","instansID":27017.0,"date":{"$date":"2021-12-15T03:29:16.443Z"}}

[mongod@db01 ~]$ mongoimport -uroot -p123456 -h 10.0.0.151 --port 27017 -d Aspen -c csv.test --type=csv -f id,Op,event,instanceID,date --authenticationDatabase admin --file test.csv

2021-12-15T14:01:53.142+0800 connected to: 10.0.0.151:27017

2021-12-15T14:01:53.221+0800 imported 10001 documents

[mongod@db01 ~]$ mongo -uroot -p123456 admin

MongoDB shell version v3.6.23

......

> use Aspen

switched to db Aspen

> show tables;

csv.test

log

test

> db.csv.test.find( { "id": {$gte: 0, $lt: 3} } )

{ "_id" : ObjectId("61b984d1835e0eb6f31f2dda"), "id" : 0, "Op" : "Young", "event" : "mongodb", "instanceID" : 27017, "date" : "2021-12-15T03:29:16.438Z" }

{ "_id" : ObjectId("61b984d1835e0eb6f31f2ddb"), "id" : 1, "Op" : "Young", "event" : "mongodb", "instanceID" : 27017, "date" : "2021-12-15T03:29:16.443Z" }

{ "_id" : ObjectId("61b984d1835e0eb6f31f2ddc"), "id" : 2, "Op" : "Young", "event" : "mongodb", "instanceID" : 27017, "date" : "2021-12-15T03:29:16.443Z" }

mongodump (backup tool)

mongodump backs up only the admin database and the application databases; it does not back up the local and config databases.

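How it works: mongodump queries the running mongod and writes every document it reads to disk. The result is therefore not necessarily a point-in-time snapshot: if data is written while the backup is running, the backup may not match the live data. Taking a dump can also hurt performance for other clients.

mongodump can run while MongoDB is online and supports full backups, per-database backups and per-collection backups.

mongodump --help    # show mongodump usage

Options used in the examples below: -d (database), -c (collection), -o (output directory), --gzip (compress the output files), plus the connection options -u/-p, -h/--port and --authenticationDatabase.

Note: do not combine --oplog (which also records the oplog entries written while the dump runs, for a point-in-time restore with mongorestore --oplogReplay) with --gzip; doing so corrupts the oplog content in the backup.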

[mongod@db01 ~]$ cd /data/mongodb/

[mongod@db01 /data/mongodb]$ mkdir backup

# Full backup (all databases)

[mongod@db01 /data/mongodb]$ mongodump -uroot -p123456 -h 10.0.0.151 --port 27017 --authenticationDatabase admin -o /data/mongodb/backup/

2021-12-16T08:54:53.422+0800 writing admin.system.users to

2021-12-16T08:54:53.423+0800 done dumping admin.system.users (1 document)

......

2021-12-16T08:54:55.483+0800 done dumping world.city (4079 documents)

2021-12-16T08:54:56.375+0800 [........................] Young.t100w 101/1000000 (0.0%)

2021-12-16T08:54:59.342+0800 [########................] Young.t100w 341888/1000000 (34.2%)

2021-12-16T08:55:02.409+0800 [########################] Young.t100w 1000000/1000000 (100.0%)

2021-12-16T08:55:02.409+0800 done dumping Young.t100w (1000000 documents)

[mongod@db01 /data/mongodb]$ tree backup/

backup/

├── admin

│ ├── system.users.bson

│ ├── system.users.metadata.json

│ ├── system.version.bson

│ └── system.version.metadata.json

├── Aspen

│ ├── csv.test.bson

│ ├── csv.test.metadata.json

│ ├── log.bson

│ ├── log.metadata.json

│ ├── test.bson

│ └── test.metadata.json

├── world

│ ├── city.bson

│ └── city.metadata.json

└── Young

├── han.bson

├── han.metadata.json

├── t100w.bson

└── t100w.metadata.json

4 directories, 16 files

[mongod@db01 /data/mongodb]$ cat backup/world/city.metadata.json

{"options":{},"indexes":[{"v":2,"key":{"_id":1},"name":"_id_","ns":"world.city"}],"uuid":"a4d34d5a68ec40569008796d82263cc0"}

# Back up a single database

[mongod@db01 /data/mongodb]$ rm -rf backup/*

[mongod@db01 /data/mongodb]$ ll backup/

total 0

[mongod@db01 /data/mongodb]$ mongodump -uroot -p123456 -h 10.0.0.151 --port 27017 --authenticationDatabase admin -d world -o backup

2021-12-16T09:06:43.173+0800 writing world.city to

2021-12-16T09:06:43.179+0800 done dumping world.city (4079 documents)

[mongod@db01 /data/mongodb]$ tree backup/

backup/

└── world

├── city.bson

└── city.metadata.json

1 directory, 2 files

# Compressed backup of a single collection

[mongod@db01 /data/mongodb]$ mongodump -uroot -p123456 -h 10.0.0.151 --port 27017 --authenticationDatabase admin -d Young -c t100w --gzip -o backup

2021-12-16T09:09:48.588+0800 writing Young.t100w to

2021-12-16T09:09:51.589+0800 [##############..........] Young.t100w 603387/1000000 (60.3%)

2021-12-16T09:09:53.235+0800 [########################] Young.t100w 1000000/1000000 (100.0%)

2021-12-16T09:09:53.235+0800 done dumping Young.t100w (1000000 documents)

[mongod@db01 /data/mongodb]$ tree backup/

backup/

└── Young

├── t100w.bson.gz

└── t100w.metadata.json.gz

1 directory, 2 files

mongodump writes its files in BSON format; use the bsondump command to view the contents of a BSON file.

[mongod@db01 /data/mongodb]$ file backup/world/city.bson

backup/world/city.bson: data

[mongod@db01 /data/mongodb]$ bsondump backup/world/city.bson| head -3

{"_id":{"$oid":"61b9a061835e0eb6f31f5533"},"ID":1,"Name":"Kabul","CountryCode":"AFG","District":"Kabol","Population":1780000}

{"_id":{"$oid":"61b9a061835e0eb6f31f5534"},"ID":2,"Name":"Qandahar","CountryCode":"AFG","District":"Qandahar","Population":237500}

{"_id":{"$oid":"61b9a061835e0eb6f31f5535"},"ID":3,"Name":"Herat","CountryCode":"AFG","District":"Herat","Population":186800}

2021-12-16T09:01:26.537+0800 471 objects found

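2021-12-16T09:01:26.537+0800 write /dev/stdout: broken pipe

mongorestore (restore tool)

mongorestore can restore a specific database or a specific collection from a full backup.

mongorestore --help    # show mongorestore usage

Options used in the examples below: -d (target database), -c (target collection), --gzip (read gzip-compressed dump files), plus the connection options -u/-p, -h/--port and --authenticationDatabase; the last argument is the dump file or directory to restore from.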

[mongod@db01 /data/mongodb]$ rm -rf backup/*

[mongod@db01 /data/mongodb]$ mongodump -uroot -p123456 -h 10.0.0.151 --port 27017 --authenticationDatabase admin --gzip -o backup/

2021-12-16T09:16:40.314+0800 writing admin.system.users to

......

2021-12-16T09:16:45.191+0800 done dumping Young.t100w (1000000 documents)
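As the tree listings above show, every collection in a dump is stored as a .bson file (.bson.gz with --gzip) next to a matching .metadata.json (.metadata.json.gz) file. Before restoring, a quick check can confirm that no data file is missing its metadata. The helper check_dump_dir below is hypothetical. It is a sketch that assumes the <backup>/<db>/<collection> layout shown above and deliberately ignores files directly under the backup root.

```shell
#!/usr/bin/env bash
# Sketch: sanity-check a mongodump output directory before restoring from it.
# Assumes <root>/<db>/<collection>.bson[.gz] plus <collection>.metadata.json[.gz];
# files directly under <root> (e.g. an oplog.bson from --oplog) are ignored.
check_dump_dir() {
  local root=$1 f meta status=0
  [ -d "$root" ] || { echo "not a directory: $root" >&2; return 1; }
  while IFS= read -r f; do
    case $f in
      *.bson.gz) meta=${f%.bson.gz}.metadata.json.gz ;;
      *)         meta=${f%.bson}.metadata.json ;;
    esac
    if [ ! -f "$meta" ]; then
      echo "missing metadata for: $f" >&2
      status=1
    fi
  done < <(find "$root" -mindepth 2 -maxdepth 2 -type f \( -name '*.bson' -o -name '*.bson.gz' \))
  return "$status"
}

# Usage: check_dump_dir /data/mongodb/backup && echo "every .bson file has its metadata"
```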

#Single-collection restore

[mongod@db01 /data/mongodb]$ mongorestore -uroot -p123456 -h 10.0.0.151 --port 27017 -d Han -c test --gzip --authenticationDatabase admin backup/Young/t100w.bson.gz

2021-12-16T09:28:19.346+0800 checking for collection data in backup/Young/t100w.bson.gz

......

2021-12-16T09:28:23.522+0800 no indexes to restore

2021-12-16T09:28:23.522+0800 finished restoring Han.test (1000000 documents)

2021-12-16T09:28:23.522+0800 done

[mongod@db01 /data/mongodb]$ mongo -uroot -p123456 admin

MongoDB shell version v3.6.23

......

> use Han

switched to db Han

> show collections

test
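Because mongodump writes backup/<db>/<collection>.bson[.gz], the -d and -c values can be derived from the dump file's own path. The following is a hedged sketch. restore_collection is a hypothetical helper, and MONGORESTORE can be set to echo to print the command instead of running it. The optional second and third arguments restore into a different database and collection, as in the Han.test example above.

```shell
#!/usr/bin/env bash
# Sketch: restore one collection from a mongodump file, taking the database and
# collection names from the path backup/<db>/<collection>.bson[.gz].
# Connection options are copied from the example above; helper name is hypothetical.
MONGORESTORE=${MONGORESTORE:-mongorestore}   # set MONGORESTORE=echo for a dry run

restore_collection() {
  local file=$1 db coll
  local gz=()
  db=$(basename "$(dirname "$file")")
  coll=$(basename "$file")
  coll=${coll%.gz}
  coll=${coll%.bson}
  # .gz files come from mongodump --gzip, so mongorestore needs --gzip as well
  case $file in *.gz) gz=(--gzip) ;; esac
  "$MONGORESTORE" -uroot -p123456 -h 10.0.0.151 --port 27017 \
    --authenticationDatabase admin -d "${2:-$db}" -c "${3:-$coll}" "${gz[@]}" "$file"
}

# Usage: restore_collection backup/Young/t100w.bson.gz Han test
```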

#Single-database restore

[mongod@db01 /data/mongodb]$ mongorestore -uroot -p123456 -h 10.0.0.151 --port 27017 -d TEST --gzip --authenticationDatabase admin backup/Young/

......

2021-12-16T09:33:39.266+0800 done

[mongod@db01 /data/mongodb]$ mongo -uroot -p123456 admin

MongoDB shell version v3.6.23

......

> use TEST

switched to db TEST

> show tables;

han

t100w

> use Young

switched to db Young

> show tables;

han

t100w
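After a single-database restore, the collections reported by show tables should match the data files in the dump directory. The hypothetical helper dump_collections prints those names so the two lists can be compared. It is a sketch that assumes the <collection>.bson[.gz] naming shown earlier. The mongo comparison in the usage comment is likewise an untested illustration.

```shell
#!/usr/bin/env bash
# Sketch: list the collections a database dump directory should produce,
# i.e. the base names of its <collection>.bson / <collection>.bson.gz files.
dump_collections() {
  local f name
  for f in "$1"/*.bson "$1"/*.bson.gz; do
    [ -e "$f" ] || continue   # an unmatched glob stays literal; skip it
    name=${f##*/}
    name=${name%.gz}
    printf '%s\n' "${name%.bson}"
  done | sort
}

# Usage (hypothetical comparison against the restored TEST database):
# diff <(dump_collections backup/Young) \
#      <(mongo -uroot -p123456 --quiet \
#          --eval 'db.getSiblingDB("TEST").getCollectionNames().forEach(function (n) { print(n) })' admin | sort)
```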

#Full restore (all databases)

[mongod@db01 /data/mongodb]$ mongo -uroot -p123456 admin

......

> show dbs;

admin 0.000GB

config 0.000GB

local 0.000GB

[mongod@db01 /data/mongodb]$ mongorestore -uroot -p123456 -h 10.0.0.151 --port 27017 --gzip --authenticationDatabase admin backup/

2021-12-16T09:40:48.581+0800 preparing collections to restore from

......

2021-12-16T09:40:52.637+0800 done

[mongod@db01 /data/mongodb]$ mongo -uroot -p123456 admin

MongoDB shell version v3.6.23

......

> show dbs;

Aspen 0.002GB

Young 0.049GB

admin 0.000GB

config 0.000GB

local 0.000GB

world 0.000GB

3. Data migration between heterogeneous platforms (MySQL <--> MongoDB)

- Migrating data into MongoDB

step 1 Configure the MySQL secure file path (secure_file_priv)

|

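cat >>/path/to/my.cnf <<EOF
secure-file-priv=/backup/path
EOF |

The option must sit in the [mysqld] section. Because cat >> appends to the end of the file, this form only works when [mysqld] is the last section of my.cnf.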
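The demonstration below inserts the option at line 22, which only works for that exact my.cnf layout. A more robust approach inserts the option right after the [mysqld] header and leaves the file untouched when the option is already present. The sketch below does this with set_secure_file_priv, a hypothetical helper rather than a MySQL tool.

```shell
#!/usr/bin/env bash
# Sketch: add secure_file_priv=<dir> to the [mysqld] section of a MySQL option file.
# Idempotent: does nothing if the option (dash or underscore spelling) is already set.
set_secure_file_priv() {
  local cnf=$1 dir=$2 tmp
  if grep -Eq '^[[:space:]]*secure[-_]file[-_]priv[[:space:]]*=' "$cnf"; then
    return 0
  fi
  tmp=$(mktemp) || return 1
  # Print every line; right after the first [mysqld] header, also print the option.
  if awk -v opt="secure_file_priv=$dir" '
      { print }
      /^[ \t]*\[mysqld\][ \t]*$/ && !done { print opt; done = 1 }
      END { exit (done ? 0 : 1) }
    ' "$cnf" > "$tmp"; then
    cat "$tmp" > "$cnf"   # overwrite in place, keeping my.cnf's owner and mode
    rm -f "$tmp"
  else
    rm -f "$tmp"
    echo "no [mysqld] section in $cnf" >&2
    return 1
  fi
}

# Usage (as root): set_secure_file_priv /etc/my.cnf /data/mysql/backup
```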

[root@db01 ~]# sed -i '22 i\secure_file_priv=/data/mysql/backup' /etc/my.cnf

[root@db01 ~]# sed -n 22p /etc/my.cnf

secure_file_priv=/data/mysql/backup

step 2 Restart the database

| service mysqld restart |

[root@db01 ~]# systemctl restart mysqld

step 3 Export the MySQL data

| select * from db_name.table_name into outfile '/backup/path/file_name.csv' fields terminated by ','; |

|

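Parameters:
fields terminated by ','       #fields are separated by "," (the default is a tab)
optionally enclosed by '"'     #enclose values in " (OPTIONALLY: only values of string columns)
escaped by '"'                 #use " as the escape character inside fields (the default is a backslash)
lines terminated by '\r\n'     #end each line with \r\n (the default is \n) |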
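With OPTIONALLY ENCLOSED BY '"', string values are wrapped in double quotes. A value that contains a comma therefore still lands in a single CSV column, and mongoimport --type=csv accepts double-quoted fields. The hypothetical outfile_sql helper below is a sketch that only prints the statement. It does not handle values that themselves contain double quotes, or NULL values.

```shell
#!/usr/bin/env bash
# Sketch: print an export statement for one table, quoting string values with "
# so embedded commas do not split a column. Helper name is hypothetical.
outfile_sql() {
  local db=$1 table=$2 out=$3
  printf "SELECT * FROM %s.%s INTO OUTFILE '%s' FIELDS TERMINATED BY ',' OPTIONALLY ENCLOSED BY '\"';\n" \
    "$db" "$table" "$out"
}

# Usage: outfile_sql world city /data/mysql/backup/city.csv | mysql
```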

mysql> select * from world.city into outfile '/data/mysql/backup/city.csv' fields terminated by ',';

Query OK, 4079 rows affected (0.02 sec)

step 4 Prepare the export file

|

desc db_name.table_name;          #view the table structure
vim /backup/path/file_name.csv    #add a first line containing the column names |
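Instead of typing the header in vim, the column names can be read from information_schema in table order, which is also the column order that select * produces. The sketch below uses two hypothetical helpers. The first, csv_header, assumes the mysql client logs in without a password prompt, as the mysql -e 'desc world.city;' call below does. The second, add_csv_header, writes the header only if line 1 is not already that header.

```shell
#!/usr/bin/env bash
# Sketch: replace the manual "desc + vim" step. Helper names are hypothetical.

# Print the columns of db.table, comma-separated, in table order.
csv_header() {
  mysql -N -B -e "SELECT GROUP_CONCAT(COLUMN_NAME ORDER BY ORDINAL_POSITION)
                  FROM information_schema.COLUMNS
                  WHERE TABLE_SCHEMA = '$1' AND TABLE_NAME = '$2';"
}

# Put the header on line 1 of the file unless it is already there.
add_csv_header() {
  local file=$1 header=$2 tmp rc=0
  [ "$(head -n 1 "$file")" = "$header" ] && return 0
  tmp=$(mktemp) || return 1
  { printf '%s\n' "$header"; cat "$file"; } > "$tmp" && cat "$tmp" > "$file" || rc=1
  rm -f "$tmp"   # cat > "$file" above keeps the file's owner and mode
  return "$rc"
}

# Usage: add_csv_header /data/mysql/backup/city.csv "$(csv_header world city)"
```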

[root@db01 ~]# mysql -e 'desc world.city;'

+-------------+----------+------+-----+---------+----------------+

| Field | Type | Null | Key | Default | Extra |

+-------------+----------+------+-----+---------+----------------+

| ID | int | NO | PRI | NULL | auto_increment |

| Name | char(35) | NO | | | |

| CountryCode | char(3) | NO | MUL | | |

| District | char(20) | NO | | | |

| Population | int | NO | | 0 | |

+-------------+----------+------+-----+---------+----------------+

[root@db01 ~]# sed -i '1 i\ID,Name,CountryCode,District,Population' /data/mysql/backup/city.csv

[root@db01 ~]# head -2 /data/mysql/backup/city.csv

ID,Name,CountryCode,District,Population

1,Kabul,AFG,Kabol,1780000

[root@db01 ~]# ll /data/mysql/backup/city.csv

-rw-r----- 1 mysql mysql 143605 Dec 15 15:52 /data/mysql/backup/city.csv

[root@db01 ~]# chmod o+r /data/mysql/backup/city.csv

[root@db01 ~]# ll /data/mysql/backup/city.csv

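-rw-r--r-- 1 mysql mysql 143605 Dec 15 15:52 /data/mysql/backup/city.csv

The export is owned by mysql with mode 640 (-rw-r-----), so chmod o+r is what lets the mongod user, which runs mongoimport in the next step, read it.

step 5 Import the data into MongoDB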

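| mongoimport -u<username> -p<password> -h <IP> --port <port> --authenticationDatabase admin -d <db_name> -c <collection_name> --type=csv -f <field1>,<field2>,<field3>,... --file /backup/path/file_name.csv |

-f supplies the field names for a CSV without a header line. Because step 4 already added the column names as the first line, the command below uses --headerline instead; use one option or the other, not both.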

[mongod@db01 ~]$ mongoimport -uroot -p123456 -h 10.0.0.151 --port 27017 -d world -c city --headerline --type=csv --authenticationDatabase=admin --file=/data/mysql/backup/city.csv

2021-12-15T15:59:29.806+0800 connected to: 10.0.0.151:27017

2021-12-15T15:59:29.843+0800 imported 4079 documents

step 6 Verify

|

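mongo -u<username> -p<password> --host <IP> --port <port> --authenticationDatabase admin   #log in to the database
use <db_name>
db.<collection>.count()
db.<collection>.find()
db.<collection>.find({<key>: <value>}).pretty()   #query with a specified condition |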
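A simple cross-check is to compare the number of data rows in the CSV with the count in MongoDB. The hypothetical csv_data_rows helper below counts the lines after the header and assumes that no field contains a line break. The mongo comparison in the usage comment is an untested illustration.

```shell
#!/usr/bin/env bash
# Sketch: count the data rows of a CSV that has exactly one header line.
# Assumes no quoted field spans several lines. Helper name is hypothetical.
csv_data_rows() {
  tail -n +2 "$1" | wc -l | tr -d ' '
}

# Usage (hypothetical):
# expected=$(csv_data_rows /data/mysql/backup/city.csv)
# actual=$(mongo -uroot -p123456 --quiet \
#            --eval 'print(db.getSiblingDB("world").city.count())' admin)
# [ "$expected" = "$actual" ] && echo "row counts match: $actual"
```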

[mongod@db01 ~]$ mongo -uroot -p123456 admin

MongoDB shell version v3.6.23

......