I. Introduction to Kubernetes

Kubernetes, also called k8s, is a cluster management and orchestration tool for Docker containers.

1. Core features

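- Self-healing: restarts failed containers; when a node becomes unavailable, its containers are moved to other nodes and run there.

- Autoscaling: monitors the CPU load of containers and automatically adds or removes containers according to defined rules.

- Service discovery and load balancing: automatically discovers newly started containers and adds them to the load balancer.

- Rolling updates and one-step rollback: containers are upgraded and rolled back incrementally.

- Secret and configuration management.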

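2. Ways to install k8s

| Installation method | Notes |
|---|---|
| YUM | Easy to install; good for learning |
| Compile from source | Harder; can install the latest version |
| Binary packages | Many tedious steps; can install the latest version |
| kubeadm | Easy to install; can install the latest version |
| minikube | Lets developers try out k8s |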

3. Use cases

- Microservices

II. Installing a Kubernetes cluster

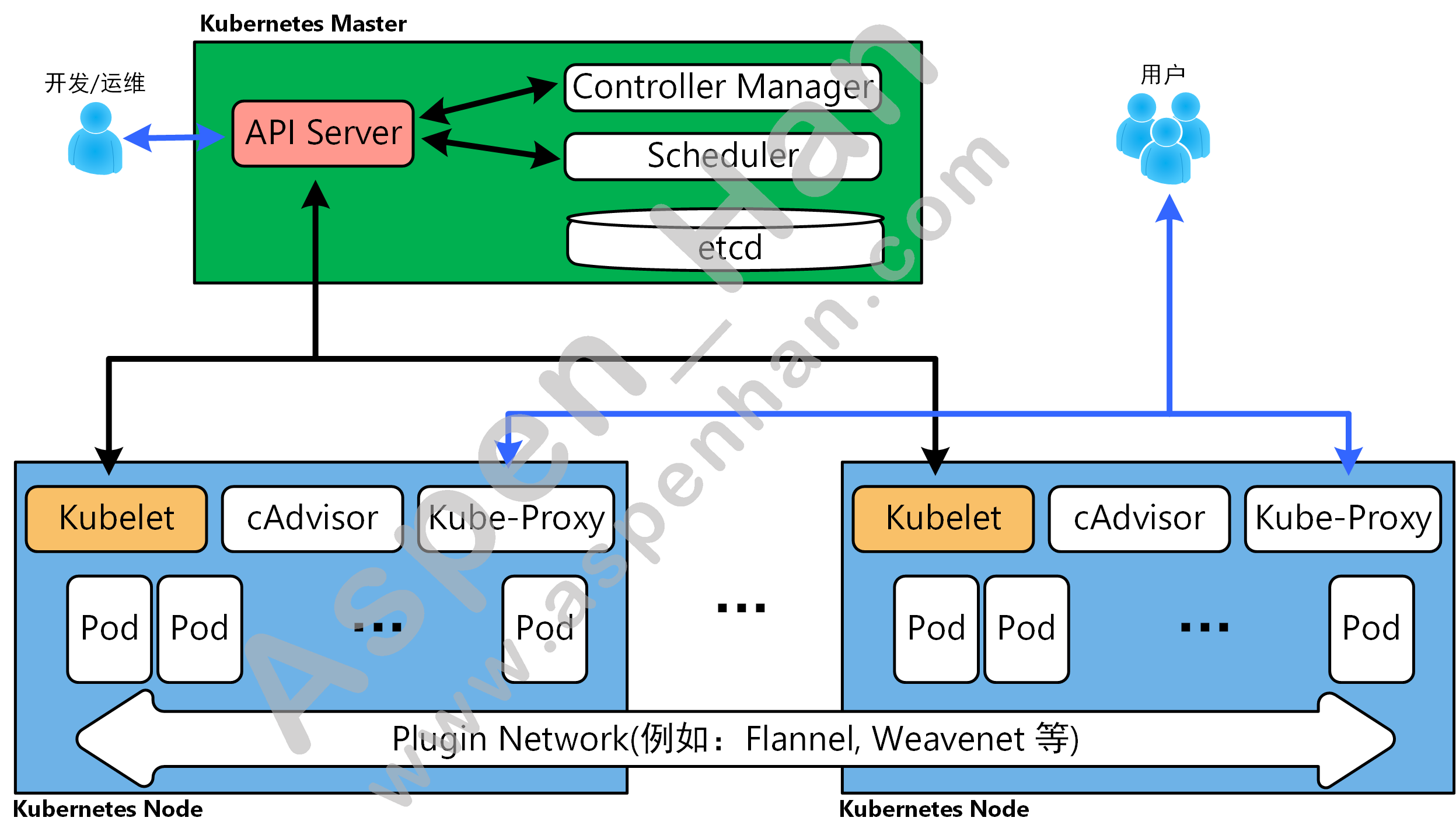

1. k8s architecture

Core components

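- API Server: the core component of the cluster; the entry point through which operators manage the k8s cluster.

- Controller Manager: monitors the state of containers and implements the cluster's self-healing.

- Scheduler: the cluster scheduler; decides which host's resources are used to create each container.

- etcd: the cluster database; stores container information.

- Kubelet: the agent on each node through which the Kubernetes master manages that node's resources. The kubelet relies on Docker to manage containers, and different Docker versions require matching kubelet versions.

- Kube-proxy: implements load balancing and reverse proxying in the k8s cluster.

- Pod: encapsulates Docker containers.

- cAdvisor: container monitoring component (built into the kubelet).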
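To see how these components map onto the machines in this YUM-based setup, the sketch below lists the systemd units each role runs (unit names as installed later in this article, including flanneld from the network section) and reports whether each one is active. The function names and the SYSTEMCTL override are hypothetical helpers, not part of any package.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: which systemd units run on each role in this
# YUM-based setup, and whether they are currently active.

# k8s_units ROLE: print the units for "master" or "node", one per line.
k8s_units() {
  case "$1" in
    master) printf '%s\n' etcd kube-apiserver kube-controller-manager kube-scheduler flanneld ;;
    node)   printf '%s\n' flanneld docker kubelet kube-proxy ;;
    *)      echo "unknown role: $1" >&2; return 1 ;;
  esac
}

# k8s_unit_status ROLE: print "<unit> <state>" for every unit of the role.
# SYSTEMCTL may be overridden (e.g. for a dry run); it defaults to systemctl.
k8s_unit_status() {
  local role="$1" units unit state
  units="$(k8s_units "$role")" || return 1
  while read -r unit; do
    # is-active prints active/inactive/failed/unknown; keep the text, ignore the exit code
    state="$(${SYSTEMCTL:-systemctl} is-active "$unit" </dev/null 2>/dev/null)"
    printf '%s %s\n' "$unit" "${state:-unknown}"
  done <<< "$units"
}
```

After sourcing the script, `k8s_unit_status master` on the master (or `k8s_unit_status node` on a node) prints one `unit state` line per service.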

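Add-ons

All k8s add-ons run as containers.

| Component | Description |
|---|---|
| kube-dns | Provides DNS for the whole cluster |
| Ingress Controller | Provides external access to services |
| Heapster | Provides resource monitoring |
| Dashboard | Provides a GUI |
| Federation | Provides clusters that span availability zones |
| Fluentd-elasticsearch | Provides cluster log collection, storage, and querying |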

2. Installing the master node

Configure hosts-file (/etc/hosts) name resolution for all cluster machines on both the master node and the nodes.
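A minimal sketch of the hosts entries, assuming the hostnames and addresses used in this article's examples (k8s-master 10.0.0.110, k8s-node01 10.0.0.120, k8s-node2 10.0.0.130, k8s-registry 10.0.0.140). The mapping of k8s-node2 to 10.0.0.130 is inferred from the node list shown later, so adjust all of them to your machines. The function only appends lines that are not already present, so running it twice is harmless.

```shell
#!/usr/bin/env bash
# Sketch: append the cluster's host entries to a hosts file without
# duplicating lines that are already present.
# ASSUMPTION: hostname-to-IP mapping inferred from this article's examples.

CLUSTER_HOSTS=(
  "10.0.0.110 k8s-master"
  "10.0.0.120 k8s-node01"
  "10.0.0.130 k8s-node2"
  "10.0.0.140 k8s-registry"
)

# add_cluster_hosts [FILE]  (defaults to /etc/hosts)
add_cluster_hosts() {
  local file="${1:-/etc/hosts}" entry
  for entry in "${CLUSTER_HOSTS[@]}"; do
    # -x: match the whole line, -F: treat the entry as a fixed string
    grep -qxF "$entry" "$file" 2>/dev/null || echo "$entry" >> "$file"
  done
}
```

Run `add_cluster_hosts` as root on every machine in the cluster.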

step 1 Install the etcd database service

etcd is a key-value database with native support for clustering.

| yum install -y etcd |

[root@k8s-master ~]# yum install -y etcd.x86_64

Loaded plugins: fastestmirror

Determining fastest mirrors

......

Installed:

etcd.x86_64 0:3.3.11-2.el7.centos

Complete!

step 2 Configure etcd

|

#/etc/etcd/etcd.conf

ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"

ETCD_ADVERTISE_CLIENT_URLS="http://IP_ADDRESS:2379"  #clients access etcd over plain HTTP by default |
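The two settings can also be applied without opening an editor. This sketch assumes GNU sed (`sed -i`) and values that contain no `|` or `&` characters; `set_conf_var` and `configure_etcd` are hypothetical helper names. Commented-out lines are left alone; only an active `KEY=` line is replaced, otherwise the key is appended.

```shell
#!/usr/bin/env bash
# Sketch: apply the etcd.conf settings from step 2 with sed.
# The file keeps all of its other settings.

# set_conf_var FILE KEY VALUE: replace an active KEY=... line or append one.
set_conf_var() {
  local file="$1" key="$2" value="$3"
  if grep -q "^${key}=" "$file"; then
    sed -i "s|^${key}=.*|${key}=\"${value}\"|" "$file"
  else
    printf '%s="%s"\n' "$key" "$value" >> "$file"
  fi
}

# configure_etcd IP [FILE]: listen on all addresses, advertise the given IP.
configure_etcd() {
  local ip="$1" file="${2:-/etc/etcd/etcd.conf}"
  set_conf_var "$file" ETCD_LISTEN_CLIENT_URLS "http://0.0.0.0:2379"
  set_conf_var "$file" ETCD_ADVERTISE_CLIENT_URLS "http://${ip}:2379"
}
```

For example, `configure_etcd 10.0.0.110` on the master should produce the two URL lines shown in the output below.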

[root@k8s-master ~]# grep -Ev '^$|^#' /etc/etcd/etcd.conf

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"

ETCD_NAME="default"

ETCD_ADVERTISE_CLIENT_URLS="http://10.0.0.110:2379"

step 3 Start the etcd service

|

systemctl restart etcd

etcdctl -C http://IP_ADDRESS:2379 cluster-health  #check the health of the etcd database |
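Because etcd may need a moment to come up after a restart, a check like the one below can poll `etcdctl -C ... cluster-health` until the summary line reads `cluster is healthy`, as in the output below. The retry loop and the ETCDCTL override are assumptions of this sketch, not etcd features.

```shell
#!/usr/bin/env bash
# Sketch: poll etcd until `etcdctl -C ENDPOINT cluster-health` reports a
# healthy cluster. ETCDCTL may be overridden for testing (an assumption).

# is_cluster_healthy: read cluster-health output on stdin and succeed only
# if it contains the summary line "cluster is healthy".
is_cluster_healthy() {
  grep -qx 'cluster is healthy'
}

# wait_for_etcd ENDPOINT [RETRIES] [DELAY_SECONDS]
wait_for_etcd() {
  local endpoint="$1" retries="${2:-10}" delay="${3:-2}" i
  for ((i = 1; i <= retries; i++)); do
    if ${ETCDCTL:-etcdctl} -C "$endpoint" cluster-health 2>/dev/null | is_cluster_healthy; then
      echo "etcd at $endpoint is healthy"
      return 0
    fi
    sleep "$delay"
  done
  echo "etcd at $endpoint is still unhealthy after $retries attempts" >&2
  return 1
}
```

Typical use: `systemctl restart etcd && wait_for_etcd http://10.0.0.110:2379`.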

[root@k8s-master ~]# systemctl enable etcd.service

Created symlink from /etc/systemd/system/multi-user.target.wants/etcd.service to /usr/lib/systemd/system/etcd.service.

[root@k8s-master ~]# systemctl start etcd.service

[root@k8s-master ~]# etcdctl -C http://10.0.0.110:2379 cluster-health

member 8e9e05c52164694d is healthy: got healthy result from http://10.0.0.110:2379

cluster is healthy

step 4 Install kubernetes-master

The kubernetes-master package contains three services: API Server, Controller Manager, and Scheduler.

| yum install -y kubernetes-master.x86_64 |

[root@k8s-master ~]# yum install -y kubernetes-master.x86_64

Loaded plugins: fastestmirror

Loading mirror speeds from cached hostfile

......

Installed:

kubernetes-master.x86_64 0:1.5.2-0.7.git269f928.el7

Dependency Installed:

kubernetes-client.x86_64 0:1.5.2-0.7.git269f928.el7

Complete!

step 5 Configure the API Server

|

#/etc/kubernetes/apiserver

KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"  #address the API Server listens on

KUBE_API_PORT="--port=PORT"  #port the API Server listens on (default 8080)

KUBELET_PORT="--kubelet-port=PORT"  #port used to manage the kubelet service (default 10250)

KUBE_ETCD_SERVERS="--etcd-servers=http://IP_ADDRESS:2379"  #address and port of the etcd service

KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=IP_ADDRESS/PREFIX_LENGTH"  #range of virtual IPs (VIPs) used for Service load balancing

KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ResourceQuota" |
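As a sketch, the whole file can be rendered from the three values that differ between clusters, with the remaining lines copied from the example output below. The function writes to stdout, so redirecting it into /etc/kubernetes/apiserver replaces the existing file; keep a backup. `render_apiserver_conf` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch: print an /etc/kubernetes/apiserver file for the given values.
# Lines other than the three parameters are copied from this article.

# render_apiserver_conf ETCD_IP API_PORT SERVICE_CIDR
render_apiserver_conf() {
  if [ "$#" -ne 3 ]; then
    echo "usage: render_apiserver_conf ETCD_IP API_PORT SERVICE_CIDR" >&2
    return 1
  fi
  local etcd_ip="$1" api_port="$2" service_cidr="$3"
  # SERVICE_CIDR is the Service VIP range; keep it clear of the host
  # network and of the flannel network configured later.
  cat <<EOF
KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"
KUBE_API_PORT="--port=${api_port}"
KUBELET_PORT="--kubelet-port=10250"
KUBE_ETCD_SERVERS="--etcd-servers=http://${etcd_ip}:2379"
KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=${service_cidr}"
KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ResourceQuota"
KUBE_API_ARGS=""
EOF
}
```

With the values used in this article: `render_apiserver_conf 10.0.0.110 10000 10.100.0.0/16 > /etc/kubernetes/apiserver`.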

[root@k8s-master ~]# grep -Ev '^#|^$' /etc/kubernetes/apiserver

KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"

KUBE_API_PORT="--port=10000"

KUBELET_PORT="--kubelet-port=10250"

KUBE_ETCD_SERVERS="--etcd-servers=http://10.0.0.110:2379"

KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.100.0.0/16"

KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ResourceQuota"

KUBE_API_ARGS=""

step 6 Configure kubernetes

The Controller Manager and Scheduler services share this configuration file.

|

#/etc/kubernetes/config

KUBE_MASTER="--master=http://IP_ADDRESS:PORT"  #address of the API Server |

[root@k8s-master ~]# grep -Ev '^#|^$' /etc/kubernetes/config | tail -1

KUBE_MASTER="--master=http://10.0.0.110:10000"

step 7 Start the API Server, Controller Manager, and Scheduler services

The API Server must be started first.

|

systemctl restart kube-apiserver.service

systemctl restart kube-controller-manager.service

systemctl restart kube-scheduler.service |
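The ordering requirement can be enforced in a script that stops at the first failure, so the Controller Manager and Scheduler are never started without a running API Server. This is a sketch: etcd is assumed to be running already (step 3), and the SYSTEMCTL override exists only so the function can be dry-run.

```shell
#!/usr/bin/env bash
# Sketch: enable and start the master services in the required order,
# aborting as soon as one of them fails to reach the active state.
# SYSTEMCTL may be overridden for dry runs/testing (an assumption).

MASTER_UNITS=(kube-apiserver.service kube-controller-manager.service kube-scheduler.service)

start_master_services() {
  local unit
  for unit in "${MASTER_UNITS[@]}"; do
    ${SYSTEMCTL:-systemctl} enable "$unit" || return 1
    ${SYSTEMCTL:-systemctl} restart "$unit" || return 1
    # Do not continue unless the unit is actually running.
    ${SYSTEMCTL:-systemctl} is-active --quiet "$unit" || {
      echo "$unit failed to start" >&2
      return 1
    }
  done
}
```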

[root@k8s-master ~]# systemctl enable kube-apiserver.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-apiserver.service to /usr/lib/systemd/system/kube-apiserver.service.

[root@k8s-master ~]# systemctl start kube-apiserver.service

[root@k8s-master ~]# systemctl enable kube-controller-manager.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-controller-manager.service to /usr/lib/systemd/system/kube-controller-manager.service.

[root@k8s-master ~]# systemctl start kube-controller-manager.service

[root@k8s-master ~]# systemctl enable kube-scheduler.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-scheduler.service to /usr/lib/systemd/system/kube-scheduler.service.

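[root@k8s-master ~]# systemctl start kube-scheduler.service

step 8 Test the master node components

| kubectl -s http://IP_ADDRESS:PORT get componentstatus  #-s is needed because the API Server is not listening on the default port 8080 |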
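When the check has to be repeated (for example after the restarts in the flannel section), the status column can be tested automatically. This sketch reads `kubectl ... get cs` output on stdin and assumes STATUS is the second whitespace-separated column, as in the output below; `all_components_healthy` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch: succeed only if every component row in `kubectl get cs`
# output (read on stdin) has STATUS "Healthy".

all_components_healthy() {
  awk '
    NR == 1 { next }    # skip the NAME STATUS MESSAGE ERROR header
    NF == 0 { next }    # ignore blank lines
    {
      seen++
      if ($2 != "Healthy") { print "unhealthy: " $1; bad++ }
    }
    END { if (seen == 0 || bad > 0) exit 1; exit 0 }
  '
}
```

Usage: `kubectl -s http://10.0.0.110:10000 get cs | all_components_healthy && echo "master OK"`.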

[root@k8s-master ~]# kubectl -s http://10.0.0.110:10000 get componentstatus

NAME STATUS MESSAGE ERROR

etcd-0 Healthy {"health":"true"}

scheduler Healthy ok

controller-manager Healthy ok

[root@k8s-master ~]# kubectl -s http://10.0.0.110:10000 get cs

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health":"true"}

3. Installing the nodes

step 1 Install kubernetes-node

Installing kubernetes-node automatically installs Docker as a dependency.

| yum install -y kubernetes-node.x86_64 |

[root@k8s-node01 ~]# yum install -y kubernetes-node.x86_64

Loaded plugins: fastestmirror

Determining fastest mirrors

......

Complete!

step 2 Configure kubernetes

The Kube-Proxy service uses this configuration file.

|

#/etc/kubernetes/config

KUBE_MASTER="--master=http://IP_ADDRESS:PORT"  #address and port of the API Server |

[root@k8s-node01 ~]# tail -1 /etc/kubernetes/config

KUBE_MASTER="--master=http://10.0.0.110:10000"

step 3 Configure the kubelet

|

#/etc/kubernetes/kubelet

KUBELET_ADDRESS="--address=0.0.0.0"

KUBELET_PORT="--port=PORT"  #kubelet service port (default 10250)

KUBELET_HOSTNAME="--hostname-override=NAME"  #node name; must be unique (using the node's IP address is recommended)

KUBELET_API_SERVER="--api-servers=http://IP_ADDRESS:PORT"  #address and port of the API Server |
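Since each node needs a unique name, a per-node render function keeps every other line identical across nodes. A sketch, with the pod-infrastructure image line copied from the example output below; `render_kubelet_conf` is a hypothetical name, and the node IP is passed in explicitly rather than detected.

```shell
#!/usr/bin/env bash
# Sketch: print /etc/kubernetes/kubelet for one node, using the node's
# own IP address as its unique node name.

# render_kubelet_conf NODE_IP API_SERVER_URL
render_kubelet_conf() {
  if [ "$#" -ne 2 ]; then
    echo "usage: render_kubelet_conf NODE_IP API_SERVER_URL" >&2
    return 1
  fi
  local node_ip="$1" api_server="$2"
  cat <<EOF
KUBELET_ADDRESS="--address=0.0.0.0"
KUBELET_PORT="--port=10250"
KUBELET_HOSTNAME="--hostname-override=${node_ip}"
KUBELET_API_SERVER="--api-servers=${api_server}"
KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"
KUBELET_ARGS=""
EOF
}
```

On the first node, for example: `render_kubelet_conf 10.0.0.120 http://10.0.0.110:10000 > /etc/kubernetes/kubelet`.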

[root@k8s-node01 ~]# grep -Ev '^$|^#' /etc/kubernetes/kubelet

KUBELET_ADDRESS="--address=0.0.0.0"

KUBELET_PORT="--port=10250"

KUBELET_HOSTNAME="--hostname-override=10.0.0.120"

KUBELET_API_SERVER="--api-servers=http://10.0.0.110:10000"

KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"

KUBELET_ARGS=""

step 4 Start the Kubelet and Kube-Proxy services

Starting the kubelet service also starts Docker automatically.

|

systemctl start kubelet.service

systemctl start kube-proxy.service |

[root@k8s-node01 ~]# systemctl enable kubelet.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

[root@k8s-node01 ~]# systemctl start kubelet.service

[root@k8s-node01 ~]# systemctl status docker

● docker.service - Docker Application Container Engine

Loaded: loaded (/usr/lib/systemd/system/docker.service; disabled; vendor preset: disabled)

Active: active (running) since Wed 2020-07-15 13:15:21 CST; 20s ago

......

[root@k8s-node01 ~]# systemctl enable docker

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

[root@k8s-node01 ~]# systemctl enable kube-proxy.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-proxy.service to /usr/lib/systemd/system/kube-proxy.service.

[root@k8s-node01 ~]# systemctl start kube-proxy.service

step 5 Test the nodes

| kubectl -s http://IP_ADDRESS:PORT get nodes  #run on the master |
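To check registration without reading the table by eye, the sketch below takes the expected node names (here the IP addresses set with --hostname-override) and fails if any of them is missing or not Ready. It assumes NAME and STATUS are the first two columns, as in the output below; `nodes_ready` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch: check `kubectl -s http://IP:PORT get nodes` output (stdin)
# for the expected node names given as arguments.

nodes_ready() {
  if [ "$#" -eq 0 ]; then
    echo "usage: nodes_ready NODE_NAME..." >&2
    return 1
  fi
  awk -v expected="$*" '
    BEGIN { n = split(expected, want, " ") }
    NR > 1 && NF >= 2 { status[$1] = $2 }   # skip the header; NAME -> STATUS
    END {
      rc = 0
      for (i = 1; i <= n; i++) {
        name = want[i]
        if (!(name in status)) {
          print "node " name " has not registered"
          rc = 1
        } else if (status[name] != "Ready") {
          print "node " name " is " status[name]
          rc = 1
        }
      }
      exit rc
    }
  '
}
```

Usage: `kubectl -s http://10.0.0.110:10000 get nodes | nodes_ready 10.0.0.120 10.0.0.130`.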

[root@k8s-master ~]# kubectl -s http://10.0.0.110:10000 get node

NAME STATUS AGE

10.0.0.120 Ready 3m

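10.0.0.130 Ready 1m

4. Configuring the k8s cluster network (flannel)

Every machine in the k8s cluster, both the master node and the worker nodes, needs the flannel network.

| Once flannel starts, it automatically generates a Docker configuration file; when the Docker service is restarted, Docker loads that file and uses the flannel network. |

step 1 Install the flannel network

| yum install -y flannel |

The master node also gets flannel so that, when working with k8s resources later, it can ping container IP addresses.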

[root@k8s-master ~]# yum install -y flannel

Loaded plugins: fastestmirror

Loading mirror speeds from cached hostfile

......

Installed:

flannel.x86_64 0:0.7.1-4.el7

Complete!

[root@k8s-node01 ~]# yum install -y flannel

Loaded plugins: fastestmirror, product-id, search-disabled-repos, subscription-manager

This system is not registered with an entitlement server. You can use subscription-manager to register.

......

Installed:

flannel.x86_64 0:0.7.1-4.el7

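Complete!

step 2 Configure flannel

If the etcd address is wrong, flannel will fail to start.

|

#/etc/sysconfig/flanneld

FLANNEL_ETCD_ENDPOINTS="http://IP_ADDRESS:2379"  #address and port of the etcd database service

FLANNEL_ETCD_PREFIX="PREFIX"  #key prefix under which flannel stores its data in etcd |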

[root@k8s-node01 ~]# grep -Ev '^$|^#' /etc/sysconfig/flanneld

FLANNEL_ETCD_ENDPOINTS="http://10.0.0.110:2379"

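FLANNEL_ETCD_PREFIX="/aspenhan.com/network"

step 3 Set the network address range

The key must be the FLANNEL_ETCD_PREFIX from step 2 followed by /config.

|

#master node

etcdctl mk /PREFIX/config '{ "Network": "172.18.0.0/16" }' |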
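Since flanneld looks for its network configuration under `FLANNEL_ETCD_PREFIX` + `/config`, a typo in either place leaves the nodes without a network. This sketch builds the etcdctl command from /etc/sysconfig/flanneld so the two cannot drift apart; it prints the command for review instead of running it. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: derive the etcd key for flannel's network config from the
# FLANNEL_ETCD_PREFIX setting and print the matching `etcdctl mk` command.

# flannel_prefix [FILE]: print FLANNEL_ETCD_PREFIX without quotes
flannel_prefix() {
  local file="${1:-/etc/sysconfig/flanneld}"
  sed -n -E 's/^FLANNEL_ETCD_PREFIX="?([^"]*)"?$/\1/p' "$file" | tail -n 1
}

# flannel_network_cmd NETWORK [FILE]: print the etcdctl command to run
flannel_network_cmd() {
  local network="$1" prefix
  [ -n "$network" ] || { echo "usage: flannel_network_cmd NETWORK [FILE]" >&2; return 1; }
  prefix="$(flannel_prefix "$2")"
  [ -n "$prefix" ] || { echo "FLANNEL_ETCD_PREFIX not found" >&2; return 1; }
  printf "etcdctl mk %s/config '{\"Network\":\"%s\"}'\n" "${prefix%/}" "$network"
}
```

With the prefix used below, `flannel_network_cmd 192.168.0.0/16` prints the same `etcdctl mk` command that the master runs in the output that follows.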

[root@k8s-master ~]# etcdctl mk /aspenhan.com/network/config '{"Network":"192.168.0.0/16"}'

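{"Network":"192.168.0.0/16"}

step 4 Modify the Docker startup script

| On the nodes, starting k8s also starts Docker, and Docker 1.13 and later set the host's iptables FORWARD chain policy to DROP when the daemon starts, which prevents containers on different hosts from communicating. |

|

#/usr/lib/systemd/system/docker.service (in the [Service] section)

ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT |

| systemctl daemon-reload |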
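An alternative to editing the packaged unit file (not the method used in this article): a systemd drop-in under /etc/systemd/system/docker.service.d/ survives docker package upgrades, and an `ExecStartPost=` line in a drop-in is added to the unit's existing `ExecStartPost=` list. The file name forward-accept.conf is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: install the FORWARD-policy fix as a systemd drop-in instead of
# editing /usr/lib/systemd/system/docker.service.

# write_forward_dropin [DIR]  (defaults to /etc/systemd/system/docker.service.d)
write_forward_dropin() {
  local dir="${1:-/etc/systemd/system/docker.service.d}"
  mkdir -p "$dir"
  # forward-accept.conf is a hypothetical file name; any *.conf works
  cat > "$dir/forward-accept.conf" <<'EOF'
[Service]
ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT
EOF
}
```

Then apply it with `write_forward_dropin && systemctl daemon-reload && systemctl restart docker`.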

# Default state

[root@k8s-master ~]# iptables -L -n | grep forward -i

Chain FORWARD (policy ACCEPT)

[root@k8s-node01 ~]# iptables -L -n | grep -i forward

Chain FORWARD (policy DROP)

[root@k8s-node01 ~]# systemctl status docker | head -2

● docker.service - Docker Application Container Engine

Loaded: loaded (/usr/lib/systemd/system/docker.service; enabled; vendor preset: disabled)

[root@k8s-node01 ~]# grep Exec /usr/lib/systemd/system/docker.service

ExecStart=/usr/bin/dockerd-current \

ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT

ExecReload=/bin/kill -s HUP $MAINPID

[root@k8s-node01 ~]# systemctl daemon-reload

step 5 Restart all services

|

#master node

systemctl restart flanneld.service

systemctl restart kube-apiserver.service

systemctl restart kube-controller-manager.service

systemctl restart kube-scheduler.service

#nodes

systemctl restart flanneld.service

systemctl restart docker

systemctl restart kubelet.service

systemctl restart kube-proxy.service |
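The order above matters on the nodes: flanneld has to be restarted before docker so that docker reads the freshly written /run/flannel/docker options. A sketch that restarts one role's services in that order; the function names and the SYSTEMCTL override are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: restart the services of one role in the order listed above.
# SYSTEMCTL may be overridden for dry runs/testing (an assumption).

# restart_order ROLE: print the units for "master" or "node", in order
restart_order() {
  case "$1" in
    master) echo flanneld kube-apiserver kube-controller-manager kube-scheduler ;;
    node)   echo flanneld docker kubelet kube-proxy ;;
    *)      echo "usage: restart_order master|node" >&2; return 1 ;;
  esac
}

# restart_role ROLE: restart each unit, stopping at the first failure
restart_role() {
  local unit units
  units="$(restart_order "$1")" || return 1
  for unit in $units; do   # word splitting of the space-separated list is intended
    echo "restarting $unit"
    ${SYSTEMCTL:-systemctl} restart "$unit.service" || return 1
  done
}
```

Run `restart_role master` on the master and `restart_role node` on each node.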

[root@k8s-master ~]# systemctl restart kube-apiserver.service

[root@k8s-master ~]# systemctl restart kube-controller-manager.service

[root@k8s-master ~]# systemctl restart kube-scheduler.service

[root@k8s-node01 ~]# systemctl enable flanneld.service

Created symlink from /etc/systemd/system/multi-user.target.wants/flanneld.service to /usr/lib/systemd/system/flanneld.service.

Created symlink from /etc/systemd/system/docker.service.wants/flanneld.service to /usr/lib/systemd/system/flanneld.service.

[root@k8s-node01 ~]# systemctl restart flanneld.service

[root@k8s-node01 ~]# systemctl restart docker

[root@k8s-node01 ~]# systemctl restart kubelet.service

[root@k8s-node01 ~]# systemctl restart kube-proxy.service

[root@k8s-node01 ~]# systemctl status docker.service | sed -n 3,4p

Drop-In: /usr/lib/systemd/system/docker.service.d

└─flannel.conf

[root@k8s-node01 ~]# ls /usr/lib/systemd/system/docker.service.d/flannel.conf

/usr/lib/systemd/system/docker.service.d/flannel.conf

[root@k8s-node01 ~]# cat /usr/lib/systemd/system/docker.service.d/flannel.conf

[Service]

EnvironmentFile=-/run/flannel/docker

[root@k8s-node2 ~]# cat /run/flannel/docker

DOCKER_OPT_BIP="--bip=192.168.73.1/24"

DOCKER_OPT_IPMASQ="--ip-masq=true"

DOCKER_OPT_MTU="--mtu=1472"

DOCKER_NETWORK_OPTIONS=" --bip=192.168.73.1/24 --ip-masq=true --mtu=1472"

[root@k8s-node01 ~]# iptables -L -n | grep forward -i

Chain FORWARD (policy ACCEPT)

5. Configuring a private registry

step 1 Install Docker

| yum install -y docker |

[root@k8s-registry ~]# yum install -y docker

Loaded plugins: fastestmirror

Determining fastest mirrors

......

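Complete!

step 2 Configure registry trust

Every node in the k8s cluster must be configured to trust the registry. In the file below, "registry-mirrors" sets a mirror that speeds up pulls from the official registry, and "insecure-registries" lists the private registry that Docker may use without a trusted TLS certificate (here, over plain HTTP). JSON does not allow comments, so keep them out of the file itself.

|

#/etc/docker/daemon.json

{

"registry-mirrors" : ["https://registry.docker-cn.com"],

"insecure-registries" : ["REGISTRY_ADDRESS:PORT"]

} |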
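Because a comment or a stray comma makes daemon.json invalid and can keep Docker from starting, generating the file from a script avoids hand-editing mistakes. This sketch overwrites the target file, and the optional validation step assumes python3 is installed; `write_daemon_json` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch: write /etc/docker/daemon.json for a given private registry.
# The heredoc contains no comments because JSON does not allow them.

# write_daemon_json REGISTRY_HOST:PORT [FILE]
write_daemon_json() {
  local registry="$1" file="${2:-/etc/docker/daemon.json}"
  [ -n "$registry" ] || { echo "usage: write_daemon_json HOST:PORT [FILE]" >&2; return 1; }
  cat > "$file" <<EOF
{
  "registry-mirrors": ["https://registry.docker-cn.com"],
  "insecure-registries": ["${registry}"]
}
EOF
  # Optional syntax check, only if python3 is available (an assumption).
  if command -v python3 >/dev/null 2>&1; then
    python3 -m json.tool "$file" >/dev/null || { echo "invalid JSON in $file" >&2; return 1; }
  fi
}
```

On each node: `write_daemon_json 10.0.0.140:5000 && systemctl restart docker`.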

[root@k8s-node01 ~]# cat /etc/docker/daemon.json

{

"registry-mirrors" : ["https://registry.docker-cn.com"],

"insecure-registries" : ["10.0.0.140:5000"]

}

[root@k8s-node01 ~]# systemctl restart docker

step 3 Modify the Docker startup script

|

#/usr/lib/systemd/system/docker.service (in the [Service] section)

ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT |

| systemctl daemon-reload |

[root@k8s-registry ~]# grep 'ExecStart' /usr/lib/systemd/system/docker.service

ExecStart=/usr/bin/dockerd-current \

ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT

[root@k8s-registry ~]# systemctl daemon-reload

step 4 Start Docker

| systemctl restart docker |

[root@k8s-registry ~]# systemctl enable docker

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

[root@k8s-registry ~]# systemctl restart docker

step 5 Start the registry

| docker run -d -p HOST_PORT:CONTAINER_PORT --name CONTAINER_NAME -v HOST_DIR:CONTAINER_DIR IMAGE:TAG  #start the registry |

[root@k8s-registry ~]# docker run -d -p 5000:5000 --restart=always --name registry -v /opt/docker/registry:/var/lib/registry registry

Unable to find image 'registry:latest' locally

Trying to pull repository docker.io/library/registry ...

......

254f479a25d51b681a964678cb563f22400e09ebe05a786c2c86127600c12f21

[root@k8s-registry ~]# docker ps -al

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

254f479a25d5 registry "/entrypoint.sh /e..." 10 seconds ago Up 10 seconds 0.0.0.0:5000->5000/tcp registry

Test the registry

|

docker load -i IMAGE_ARCHIVE

docker tag IMAGE:TAG REGISTRY_ADDRESS:PORT/IMAGE:TAG

docker push REGISTRY_ADDRESS:PORT/IMAGE:TAG |
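The tag-and-push steps can be wrapped so the registry prefix is typed only once. In this sketch, `registry_ref` adds `:latest` when no tag is given and assumes the image name has no registry host:port prefix of its own; DOCKER can be overridden for a dry run. Both function names are hypothetical, and the `docker load` step is left as a separate command.

```shell
#!/usr/bin/env bash
# Sketch: retag a local image for the private registry and push it.
# DOCKER may be overridden for dry runs/testing (an assumption).

# registry_ref REGISTRY IMAGE[:TAG]: print the fully qualified reference.
# Simple check: assumes IMAGE carries no registry host:port prefix.
registry_ref() {
  local registry="$1" image="$2"
  case "$image" in
    *:*) ;;                      # tag already given
    *) image="$image:latest" ;;
  esac
  echo "$registry/$image"
}

# push_to_registry REGISTRY IMAGE[:TAG]
push_to_registry() {
  local registry="$1" image="$2" ref
  ref="$(registry_ref "$registry" "$image")"
  ${DOCKER:-docker} tag "$image" "$ref" || return 1
  ${DOCKER:-docker} push "$ref"
}
```

After `docker load -i docker_alpine.tar.gz`, running `push_to_registry 10.0.0.140:5000 alpine:latest` issues the same tag and push commands as the transcript below.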

[root@k8s-node01 ~]# ls

anaconda-ks.cfg docker_alpine.tar.gz

[root@k8s-node01 ~]# docker load -i docker_alpine.tar.gz

1bfeebd65323: Loading layer 5.844 MB/5.844 MB

Loaded image: alpine:latest

[root@k8s-node01 ~]# docker tag alpine:latest 10.0.0.140:5000/alpine:latest

[root@k8s-node01 ~]# docker push 10.0.0.140:5000/alpine

The push refers to a repository [10.0.0.140:5000/alpine]

1bfeebd65323: Pushed

latest: digest: sha256:57334c50959f26ce1ee025d08f136c2292c128f84e7b229d1b0da5dac89e9866 size: 528

[root@k8s-registry ~]# ls /opt/docker/registry/docker/registry/v2/repositories/

alpine

[root@k8s-node2 ~]# docker pull 10.0.0.140:5000/alpine:latest

Trying to pull repository 10.0.0.140:5000/alpine ...

latest: Pulling from 10.0.0.140:5000/alpine

050382585609: Pull complete

Digest: sha256:57334c50959f26ce1ee025d08f136c2292c128f84e7b229d1b0da5dac89e9866

Status: Downloaded newer image for 10.0.0.140:5000/alpine:latest

[root@k8s-node2 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

10.0.0.140:5000/alpine latest b7b28af77ffe 12 months ago 5.58 MB