Any Kubernetes (k8s) resource can be defined in a YAML file. Within a namespace, resources of the same type must have unique names.

Main components of a YAML file

apiVersion: v1    # API version
kind: TYPE        # resource type
metadata:         # resource metadata
spec:             # detailed resource configuration

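Basic operations on YAML files

kubectl create -f FILE.yml         # create the resource
kubectl delete -f FILE.yml         # delete the resource
kubectl delete TYPE NAME           # delete the resource
kubectl get TYPE                   # list resources
kubectl describe TYPE NAME         # show resource details
kubectl edit TYPE NAME             # modify resource attributes
kubectl scale TYPE NAME --FLAG     # modify resource attributes (for example --replicas=n)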

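I. Pod resources

In Kubernetes, the Pod is the smallest resource unit. Every Pod consists of at least two containers: an infrastructure container and an application container.

1. Creating a Pod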

apiVersion: v1
kind: Pod
metadata:
  name: NAME                 # resource name
  labels:
    app: LABEL
spec:
  containers:
    - name: NAME
      image: REGISTRY:PORT/IMAGE:TAG      # container image
      command: ["COMMAND", "ARGUMENT"]    # command the container starts with
      ports:
        - containerPort: PORT

[root@k8s-registry ~]# docker load -i docker_nginx1.13.tar.gz

d626a8ad97a1: Loading layer 58.46 MB/58.46 MB

82b81d779f83: Loading layer 54.21 MB/54.21 MB

7ab428981537: Loading layer 3.584 kB/3.584 kB

Loaded image: docker.io/nginx:1.13

[root@k8s-registry ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

docker.io/registry latest 2d4f4b5309b1 4 months ago 26.2 MB

docker.io/nginx 1.13 ae513a47849c 2 years ago 109 MB

[root@k8s-registry ~]# docker tag docker.io/nginx:1.13 10.0.0.140:5000/nginx:1.13

[root@k8s-registry ~]# docker push 10.0.0.140:5000/nginx:1.13

The push refers to a repository [10.0.0.140:5000/nginx]

7ab428981537: Pushed

82b81d779f83: Pushed

d626a8ad97a1: Pushed

1.13: digest: sha256:e4f0474a75c510f40b37b6b7dc2516241ffa8bde5a442bde3d372c9519c84d90 size: 948

[root@k8s-master ~/k8s_yaml/Pod]# cat k8s_pod_nginx.yml
apiVersion: v1
kind: Pod
metadata:
  name: nginx
  labels:
    app: web
spec:
  containers:
    - name: nginx
      image: 10.0.0.140:5000/nginx:1.13
      ports:
        - containerPort: 80

[root@k8s-master ~]# mkdir -p k8s_yaml/Pod

[root@k8s-master ~]# cd k8s_yaml/Pod/

[root@k8s-master ~/k8s_yaml/Pod]# kubectl -s http://10.0.0.110:10000 create -f k8s_pod_nginx.yml

pod "nginx" created

[root@k8s-master ~/k8s_yaml/Pod]# kubectl -s http://10.0.0.110:10000 get pod

NAME READY STATUS RESTARTS AGE

nginx 0/1 ContainerCreating 0 1m

2. Getting the Pod running

kubectl describe pod NAME   # show detailed information about the Pod

[root@k8s-master ~/k8s_yaml/Pod]# kubectl -s http://10.0.0.110:10000 describe pod nginx

Name: nginx

Namespace: default

Node: 10.0.0.130/10.0.0.130

Start Time: Wed, 11 Nov 2020 14:58:27 +0800

......

FirstSeen LastSeen Count From SubObjectPath Type Reason Message

--------- -------- ----- ---- ------------- -------- ------ -------

1m 1m 1 {default-scheduler } Normal Scheduled Successfully assigned nginx to 10.0.0.130

1m 20s 4 {kubelet 10.0.0.130} Warning FailedSync Error syncing pod, skipping: failed to "StartContainer" for "POD" with ImagePullBackOff: "Back-off pulling image \"registry.access.redhat.com/rhel7/pod-infrastructure:latest\""

1m 7s 4 {kubelet 10.0.0.130} Warning FailedSync Error syncing pod, skipping: failed to "StartContainer" for "POD" with ErrImagePull: "image pull failed for registry.access.redhat.com/rhel7/pod-infrastructure:latest, this may be because there are no credentials on this request. details: (open /etc/docker/certs.d/registry.access.redhat.com/redhat-ca.crt: no such file or directory)"

Every worker node needs the pod-infrastructure image, and the kubelet service must be restarted afterwards. Here the image is pushed to the private registry so that all nodes can pull it.

docker load -i pod-infrastructure-latest.tar.gz
docker tag docker.io/tianyebj/pod-infrastructure:latest REGISTRY:PORT/pod-infrastructure:latest
docker push REGISTRY:PORT/pod-infrastructure:latest

[root@k8s-registry ~]# docker search pod-infrastructure

INDEX NAME DESCRIPTION STARS OFFICIAL AUTOMATED

docker.io docker.io/tianyebj/pod-infrastructure registry.access.redhat.com/rhel7/pod-infra... 2

docker.io docker.io/w564791/pod-infrastructure latest 1

docker.io docker.io/xiaotech/pod-infrastructure registry.access.redhat.com/rhel7/pod-infra... 1 [OK]

docker.io docker.io/092800/pod-infrastructure 0

......

[root@k8s-registry ~]# docker pull docker.io/tianyebj/pod-infrastructure

Using default tag: latest

Trying to pull repository docker.io/tianyebj/pod-infrastructure ...

latest: Pulling from docker.io/tianyebj/pod-infrastructure

7bd78273b666: Pull complete

c196631bd9ac: Pull complete

3c917e6a9e1a: Pull complete

Digest: sha256:73cc48728e707b74f99d17b4e802d836e22d373aee901fdcaa781b056cdabf5c

Status: Downloaded newer image for docker.io/tianyebj/pod-infrastructure:latest

[root@k8s-registry ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

docker.io/registry latest 2d4f4b5309b1 4 months ago 26.2 MB

docker.io/nginx 1.13 ae513a47849c 2 years ago 109 MB

docker.io/tianyebj/pod-infrastructure latest 34d3450d733b 3 years ago 205 MB

[root@k8s-registry ~]# docker tag docker.io/tianyebj/pod-infrastructure:latest 10.0.0.140:5000/pod-infrastructure:latest

[root@k8s-registry ~]# docker push 10.0.0.140:5000/pod-infrastructure:latest

The push refers to a repository [10.0.0.140:5000/pod-infrastructure]

ba3d4cbbb261: Pushed

0a081b45cb84: Pushed

df9d2808b9a9: Pushed

latest: digest: sha256:a378b2d7a92231ffb07fdd9dbd2a52c3c439f19c8d675a0d8d9ab74950b15a1b size: 948

# /etc/kubernetes/kubelet
KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=PRIVATE_REGISTRY:PORT/pod-infrastructure:latest"

[root@k8s-node01 ~]# sed -n 16,17p /etc/kubernetes/kubelet

# pod infrastructure container

KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=10.0.0.140:5000/pod-infrastructure:latest"

[root@k8s-node2 ~]# sed -n 16,17p /etc/kubernetes/kubelet
# pod infrastructure container
KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=10.0.0.140:5000/pod-infrastructure:latest"

systemctl restart kubelet.service

[root@k8s-node01 ~]# systemctl restart kubelet
[root@k8s-node2 ~]# systemctl restart kubelet.service

3. Viewing the Pod

kubectl get pod              # list Pods
kubectl describe pod NAME    # show Pod details

[root@k8s-master ~/k8s_yaml/Pod]# kubectl -s http://10.0.0.110:10000 get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx 1/1 Running 1 1h 192.168.23.2 10.0.0.130

[root@k8s-master ~/k8s_yaml/Pod]# ping 192.168.23.2 -c 1

PING 192.168.23.2 (192.168.23.2) 56(84) bytes of data.

64 bytes from 192.168.23.2: icmp_seq=1 ttl=61 time=1.10 ms

--- 192.168.23.2 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 1.104/1.104/1.104/0.000 ms

[root@k8s-master ~/k8s_yaml/Pod]# kubectl -s http://10.0.0.110:10000 describe pod nginx

Name: nginx

Namespace: default

Node: 10.0.0.130/10.0.0.130

......

FirstSeen LastSeen Count From SubObjectPath Type Reason Message

--------- -------- ----- ---- ------------- -------- ------ -------

23m 23m 1 {default-scheduler } Normal Scheduled Successfully assigned nginx to 10.0.0.130

23m 17m 6 {kubelet 10.0.0.130} Warning FailedSync Error syncing pod, skipping: failed to "StartContainer" for "POD" with ErrImagePull: "image pull failed for registry.access.redhat.com/rhel7/pod-infrastructure:latest, this may be because there are no credentials on this request. details: (open /etc/docker/certs.d/registry.access.redhat.com/redhat-ca.crt: no such file or directory)"

22m 12m 45 {kubelet 10.0.0.130} Warning FailedSync Error syncing pod, skipping: failed to "StartContainer" for "POD" with ImagePullBackOff: "Back-off pulling image \"registry.access.redhat.com/rhel7/pod-infrastructure:latest\""

......

7m 7m 1 {kubelet 10.0.0.130} spec.containers{nginx} Normal Started Started container with docker id 11bb485dbbb9

The application container shares its network with the infrastructure container.
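The shared network can be read from the `NetworkMode` field that `docker inspect` reports for the application container. The following is a minimal sketch only: the function name `network_owner` is hypothetical, and the sample line is copied from the `docker inspect` output in the session that follows, so the snippet runs without Docker.

```shell
#!/usr/bin/env bash
# network_owner (hypothetical helper): reads `docker inspect` output on stdin and
# prints the ID of the container whose network is being reused, if there is one.
network_owner() {
  sed -n 's/.*"NetworkMode": "container:\([0-9a-f]*\)".*/\1/p'
}

# Sample line taken from the inspect output of the nginx container.
sample='"NetworkMode": "container:dab8d82743ce47728242eae32fa3bb303526587732bf2e76612d9c1b77e03839",'

owner=$(printf '%s\n' "$sample" | network_owner)
# `docker ps` shows only the first 12 characters of a container ID.
printf '%s\n' "$owner" | cut -c1-12
```

The printed prefix can be compared with the CONTAINER ID column of `docker ps -a`; here it corresponds to the pod-infrastructure container. A container whose `NetworkMode` is `default` produces no output.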

[root@k8s-node2 ~]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

bc811491af33 10.0.0.140:5000/nginx:1.13 "nginx -g 'daemon ..." 41 minutes ago Up 41 minutes k8s_nginx.2a6903c3_nginx_default_4cae0398-23eb-11eb-85ee-000c29870b5c_5ac5ce48

dab8d82743ce 10.0.0.140:5000/pod-infrastructure:latest "/pod" 41 minutes ago Up 41 minutes k8s_POD.ae6001e3_nginx_default_4cae0398-23eb-11eb-85ee-000c29870b5c_8a6cadc0

[root@k8s-node2 ~]# docker inspect bc811491af33 |grep -i network

"NetworkMode": "container:dab8d82743ce47728242eae32fa3bb303526587732bf2e76612d9c1b77e03839",

"NetworkSettings": {

"Networks": {}

[root@k8s-node2 ~]# docker inspect dab8d82743ce | grep -i network

"NetworkMode": "default",

"NetworkSettings": {

"Networks": {

"NetworkID": "59a051c5f58dd15f595e90c0bb5cf239c0d9d49d499a877bd51a7ffe4edfa87a",

[root@k8s-node2 ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

59a051c5f58d bridge bridge local

203eb2b4037e host host local

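4d59e4f80e19 none null local

II. ReplicationController resources

A ReplicationController (rc) ensures that the specified number of Pods is always running. An rc is associated with its Pods through a label selector.

1. Creating a ReplicationController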

apiVersion: v1
kind: ReplicationController
metadata:
  name: NAME                 # resource name
spec:
  replicas: N                # number of replicas
  selector:                  # label selector
    app: LABEL
  template:                  # Pod template
    metadata:
      labels:
        app: LABEL
    spec:
      containers:
        - name: NAME
          image: REGISTRY:PORT/IMAGE:TAG
          command: ["COMMAND", "ARGUMENT"]
          ports:
            - containerPort: PORT

[root@k8s-master ~]# mkdir k8s_yaml/rc

[root@k8s-master ~]# cd k8s_yaml/rc

[root@k8s-master ~/k8s_yaml/rc]# cat k8s_rc_nginx.yml
apiVersion: v1
kind: ReplicationController
metadata:
  name: nginx
spec:
  replicas: 7
  selector:
    app: aspen
  template:
    metadata:
      labels:
        app: aspen
    spec:
      containers:
        - name: nginx
          image: 10.0.0.140:5000/nginx:1.13
          ports:
            - containerPort: 80

[root@k8s-master ~/k8s_yaml/rc]# kubectl -s http://10.0.0.110:10000 create -f k8s_rc_nginx.yml

replicationcontroller "nginx" created

[root@k8s-master ~/k8s_yaml/rc]# kubectl -s http://10.0.0.110:10000 get rc

NAME DESIRED CURRENT READY AGE

nginx 7 7 7 6m

[root@k8s-master ~/k8s_yaml/rc]# kubectl -s http://10.0.0.110:10000 get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx 1/1 Running 3 20h 192.168.23.2 10.0.0.130

nginx-00j6n 1/1 Running 0 6m 192.168.23.3 10.0.0.130

nginx-814d4 1/1 Running 0 6m 192.168.23.5 10.0.0.130

nginx-838tl 1/1 Running 0 6m 192.168.23.4 10.0.0.130

nginx-gldjz 1/1 Running 0 6m 192.168.3.2 10.0.0.120

nginx-lhx68 1/1 Running 0 6m 192.168.3.5 10.0.0.120

nginx-s2f9j 1/1 Running 0 6m 192.168.3.3 10.0.0.120

nginx-x1w1f 1/1 Running 0 6m 192.168.3.4 10.0.0.120

2. Testing the ReplicationController

kubectl delete pod NAME    # delete the given Pod
kubectl get pod -o wide

[root@k8s-master ~/k8s_yaml/rc]# kubectl -s http://10.0.0.110:10000 delete pod nginx-00j6n

pod "nginx-00j6n" deleted

[root@k8s-master ~/k8s_yaml/rc]# kubectl -s http://10.0.0.110:10000 get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx 1/1 Running 3 20h 192.168.23.2 10.0.0.130

nginx-814d4 1/1 Running 0 9m 192.168.23.5 10.0.0.130

nginx-838tl 1/1 Running 0 9m 192.168.23.4 10.0.0.130

nginx-gldjz 1/1 Running 0 9m 192.168.3.2 10.0.0.120

nginx-lhx68 1/1 Running 0 9m 192.168.3.5 10.0.0.120

nginx-nq936 1/1 Running 0 17s 192.168.23.6 10.0.0.130

nginx-s2f9j 1/1 Running 0 9m 192.168.3.3 10.0.0.120

nginx-x1w1f 1/1 Running 0 9m 192.168.3.4 10.0.0.120

[root@k8s-master ~/k8s_yaml/rc]# kubectl -s http://10.0.0.110:10000 get rc -o wide

NAME DESIRED CURRENT READY AGE CONTAINER(S) IMAGE(S) SELECTOR

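nginx 7 7 7 10m nginx 10.0.0.140:5000/nginx:1.13 app=aspen

systemctl stop kubelet.service
kubectl delete node NODE_NAME    # delete the given node
kubectl get pod -o wide

In a real environment, monitoring detects nodes in the NotReady state and deletes them from the cluster; the failed node is then repaired and finally restarted so that it rejoins the cluster.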
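The detection step can be sketched as a small filter over `kubectl get node` output. This is an illustration only: the function name `not_ready_nodes` is hypothetical, and the sample text mirrors the node listing in the session that follows, so no cluster is needed to run it.

```shell
#!/usr/bin/env bash
# not_ready_nodes (hypothetical helper): reads `kubectl get node` output on stdin
# and prints the NAME of every node whose STATUS column is exactly NotReady.
not_ready_nodes() {
  awk 'NR > 1 && $2 == "NotReady" { print $1 }'
}

# Sample input shaped like the node listing in this section.
sample='NAME         STATUS     AGE
10.0.0.120   NotReady   119d
10.0.0.130   Ready      119d'

printf '%s\n' "$sample" | not_ready_nodes
```

On a live cluster the same filter could be fed from `kubectl -s http://10.0.0.110:10000 get node`. Matching the STATUS column exactly, rather than grepping the whole line, avoids false hits when a node name happens to contain the string; combined statuses such as a cordoned NotReady node would need a looser match.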

[root@k8s-node01 ~]# systemctl stop kubelet.service

[root@k8s-master ~/k8s_yaml/rc]# kubectl -s http://10.0.0.110:10000 get node

NAME STATUS AGE

10.0.0.120 NotReady 119d

10.0.0.130 Ready 119d

[root@k8s-master ~/k8s_yaml/rc]# kubectl delete node `kubectl -s http://10.0.0.110:10000 get node| awk '/NotReady/ {print $1}'` -s http://10.0.0.110:10000

node "10.0.0.120" deleted

[root@k8s-master ~/k8s_yaml/rc]# kubectl -s http://10.0.0.110:10000 get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx 1/1 Running 3 20h 192.168.23.2 10.0.0.130

nginx-7fchq 1/1 Running 0 6m 192.168.23.9 10.0.0.130

nginx-814d4 1/1 Running 0 22m 192.168.23.5 10.0.0.130

nginx-838tl 1/1 Running 0 22m 192.168.23.4 10.0.0.130

nginx-hz9bx 1/1 Running 0 6m 192.168.23.8 10.0.0.130

nginx-nd69t 1/1 Running 0 6m 192.168.23.7 10.0.0.130

nginx-nq936 1/1 Running 0 12m 192.168.23.6 10.0.0.130

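nginx-sfjf8 1/1 Running 0 6m 192.168.23.3 10.0.0.130

When more Pods match the selector than the replica count specified by the rc, Kubernetes automatically terminates the Pods that have been running for the shortest time.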
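To see how many Pods an rc selector currently matches, the LABELS column of `kubectl get pod --show-labels` can be counted. A minimal sketch, assuming the label sits in the last column as in the listing that follows; the function name `count_matching_pods` is hypothetical and the sample rows are trimmed from that listing.

```shell
#!/usr/bin/env bash
# count_matching_pods (hypothetical helper)
#   $1    : label in key=value form, e.g. app=aspen
#   stdin : output of `kubectl get pod --show-labels`
# Prints the number of Pods that carry the label.
count_matching_pods() {
  awk -v label="$1" '
    NR > 1 {
      n = split($NF, labels, ",")          # a Pod may carry several labels
      for (i = 1; i <= n; i++) {
        if (labels[i] == label) { count++; break }
      }
    }
    END { print count + 0 }
  '
}

sample='NAME          READY   STATUS    RESTARTS   AGE   LABELS
nginx         1/1     Running   4          20h   app=web
nginx-7fchq   1/1     Running   1          11m   app=aspen
nginx-814d4   1/1     Running   1          27m   app=aspen'

printf '%s\n' "$sample" | count_matching_pods app=aspen
printf '%s\n' "$sample" | count_matching_pods app=web
```

The standalone `nginx` Pod is labelled `app=web`, so it is not counted for the `app=aspen` selector until its label is edited.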

[root@k8s-master ~/k8s_yaml/rc]# kubectl -s http://10.0.0.110:10000 get pod --show-labels

NAME READY STATUS RESTARTS AGE LABELS

nginx 1/1 Running 4 20h app=web

nginx-7fchq 1/1 Running 1 11m app=aspen

nginx-814d4 1/1 Running 1 27m app=aspen

nginx-838tl 1/1 Running 1 27m app=aspen

nginx-hz9bx 1/1 Running 1 11m app=aspen

nginx-nd69t 1/1 Running 1 11m app=aspen

nginx-nq936 1/1 Running 1 17m app=aspen

nginx-sfjf8 1/1 Running 1 11m app=aspen

[root@k8s-master ~/k8s_yaml/rc]# kubectl -s http://10.0.0.110:10000 get rc -o wide

NAME DESIRED CURRENT READY AGE CONTAINER(S) IMAGE(S) SELECTOR

nginx 7 7 7 28m nginx 10.0.0.140:5000/nginx:1.13 app=aspen

[root@k8s-master ~/k8s_yaml/rc]# kubectl -s http://10.0.0.110:10000 delete pod nginx-sfjf8

pod "nginx-sfjf8" deleted

[root@k8s-master ~/k8s_yaml/rc]# kubectl -s http://10.0.0.110:10000 get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx 1/1 Running 4 20h 192.168.23.8 10.0.0.130

nginx-7fchq 1/1 Running 1 21m 192.168.23.2 10.0.0.130

nginx-814d4 1/1 Running 1 37m 192.168.23.6 10.0.0.130

nginx-838tl 1/1 Running 1 37m 192.168.23.7 10.0.0.130

nginx-hz9bx 1/1 Running 1 21m 192.168.23.3 10.0.0.130

nginx-nd69t 1/1 Running 1 21m 192.168.23.4 10.0.0.130

nginx-nq936 1/1 Running 1 27m 192.168.23.5 10.0.0.130

nginx-vkgcw 1/1 Running 0 5s 192.168.3.2 10.0.0.120

[root@k8s-master ~/k8s_yaml/rc]# kubectl -s http://10.0.0.110:10000 edit pod nginx

......

  labels:
    app: aspen
  name: nginx

......

[root@k8s-master ~/k8s_yaml/rc]# kubectl -s http://10.0.0.110:10000 get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx 1/1 Running 4 20h 192.168.23.8 10.0.0.130

nginx-7fchq 1/1 Running 1 21m 192.168.23.2 10.0.0.130

nginx-814d4 1/1 Running 1 38m 192.168.23.6 10.0.0.130

nginx-838tl 1/1 Running 1 38m 192.168.23.7 10.0.0.130

nginx-hz9bx 1/1 Running 1 21m 192.168.23.3 10.0.0.130

nginx-nd69t 1/1 Running 1 21m 192.168.23.4 10.0.0.130

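nginx-nq936 1/1 Running 1 28m 192.168.23.5 10.0.0.130

3. Rolling updates and one-step rollback with a ReplicationController

When performing a rolling update with an rc, keep both the original manifest and the updated manifest, so that you can roll back promptly if the update fails.

kubectl rolling-update RC_NAME -f FILE.yml    # rolling update of an rc
    --update-period=DURATION                  # interval between update steps (default: 1 minute)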

[root@k8s-registry ~]# rz -E

rz waiting to receive.

[root@k8s-registry ~]# docker load -i docker_nginx1.15.tar.gz

8b15606a9e3e: Loading layer 58.44 MB/58.44 MB

94ad191a291b: Loading layer 54.35 MB/54.35 MB

92b86b4e7957: Loading layer 3.584 kB/3.584 kB

Loaded image: docker.io/nginx:latest

[root@k8s-registry ~]# docker tag docker.io/nginx:latest 10.0.0.140:5000/nginx:1.15

[root@k8s-registry ~]# docker push 10.0.0.140:5000/nginx:1.15

The push refers to a repository [10.0.0.140:5000/nginx]

92b86b4e7957: Pushed

94ad191a291b: Pushed

8b15606a9e3e: Pushed

1.15: digest: sha256:204a9a8e65061b10b92ad361dd6f406248404fe60efd5d6a8f2595f18bb37aad size: 948

[root@k8s-master ~/k8s_yaml/rc]# kubectl -s http://10.0.0.110:10000 get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx 1/1 Running 4 22h 192.168.23.8 10.0.0.130

nginx-7fchq 1/1 Running 1 2h 192.168.23.2 10.0.0.130

nginx-814d4 1/1 Running 1 2h 192.168.23.6 10.0.0.130

nginx-838tl 1/1 Running 1 2h 192.168.23.7 10.0.0.130

nginx-hz9bx 1/1 Running 1 2h 192.168.23.3 10.0.0.130

nginx-nd69t 1/1 Running 1 2h 192.168.23.4 10.0.0.130

nginx-nq936 1/1 Running 1 2h 192.168.23.5 10.0.0.130

[root@k8s-master ~/k8s_yaml/rc]# curl -sI 192.168.23.7 | awk NR==2

Server: nginx/1.13.12

[root@k8s-master ~/k8s_yaml/rc]# cp k8s_rc_nginx.yml k8s_rc_nginx_update.yml # prepare the new manifest

[root@k8s-master ~/k8s_yaml/rc]# cat k8s_rc_nginx_update.yml
apiVersion: v1
kind: ReplicationController
metadata:
  name: nginxupdate
spec:
  replicas: 3
  selector:
    app: young
  template:
    metadata:
      labels:
        app: young
    spec:
      containers:
        - name: nginxupdate
          image: 10.0.0.140:5000/nginx:1.15
          ports:
            - containerPort: 80

# Rolling update

[root@k8s-master ~/k8s_yaml/rc]# kubectl -s http://10.0.0.110:10000 rolling-update nginx -f k8s_rc_nginx_update.yml --update-period=30s

Created nginxupdate

Scaling up nginxupdate from 0 to 3, scaling down nginx from 7 to 0 (keep 3 pods available, don't exceed 4 pods)

Scaling nginx down to 3

Scaling nginxupdate up to 1

Scaling nginx down to 2

Scaling nginxupdate up to 2

Scaling nginx down to 1

Scaling nginxupdate up to 3

Scaling nginx down to 0

Update succeeded. Deleting nginx

replicationcontroller "nginx" rolling updated to "nginxupdate"

[root@k8s-master ~/k8s_yaml/rc]# kubectl -s http://10.0.0.110:10000 get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginxupdate-8gr14 1/1 Running 0 2m 192.168.3.2 10.0.0.120

nginxupdate-9lvjt 1/1 Running 0 1m 192.168.3.3 10.0.0.120

nginxupdate-ffrml 1/1 Running 0 2m 192.168.23.2 10.0.0.130

[root@k8s-master ~/k8s_yaml/rc]# curl -sI 192.168.23.2 | awk NR==2

Server: nginx/1.15.5

[root@k8s-master ~]# kubectl -s http://10.0.0.110:10000 get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx-814d4 1/1 Running 1 3h 192.168.23.6 10.0.0.130

nginx-838tl 1/1 Running 1 3h 192.168.23.7 10.0.0.130

nginx-nq936 1/1 Running 1 2h 192.168.23.5 10.0.0.130

nginxupdate-8gr14 1/1 Running 0 8s 192.168.3.2 10.0.0.120

[root@k8s-master ~]# kubectl -s http://10.0.0.110:10000 get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx-814d4 1/1 Running 1 3h 192.168.23.6 10.0.0.130

nginx-838tl 1/1 Running 1 3h 192.168.23.7 10.0.0.130

nginxupdate-8gr14 1/1 Running 0 42s 192.168.3.2 10.0.0.120

nginxupdate-ffrml 1/1 Running 0 12s 192.168.23.2 10.0.0.130

[root@k8s-master ~]# kubectl -s http://10.0.0.110:10000 get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx-814d4 1/1 Terminating 1 3h 192.168.23.6 10.0.0.130

nginxupdate-8gr14 1/1 Running 0 1m 192.168.3.2 10.0.0.120

nginxupdate-9lvjt 1/1 Running 0 31s 192.168.3.3 10.0.0.120

nginxupdate-ffrml 1/1 Running 0 1m 192.168.23.2 10.0.0.130

# One-step rollback

[root@k8s-master ~/k8s_yaml/rc]# kubectl -s http://10.0.0.110:10000 rolling-update nginxupdate -f k8s_rc_nginx.yml --update-period=3s

Created nginx

Scaling up nginx from 0 to 7, scaling down nginxupdate from 3 to 0 (keep 7 pods available, don't exceed 8 pods)

Scaling nginx up to 5

Scaling nginxupdate down to 2

Scaling nginx up to 6

Scaling nginxupdate down to 1

Scaling nginx up to 7

Scaling nginxupdate down to 0

Update succeeded. Deleting nginxupdate

replicationcontroller "nginxupdate" rolling updated to "nginx"

[root@k8s-master ~/k8s_yaml/rc]# kubectl -s http://10.0.0.110:10000 get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx-49pg0 1/1 Running 0 16s 192.168.3.6 10.0.0.120

nginx-6f17v 1/1 Running 0 20s 192.168.23.3 10.0.0.130

nginx-6hrsf 1/1 Running 0 20s 192.168.23.5 10.0.0.130

nginx-f3x1z 1/1 Running 0 20s 192.168.3.5 10.0.0.120

nginx-g80tp 1/1 Running 0 13s 192.168.3.3 10.0.0.120

nginx-pq311 1/1 Running 0 20s 192.168.23.4 10.0.0.130

nginx-zxh62 1/1 Running 0 20s 192.168.3.4 10.0.0.120

[root@k8s-master ~/k8s_yaml/rc]# curl -sI 192.168.23.3 | awk NR==2

Server: nginx/1.13.12

[root@k8s-master ~]# kubectl -s http://10.0.0.110:10000 describe rc nginx

Name: nginx

Namespace: default

Image(s): 10.0.0.140:5000/nginx:1.13

Selector: app=aspen

Labels: app=aspen

......

14m 14m 1 {replication-controller } Normal SuccessfulCreate Created pod: nginx-49pg0

14m 14m 1 {replication-controller } Normal SuccessfulCreate Created pod: nginx-g80tp

[root@k8s-master ~]# kubectl -s http://10.0.0.110:10000 scale rc nginx --replicas=3

replicationcontroller "nginx" scaled

[root@k8s-master ~]# kubectl -s http://10.0.0.110:10000 get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx-6f17v 1/1 Running 0 15m 192.168.23.3 10.0.0.130

nginx-f3x1z 1/1 Running 0 15m 192.168.3.5 10.0.0.120

nginx-pq311 1/1 Running 0 15m 192.168.23.4 10.0.0.130三、 Service资源

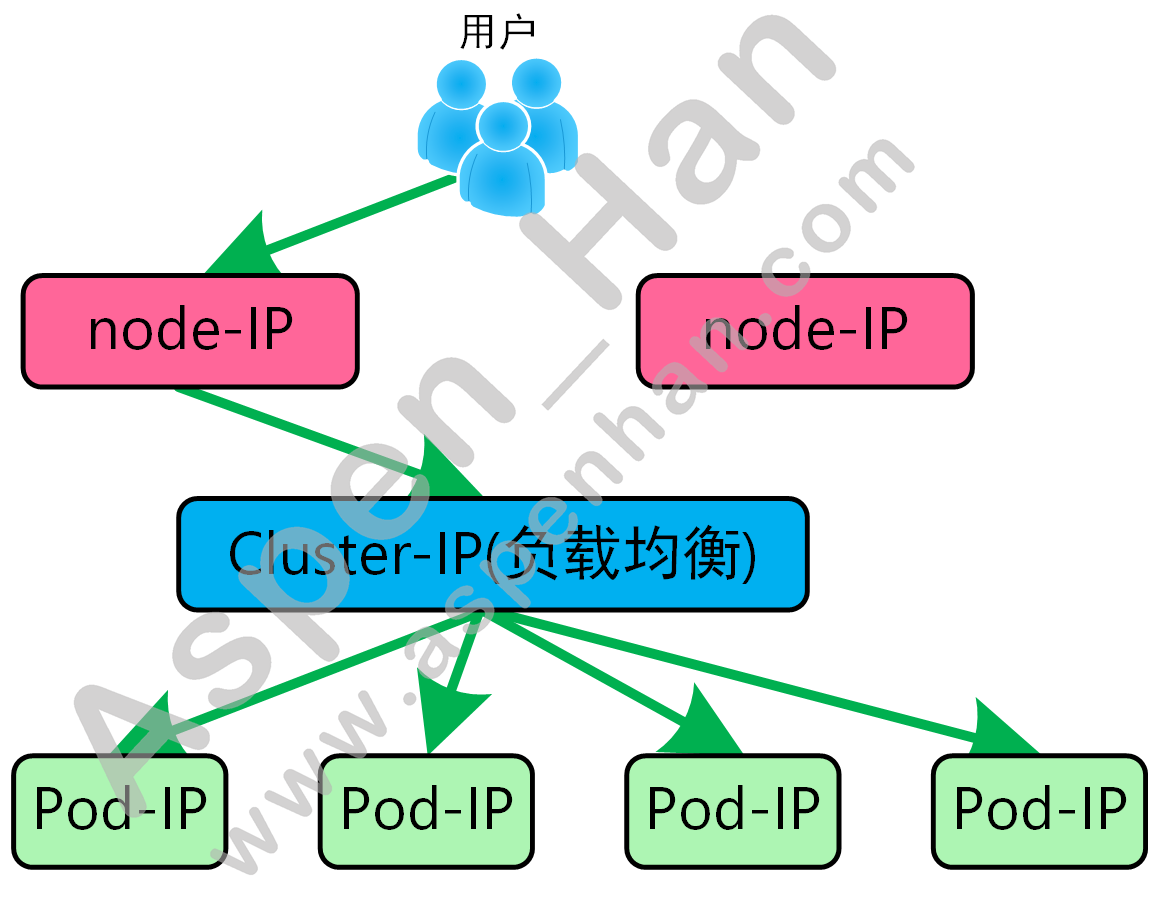

Service资源用于帮助外界访问容器内部的业务并为Pod资源提供负载均衡功能;Service通过标签选择器来关联Pod。

Service资源默认使用iptables实现负载均衡,k8s 1.8新版本中推荐使用Lvs实现负载均衡。

|

不同的svc资源可以使用同一个标签选择器; 但是不能映射同一端口;不能使用同一个名字; |

1. Service资源 IP地址

- node IP

[root@k8s-node01 ~]# ifconfig eth0 | awk 'NR==2 {print $2}'

10.0.0.120

[root@k8s-node2 ~]# ifconfig eth0 | awk 'NR==2 {print $2}'
10.0.0.130

- Cluster IP

[root@k8s-master ~]# awk '/service/' /etc/kubernetes/apiserver

# Address range to use for services

KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.100.0.0/16"

- Pod IP

[root@k8s-master ~]# awk '/PREFIX/' /etc/sysconfig/flanneld

FLANNEL_ETCD_PREFIX="/aspenhan.com/network"

[root@k8s-master ~]# awk -F '"' '/PREFIX/ {print $2}' /etc/sysconfig/flanneld

/aspenhan.com/network

[root@k8s-master ~]# etcdctl get `awk -F '"' '/PREFIX/ {print $2}' /etc/sysconfig/flanneld | sed 's#.*#&/config#g'`

{"Network":"192.168.0.0/16"}
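The Pod network can be pulled out of the JSON that `etcdctl` returns with a one-line filter. A minimal sketch: `flannel_network` is a hypothetical name, and the `sed` pattern assumes the flat, single-line JSON shown above; a real JSON parser would be more robust.

```shell
#!/usr/bin/env bash
# flannel_network (hypothetical helper): reads the flannel config JSON on stdin
# and prints the value of its "Network" key.
flannel_network() {
  sed -n 's/.*"Network"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p'
}

# Sample value copied from the etcdctl output above.
printf '%s\n' '{"Network":"192.168.0.0/16"}' | flannel_network
```

Together with the node addresses (10.0.0.x) and the Service range (10.100.0.0/16), this gives the three address ranges discussed in this subsection.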

2. Creating a Service (YAML)

apiVersion: v1
kind: Service
metadata:
  name: NAME
spec:
  type: NodePort
  ports:
    - port: PORT           # Cluster IP port
      nodePort: PORT       # port on the host (node)
      targetPort: PORT     # Pod port
  selector:
    app: LABEL

[root@k8s-master ~]# mkdir k8s_yaml/svc

[root@k8s-master ~]# cd k8s_yaml/svc

[root@k8s-master ~/k8s_yaml/svc]# vim k8s_svc_aspen.yml

apiVersion: v1
kind: Service
metadata:
  name: nginx
spec:
  type: NodePort
  ports:
    - port: 80
      nodePort: 30000
      targetPort: 80
  selector:
    app: aspen

NodePort: maps a port on each host to the Cluster IP.
ClusterIP: no host port mapping; this is the default type.

[root@k8s-master ~/k8s_yaml/svc]# kubectl -s http://10.0.0.110:10000 create -f k8s_svc_aspen.yml

service "nginx" created

[root@k8s-master ~/k8s_yaml/svc]# kubectl -s http://10.0.0.110:10000 get svc -o wide

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

kubernetes 10.100.0.1 <none> 443/TCP 120d <none>

nginx 10.100.41.182 <nodes> 80:30000/TCP 2m app=aspen

[root@k8s-master ~/k8s_yaml/svc]# kubectl -s http://10.0.0.110:10000 describe svc nginx

Name: nginx

Namespace: default

Labels: <none>

Selector: app=aspen

Type: NodePort

IP: 10.100.41.182

Port: <unset> 80/TCP

NodePort: <unset> 30000/TCP

Endpoints: 192.168.23.3:80,192.168.23.4:80,192.168.3.5:80

Session Affinity: None

No events.

[root@k8s-master ~/k8s_yaml/svc]# kubectl -s http://10.0.0.110:10000 scale rc --replicas=5 nginx

replicationcontroller "nginx" scaled

[root@k8s-master ~/k8s_yaml/svc]# kubectl -s http://10.0.0.110:10000 describe svc nginx

Name: nginx

Namespace: default

Labels: <none>

Selector: app=aspen

Type: NodePort

IP: 10.100.41.182

Port: <unset> 80/TCP

NodePort: <unset> 30000/TCP

Endpoints: 192.168.23.3:80,192.168.23.4:80,192.168.3.2:80 + 2 more...

Session Affinity: None

No events.

[root@k8s-node01 ~]# netstat -lntup| grep '30000'
tcp6 0 0 :::30000 :::* LISTEN 8356/kube-proxy

[root@k8s-node2 ~]# netstat -lntup| grep '30000'
tcp6 0 0 :::30000 :::* LISTEN 8228/kube-proxy

[root@k8s-master ~/k8s_yaml/svc]# curl -sI http://10.0.0.120:30000 | sed -n 2p

Server: nginx/1.13.12

[root@k8s-master ~/k8s_yaml/svc]# curl -sI http://10.0.0.130:30000 | sed -n 2p

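Server: nginx/1.13.12

3. Creating a Service (command line)

nodePort range: the default is 30000-32767. It can be changed with the kube-apiserver option --service-node-port-range, as the session below shows.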
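Before writing a `nodePort` into a manifest, the value can be checked against the configured range. A minimal sketch, assuming the default range unless another one is passed in; the function name `node_port_in_range` is hypothetical and this is not how the API server itself validates the field.

```shell
#!/usr/bin/env bash
# node_port_in_range (hypothetical helper)
#   $1 : candidate node port
#   $2 : lower bound (defaults to 30000)
#   $3 : upper bound (defaults to 32767)
# Returns 0 when the port lies inside the range, 1 otherwise.
node_port_in_range() {
  local port=$1 min=${2:-30000} max=${3:-32767}
  [ "$port" -ge "$min" ] && [ "$port" -le "$max" ]
}

node_port_in_range 30000 && echo "30000: inside the default range"
node_port_in_range 3000 || echo "3000: outside the default range"
node_port_in_range 3000 3000 50000 && echo "3000: inside 3000-50000"
```

The second call corresponds to the rejected `nodePort: 3000` in the session below, and the third to the same port after the range was widened to 3000-50000.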

[root@k8s-master ~/k8s_yaml/svc]# tail -1 /etc/kubernetes/apiserver

KUBE_API_ARGS=""

[root@k8s-master ~/k8s_yaml/svc]# kubectl create -f k8s_svc_test.yml -s http://10.0.0.110:10000

The Service "nginx" is invalid: spec.ports[0].nodePort: Invalid value: 3000: provided port is not in the valid range. The range of valid ports is 30000-32767

[root@k8s-master ~/k8s_yaml/svc]# tail -1 /etc/kubernetes/apiserver

KUBE_API_ARGS="--service-node-port-range=3000-50000"

[root@k8s-master ~/k8s_yaml/svc]# systemctl restart kube-apiserver.service

[root@k8s-master ~/k8s_yaml/svc]# kubectl create -f k8s_svc_test.yml -s http://10.0.0.110:10000

service "test" created

[root@k8s-node01 ~]# netstat -lntup | grep 3000
tcp6 0 0 :::30000 :::* LISTEN 8356/kube-proxy
tcp6 0 0 :::3000 :::* LISTEN 8356/kube-proxy

kubectl expose RESOURCE_TYPE RESOURCE_NAME --name=SERVICE_NAME --type=NodePort --port=VIP_PORT --target-port=POD_PORT    # create a Service

A Service created with kubectl expose cannot specify the node port.

[root@k8s-master ~]# kubectl -s http://10.0.0.110:10000 expose rc nginx --name=test2 --type=NodePort --port=80 --target-port=80

service "test2" exposed

[root@k8s-master ~]# kubectl -s http://10.0.0.110:10000 get svc -o wide

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

kubernetes 10.100.0.1 <none> 443/TCP 121d <none>

nginx 10.100.41.182 <nodes> 80:30000/TCP 22h app=aspen

nginx2 10.100.179.67 <nodes> 80:31244/TCP 21h app=aspen

test 10.100.26.217 <nodes> 80:3000/TCP 20h app=aspen

test2 10.100.109.99 <nodes> 80:3818/TCP 45s app=aspen

4. Testing the Service

kubectl exec -it POD_NAME COMMAND    # enter the container

[root@k8s-master ~/k8s_yaml/svc]# kubectl -s http://10.0.0.110:10000 get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx-6f17v 1/1 Running 1 1h 192.168.23.3 10.0.0.130

nginx-84ddt 1/1 Running 1 24m 192.168.3.4 10.0.0.120

nginx-b4bcz 1/1 Running 1 24m 192.168.3.3 10.0.0.120

nginx-f3x1z 1/1 Running 1 1h 192.168.3.2 10.0.0.120

nginx-pq311 1/1 Running 1 1h 192.168.23.2 10.0.0.130

[root@k8s-master ~/k8s_yaml/svc]# kubectl -s http://10.0.0.110:10000 exec -it nginx-6f17v /bin/bash

root@nginx-6f17v:/# echo "web01" > /usr/share/nginx/html/index.html

[root@k8s-master ~/k8s_yaml/svc]# kubectl -s http://10.0.0.110:10000 exec -it nginx-84ddt /bin/bash

root@nginx-84ddt:/# echo web02 > /usr/share/nginx/html/index.html

root@nginx-84ddt:/# exit

[root@k8s-master ~/k8s_yaml/svc]# kubectl -s http://10.0.0.110:10000 exec -it nginx-b4bcz /bin/bash

root@nginx-b4bcz:/# echo web03 > /usr/share/nginx/html/index.html

root@nginx-b4bcz:/# exit

[root@k8s-master ~/k8s_yaml/svc]# kubectl -s http://10.0.0.110:10000 exec -it nginx-f3x1z /bin/bash

root@nginx-f3x1z:/# echo web04 > /usr/share/nginx/html/index.html

root@nginx-f3x1z:/# exit

[root@k8s-master ~/k8s_yaml/svc]# kubectl -s http://10.0.0.110:10000 exec -it nginx-pq311 /bin/bash

root@nginx-pq311:/# echo web05 > /usr/share/nginx/html/index.html

root@nginx-pq311:/# exit

[root@k8s-master ~/k8s_yaml/svc]# curl -s http://10.0.0.120:30000

web03

[root@k8s-master ~/k8s_yaml/svc]# curl -s http://10.0.0.120:30000

web04

[root@k8s-master ~/k8s_yaml/svc]# curl -s http://10.0.0.120:30000

web03

[root@k8s-master ~/k8s_yaml/svc]# curl -s http://10.0.0.120:30000

web05

[root@k8s-master ~/k8s_yaml/svc]# curl -s http://10.0.0.120:30000

web02

[root@k8s-master ~/k8s_yaml/svc]# curl -s http://10.0.0.120:30000

web02

[root@k8s-master ~/k8s_yaml/svc]# curl -s http://10.0.0.120:30000

web01

IV. Deployment resources

A Deployment solves the problem of service access being interrupted during a ReplicationController rolling update.

1. Creating a Deployment (YAML)

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: NAME
spec:
  replicas: N
  minReadySeconds: N         # seconds a new Pod must stay ready before it counts as available; paces the rolling upgrade
  template:
    metadata:
      labels:
        app: LABEL
    spec:
      containers:
        - name: NAME
          image: REGISTRY:PORT/IMAGE:TAG
          command: ["COMMAND", "ARGUMENT"]
          ports:
            - containerPort: PORT
          resources:           # resource constraints for the container
            limits:            # upper bound
              cpu: 100m
            requests:          # lower bound
              cpu: 100m

[root@k8s-master ~]# mkdir -p k8s_yaml/Deployment

[root@k8s-master ~]# cd k8s_yaml/Deployment/

[root@k8s-master ~/k8s_yaml/Deployment]# cat k8s_deploy_nginx.yml
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: nginx
spec:
  replicas: 4
  minReadySeconds: 45
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: 10.0.0.140:5000/nginx:1.13
          ports:
            - containerPort: 80
          resources:
            limits:
              cpu: 100m
            requests:
              cpu: 100m

[root@k8s-master ~/k8s_yaml/Deployment]# kubectl -s http://10.0.0.110:10000 create -f k8s_deploy_nginx.yml

deployment "nginx" created

[root@k8s-master ~/k8s_yaml/Deployment]# kubectl -s http://10.0.0.110:10000 get deploy

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

nginx 4 4 4 0 35s

[root@k8s-master ~/k8s_yaml/Deployment]# kubectl -s http://10.0.0.110:10000 get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx-3790091926-1strc 1/1 Running 0 5m 192.168.7.9 10.0.0.130

nginx-3790091926-6nghj 1/1 Running 0 5m 192.168.7.7 10.0.0.130

nginx-3790091926-pkb7j 1/1 Running 0 5m 192.168.7.8 10.0.0.130

nginx-3790091926-qqh95 1/1 Running 0 5m 192.168.7.10 10.0.0.130

......

[root@k8s-master ~/k8s_yaml/Deployment]# kubectl -s http://10.0.0.110:10000 expose deployment nginx --type=NodePort --port=80 --target-port=80 --name=deploy-nginx

service "deploy-nginx" exposed

[root@k8s-master ~/k8s_yaml/Deployment]# kubectl -s http://10.0.0.110:10000 get svc deploy-nginx -o wide

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

deploy-nginx 10.100.91.246 <nodes> 80:12874/TCP 1m app=nginx

[root@k8s-master ~/k8s_yaml/Deployment]# curl -sI 10.0.0.130:12874 | sed -n 1,2p

HTTP/1.1 200 OK

Server: nginx/1.13.12

[root@k8s-master ~/k8s_yaml/Deployment]# kubectl -s http://10.0.0.110:10000 get all -o wide

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/nginx 4 4 4 4 2h

......

NAME DESIRED CURRENT READY AGE CONTAINER(S) IMAGE(S) SELECTOR

rs/nginx-3790091926 4 4 4 2h nginx 10.0.0.140:5000/nginx:1.13 app=nginx,pod-template-hash=3790091926

NAME READY STATUS RESTARTS AGE IP NODE

po/nginx-3790091926-52wb3 1/1 Running 0 1m 192.168.7.10 10.0.0.130

po/nginx-3790091926-5hwb0 1/1 Running 0 1m 192.168.7.16 10.0.0.130

po/nginx-3790091926-jc6qs 1/1 Running 0 1m 192.168.7.17 10.0.0.130

po/nginx-3790091926-l6c38 1/1 Running 0 1m 192.168.7.18 10.0.0.130

......

2. Creating a Deployment (command line)

kubectl run NAME --image=IMAGE:TAG --replicas=N --record    # create a Deployment

[root@k8s-master ~/k8s_yaml/Deployment]# kubectl -s http://10.0.0.110:10000 run nginx2 --image=10.0.0.140:5000/nginx:1.13 --replicas=5 --record
deployment "nginx2" created

[root@k8s-master ~/k8s_yaml/Deployment]# kubectl -s http://10.0.0.110:10000 get all -o wide

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/nginx 4 4 4 4 2h

deploy/nginx2 5 5 5 5 11m

......

NAME DESIRED CURRENT READY AGE CONTAINER(S) IMAGE(S) SELECTOR

rs/nginx-3790091926 0 0 0 2h nginx 10.0.0.140:5000/nginx:1.13 app=nginx,pod-template-hash=3790091926

rs/nginx-3996923544 4 4 4 2h nginx 10.0.0.140:5000/nginx:1.15 app=nginx,pod-template-hash=3996923544

rs/nginx2-2756484863 5 5 5 11m nginx2 10.0.0.140:5000/nginx:1.13 pod-template-hash=2756484863,run=nginx2

NAME READY STATUS RESTARTS AGE IP NODE

......

po/nginx2-2756484863-5bcrd 1/1 Running 0 11m 192.168.7.8 10.0.0.130

po/nginx2-2756484863-b4z3w 1/1 Running 0 11m 192.168.7.15 10.0.0.130

po/nginx2-2756484863-dg5xs 1/1 Running 0 11m 192.168.7.7 10.0.0.130

po/nginx2-2756484863-mh79l 1/1 Running 0 11m 192.168.7.14 10.0.0.130

po/nginx2-2756484863-vh5lh 1/1 Running 0 11m 192.168.7.9 10.0.0.130

3. Rolling upgrades with a Deployment

- Upgrading by editing the YAML

kubectl edit deployment NAME    # modify resource attributes

[root@k8s-master ~/k8s_yaml/Deployment]# kubectl -s http://10.0.0.110:10000 edit deployment nginx

# Please edit the object below. Lines beginning with a '#' will be ignored,

# and an empty file will abort the edit. If an error occurs while saving this file will be

# reopened with the relevant failures.

......

        app: nginx
    spec:
      containers:
      - image: 10.0.0.140:5000/nginx:1.15
        imagePullPolicy: IfNotPresent
        name: nginx

......

[root@k8s-master ~/k8s_yaml/Deployment]# curl -sI 10.0.0.130:12874 | sed -n 1,2p

HTTP/1.1 200 OK

Server: nginx/1.15.5

[root@k8s-master ~/k8s_yaml/Deployment]# curl -sI 10.0.0.130:12874 | sed -n 1,2p

HTTP/1.1 200 OK

Server: nginx/1.13.12

[root@k8s-master ~/k8s_yaml/Deployment]# curl -sI 10.0.0.130:12874 | sed -n 1,2p

HTTP/1.1 200 OK

Server: nginx/1.13.12

[root@k8s-master ~/k8s_yaml/Deployment]# curl -sI 10.0.0.130:12874 | sed -n 1,2p

HTTP/1.1 200 OK

Server: nginx/1.15.5

[root@k8s-master ~/k8s_yaml/Deployment]# curl -sI 10.0.0.130:12874 | sed -n 1,2p

# During the upgrade

[root@k8s-master ~/k8s_yaml/Deployment]# kubectl -s http://10.0.0.110:10000 get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx-3790091926-1strc 1/1 Running 0 10m 192.168.7.9 10.0.0.130

nginx-3790091926-6nghj 1/1 Running 0 10m 192.168.7.7 10.0.0.130

nginx-3790091926-pkb7j 1/1 Running 0 10m 192.168.7.8 10.0.0.130

nginx-3996923544-7rx4q 1/1 Running 0 45s 192.168.7.12 10.0.0.130

nginx-3996923544-zs7zp 1/1 Running 0 45s 192.168.7.11 10.0.0.130

# Upgrade complete

[root@k8s-master ~/k8s_yaml/Deployment]# kubectl -s http://10.0.0.110:10000 get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx-3996923544-2szz1 1/1 Running 0 48s 192.168.7.13 10.0.0.130

nginx-3996923544-7rx4q 1/1 Running 0 1m 192.168.7.12 10.0.0.130

nginx-3996923544-tvhkd 1/1 Running 0 48s 192.168.7.10 10.0.0.130

nginx-3996923544-zs7zp 1/1 Running 0 1m 192.168.7.11 10.0.0.130

[root@k8s-master ~/k8s_yaml/Deployment]# curl -sI 10.0.0.130:12874 | sed -n 1,2p

HTTP/1.1 200 OK

Server: nginx/1.15.5

[root@k8s-master ~/k8s_yaml/Deployment]# curl -sI 10.0.0.130:12874 | sed -n 1,2p

HTTP/1.1 200 OK

Server: nginx/1.15.5

[root@k8s-master ~/k8s_yaml/Deployment]# curl -sI 10.0.0.130:12874 | sed -n 1,2p

HTTP/1.1 200 OK

Server: nginx/1.15.5

[root@k8s-master ~/k8s_yaml/Deployment]# kubectl -s http://10.0.0.110:10000 get all -o wide

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/nginx 4 4 4 4 2h

deploy/nginx2 5 5 5 5 9m

......

NAME DESIRED CURRENT READY AGE CONTAINER(S) IMAGE(S) SELECTOR

rs/nginx-3790091926 0 0 0 2h nginx 10.0.0.140:5000/nginx:1.13 app=nginx,pod-template-hash=3790091926

rs/nginx-3996923544 4 4 4 2h nginx 10.0.0.140:5000/nginx:1.15 app=nginx,pod-template-hash=3996923544

rs/nginx2-2756484863 5 5 5 9m nginx2 10.0.0.140:5000/nginx:1.13 pod-template-hash=2756484863,run=nginx2

NAME READY STATUS RESTARTS AGE IP NODE

po/nginx-3996923544-37wm7 1/1 Running 0 3m 192.168.7.11 10.0.0.130

po/nginx-3996923544-t7d53 1/1 Running 0 3m 192.168.7.12 10.0.0.130

po/nginx-3996923544-v97rl 1/1 Running 0 2m 192.168.7.13 10.0.0.130

po/nginx-3996923544-w7crv 1/1 Running 0 2m 192.168.7.18 10.0.0.130

......

- Upgrading from the shell

| kubectl set image deployment RESOURCE_NAME CONTAINER_NAME=IMAGE_NAME:TAG |

[root@k8s-master ~/k8s_yaml/Deployment]# kubectl -s http://10.0.0.110:10000 set image deploy nginx2 nginx2=10.0.0.140:5000/nginx:1.17

deployment "nginx2" image updated

Note: the image nginx:1.17 does not exist, so the failure reported below is expected.
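Why the rollout stalls instead of taking the service down can be sketched with a small model. The code below is a simplified approximation of the rolling-update bookkeeping, not the controller's actual code. It assumes `maxSurge=1` and `maxUnavailable=1` (an assumption, since the Deployment's strategy is not shown in this walkthrough), and the function name `stalled_rollout` is hypothetical.

```python
def stalled_rollout(desired, max_surge=1, max_unavailable=1):
    """Model a rolling update whose new pods never become ready.

    Returns (old_replicas, new_replicas) at the point where neither
    ReplicaSet can be scaled any further.
    """
    max_total = desired + max_surge            # upper bound on pods across both ReplicaSets
    min_available = desired - max_unavailable  # lower bound on ready pods
    old, new = desired, 0
    progressed = True
    while progressed:
        progressed = False
        # Scale the new ReplicaSet up while the surge budget has room.
        room = min(max_total - (old + new), desired - new)
        if room > 0:
            new += room
            progressed = True
        # New pods are stuck pulling the image, so only old pods count as
        # available; old pods may be removed only down to min_available.
        removable = old - min_available
        if removable > 0:
            old -= removable
            progressed = True
    return old, new


old, new = stalled_rollout(5)
print(old, new, old + new)  # 4 2 6
```

Under these assumptions a 5-replica Deployment settles at 4 old pods and 2 new pods, which is consistent with the `deploy/nginx2 5 6 2 4` line and the two ReplicaSets in the listing that follows.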

[root@k8s-master ~/k8s_yaml/Deployment]# kubectl -s http://10.0.0.110:10000 get all -o wide

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/nginx 4 4 4 4 2h

deploy/nginx2 5 6 2 4 15m

......

NAME DESIRED CURRENT READY AGE CONTAINER(S) IMAGE(S) SELECTOR

......

rs/nginx2-2756484863 4 4 4 15m nginx2 10.0.0.140:5000/nginx:1.13 pod-template-hash=2756484863,run=nginx2

rs/nginx2-3056115459 2 2 0 1m nginx2 10.0.0.140:5000/nginx:1.17 pod-template-hash=3056115459,run=nginx2

NAME READY STATUS RESTARTS AGE IP NODE

......

po/nginx2-3056115459-j92v9 0/1 ImagePullBackOff 0 1m 192.168.7.16 10.0.0.130

po/nginx2-3056115459-r39bh 0/1 ImagePullBackOff 0 1m 192.168.7.10 10.0.0.130

4. One-click rollback based on Deployment

|

kubectl rollout undo deployment RESOURCE_NAME # roll the Deployment back to the previous revision

--to-revision=n # roll back to a specific revision

kubectl rollout history deployment RESOURCE_NAME # show the Deployment's revision history |

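| If an upgrade or rollback produces a version identical to one already recorded in the history, the old revision number of that record is removed and the record is given a new number (the current latest revision number). |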
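The renumbering rule can be modelled in a few lines of Python. This is a toy model of the behaviour described above, not kubectl's implementation: `apply_template` and `rollback` are hypothetical names, and a "template" here is reduced to an image tag.

```python
def apply_template(history, template):
    """Record a rollout of `template`.

    `history` is a list of (revision, template) pairs, oldest first.
    If the template already appears in the history, its old entry is
    dropped and it is re-recorded under the next revision number.
    """
    if history and history[-1][1] == template:
        return list(history)  # already the current version, no new revision
    next_revision = max((rev for rev, _ in history), default=0) + 1
    kept = [(rev, tpl) for rev, tpl in history if tpl != template]
    kept.append((next_revision, template))
    return kept


def rollback(history, to_revision=None):
    """Roll back to the previous revision, or to `to_revision` if given."""
    if to_revision is None:
        target = history[-2][1]  # second-newest entry
    else:
        target = dict(history)[to_revision]
    return apply_template(history, target)


history = []
for tag in ("1.13", "1.17", "1.15"):
    history = apply_template(history, tag)
history = rollback(history)                  # back to 1.17
history = rollback(history, to_revision=1)   # back to 1.13
print(history)  # [(3, '1.15'), (4, '1.17'), (5, '1.13')]
```

The final history holds revisions 3, 4 and 5, the same numbering that the `nginx2` transcript in this section ends with.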

[root@k8s-master ~/k8s_yaml/Deployment]# kubectl -s http://10.0.0.110:10000 rollout history deployment nginx

deployments "nginx"

REVISION CHANGE-CAUSE

1 <none>

2 <none>

[root@k8s-master ~/k8s_yaml/Deployment]# kubectl -s http://10.0.0.110:10000 set image deploy nginx2 nginx2=10.0.0.140:5000/nginx:1.15

deployment "nginx2" image updated

[root@k8s-master ~/k8s_yaml/Deployment]# kubectl -s http://10.0.0.110:10000 rollout history deployment nginx2

deployments "nginx2"

REVISION CHANGE-CAUSE

1 kubectl -s http://10.0.0.110:10000 run nginx2 --image=10.0.0.140:5000/nginx:1.13 --replicas=5 --record

2 kubectl -s http://10.0.0.110:10000 set image deploy nginx2 nginx2=10.0.0.140:5000/nginx:1.17

3 kubectl -s http://10.0.0.110:10000 set image deploy nginx2 nginx2=10.0.0.140:5000/nginx:1.15

[root@k8s-master ~/k8s_yaml/Deployment]# kubectl -s http://10.0.0.110:10000 rollout undo deployment nginx

deployment "nginx" rolled back

[root@k8s-master ~/k8s_yaml/Deployment]# kubectl -s http://10.0.0.110:10000 rollout undo deployment nginx2

deployment "nginx2" rolled back

[root@k8s-master ~/k8s_yaml/Deployment]# kubectl -s http://10.0.0.110:10000 rollout history deployment nginx

deployments "nginx"

REVISION CHANGE-CAUSE

2 <none>

3 <none>

[root@k8s-master ~/k8s_yaml/Deployment]# kubectl -s http://10.0.0.110:10000 rollout history deployment nginx2

deployments "nginx2"

REVISION CHANGE-CAUSE

1 kubectl -s http://10.0.0.110:10000 run nginx2 --image=10.0.0.140:5000/nginx:1.13 --replicas=5 --record

3 kubectl -s http://10.0.0.110:10000 set image deploy nginx2 nginx2=10.0.0.140:5000/nginx:1.15

4 kubectl -s http://10.0.0.110:10000 set image deploy nginx2 nginx2=10.0.0.140:5000/nginx:1.17

[root@k8s-master ~/k8s_yaml/Deployment]# kubectl -s http://10.0.0.110:10000 rollout undo deployment nginx2 --to-revision=1

deployment "nginx2" rolled back

[root@k8s-master ~/k8s_yaml/Deployment]# kubectl -s http://10.0.0.110:10000 rollout history deployment nginx2

deployments "nginx2"

REVISION CHANGE-CAUSE

3 kubectl -s http://10.0.0.110:10000 set image deploy nginx2 nginx2=10.0.0.140:5000/nginx:1.15

4 kubectl -s http://10.0.0.110:10000 set image deploy nginx2 nginx2=10.0.0.140:5000/nginx:1.17

5 kubectl -s http://10.0.0.110:10000 run nginx2 --image=10.0.0.140:5000/nginx:1.13 --replicas=5 --record

Example: Tomcat+MySQL

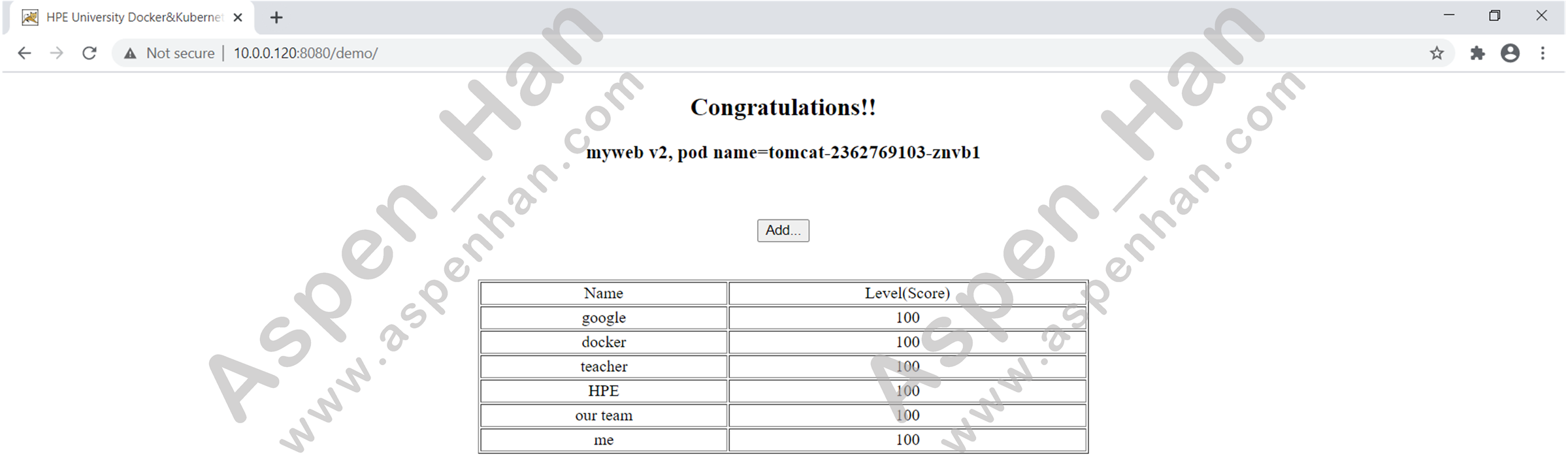

In Kubernetes, containers reach one another through the Cluster IP.
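Besides the Cluster IP itself, Kubernetes injects a Service's address into pods started after the Service exists, using environment variables named after the Service (`MYSQL_SERVICE_HOST` and `MYSQL_SERVICE_PORT` for a Service called `mysql`). The sketch below shows how a client could derive and read those names. `service_env_names` and `resolve_service` are hypothetical helpers, and the address in the usage lines is the one the `mysql` Service receives later in this example.

```python
import os


def service_env_names(service_name):
    """Return the (host, port) variable names for a Service.

    The Service name is upper-cased and dashes become underscores.
    """
    prefix = service_name.upper().replace("-", "_")
    return f"{prefix}_SERVICE_HOST", f"{prefix}_SERVICE_PORT"


def resolve_service(service_name, environ=None):
    """Look up a Service's host and port in the given environment."""
    environ = os.environ if environ is None else environ
    host_var, port_var = service_env_names(service_name)
    return environ[host_var], int(environ[port_var])


# Example with an explicit environment instead of the real one.
env = {"MYSQL_SERVICE_HOST": "10.100.223.213", "MYSQL_SERVICE_PORT": "3306"}
print(resolve_service("mysql", env))  # ('10.100.223.213', 3306)
```

The Tomcat Deployment in step 4 sets these two variables explicitly rather than relying on injection, which keeps the example independent of the order in which the resources are created.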

# Clean up the environment

[root@k8s-master ~]# kubectl -s http://10.0.0.110:10000 get all -o wide

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

svc/kubernetes 10.100.0.1 <none> 443/TCP 2m <none>

1. Prepare the images

|

Sample code (extraction code: omou) |

[root@k8s-registry ~]# ls

anaconda-ks.cfg docker-mysql-5.7.tar.gz tomcat-app-v2.tar.gz

[root@k8s-registry ~]# for i in `ls *.tar.gz`;do docker load -i $i;done;

9e63c5bce458: Loading layer 131 MB/131 MB

......

Loaded image: docker.io/mysql:5.7

4dcab49015d4: Loading layer 130.9 MB/130.9 MB

......

Loaded image: docker.io/kubeguide/tomcat-app:v2

[root@k8s-registry ~]# docker images | grep docker.io | grep -v nginx

docker.io/registry latest 2d4f4b5309b1 5 months ago 26.2 MB

docker.io/tianyebj/pod-infrastructure latest 34d3450d733b 3 years ago 205 MB

docker.io/mysql 5.7 b7dc06006192 4 years ago 386 MB

docker.io/kubeguide/tomcat-app v2 00beaa1d956d 4 years ago 358 MB

[root@k8s-registry ~]# docker tag docker.io/mysql:5.7 10.0.0.140:5000/mysql:5.7

[root@k8s-registry ~]# docker tag docker.io/kubeguide/tomcat-app:v2 10.0.0.140:5000/tomcat-app:v2

[root@k8s-registry ~]# docker push 10.0.0.140:5000/tomcat-app:v2

The push refers to a repository [10.0.0.140:5000/tomcat-app]

......

v2: digest: sha256:dd1ecbb64640e542819303d5667107a9c162249c14d57581cd09c2a4a19095a0 size: 5719

[root@k8s-registry ~]# docker push 10.0.0.140:5000/mysql:5.7

The push refers to a repository [10.0.0.140:5000/mysql]

......

5.7: digest: sha256:e41e467ce221d6e71601bf8c167c996b3f7f96c55e7a580ef72f75fdf9289501 size: 2616

[root@k8s-registry ~]# docker images| grep -v docker.io | grep -v nginx

REPOSITORY TAG IMAGE ID CREATED SIZE

10.0.0.140:5000/pod-infrastructure latest 34d3450d733b 3 years ago 205 MB

10.0.0.140:5000/mysql 5.7 b7dc06006192 4 years ago 386 MB

10.0.0.140:5000/tomcat-app v2 00beaa1d956d 4 years ago 358 MB

2. MySQL Deployment resource file

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: mysql
spec:
  replicas: 1
  minReadySeconds: 30
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
      - name: mysql
        image: 10.0.0.140:5000/mysql:5.7
        ports:
        - containerPort: 3306
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: '123456'

[root@k8s-master ~]# cd k8s_yaml/

[root@k8s-master ~/k8s_yaml]# vim k8s_deploy_mysql.yaml

[root@k8s-master ~/k8s_yaml]# kubectl -s http://10.0.0.110:10000 create -f k8s_deploy_mysql.yaml

deployment "mysql" created

[root@k8s-master ~/k8s_yaml]# kubectl -s http://10.0.0.110:10000 get deployment

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

mysql 1 1 1 1 53s

[root@k8s-master ~/k8s_yaml]# kubectl -s http://10.0.0.110:10000 get rs

NAME DESIRED CURRENT READY AGE

mysql-3158564321 1 1 1 1m

[root@k8s-master ~/k8s_yaml]# kubectl -s http://10.0.0.110:10000 get pod

NAME READY STATUS RESTARTS AGE

mysql-3158564321-657d6 1/1 Running 0 1m

3. MySQL Service resource file

apiVersion: v1
kind: Service
metadata:
  name: mysql
spec:
  ports:
  - port: 3306
    targetPort: 3306
  selector:
    app: mysql

[root@k8s-master ~/k8s_yaml]# kubectl -s http://10.0.0.110:10000 get pod --show-labels

NAME READY STATUS RESTARTS AGE LABELS

mysql-3158564321-657d6 1/1 Running 0 6m app=mysql,pod-template-hash=3158564321
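The `--show-labels` listing makes it easy to check that the pod's labels satisfy the Service's `selector`. An equality-based selector picks every pod whose labels contain all of the selector's key/value pairs; extra labels such as `pod-template-hash` do not matter. A small sketch of that rule, with hypothetical helper names `parse_labels` and `selector_matches`:

```python
def parse_labels(text):
    """Turn 'app=mysql,pod-template-hash=3158564321' into a dict."""
    return dict(pair.split("=", 1) for pair in text.split(",") if pair)


def selector_matches(selector, labels):
    """True if every key/value pair in the selector is present in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


pod_labels = parse_labels("app=mysql,pod-template-hash=3158564321")
print(selector_matches({"app": "mysql"}, pod_labels))   # True
print(selector_matches({"app": "tomcat"}, pod_labels))  # False
```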

[root@k8s-master ~/k8s_yaml]# kubectl -s http://10.0.0.110:10000 create -f k8s_svc_mysql.yaml

service "mysql" created

[root@k8s-master ~/k8s_yaml]# kubectl -s http://10.0.0.110:10000 get svc mysql -o wide

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

mysql 10.100.223.213 <none> 3306/TCP 1m app=mysql

4. Tomcat Deployment resource file

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: tomcat
spec:
  replicas: 3
  minReadySeconds: 30
  template:
    metadata:
      labels:
        app: tomcat
    spec:
      containers:
      - name: tomcat
        image: 10.0.0.140:5000/tomcat-app:v2
        ports:
        - containerPort: 8080
        env:
        - name: MYSQL_SERVICE_HOST
          value: '10.100.223.213'
        - name: MYSQL_SERVICE_PORT
          value: '3306'

[root@k8s-master ~/k8s_yaml]# vim k8s_deploy_tomcat.yaml

[root@k8s-master ~/k8s_yaml]# kubectl -s http://10.0.0.110:10000 create -f k8s_deploy_tomcat.yaml

deployment "tomcat" created

[root@k8s-master ~/k8s_yaml]# kubectl -s http://10.0.0.110:10000 get deploy

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

mysql 1 1 1 1 32m

tomcat 3 3 3 1 1m

[root@k8s-master ~/k8s_yaml]# kubectl -s http://10.0.0.110:10000 get rs -o wide

NAME DESIRED CURRENT READY AGE CONTAINER(S) IMAGE(S) SELECTOR

mysql-3158564321 1 1 1 32m mysql 10.0.0.140:5000/mysql:5.7 app=mysql,pod-template-hash=3158564321

tomcat-2362769103 3 3 3 1m tomcat 10.0.0.140:5000/tomcat-app:v2 app=tomcat,pod-template-hash=2362769103

[root@k8s-master ~/k8s_yaml]# kubectl -s http://10.0.0.110:10000 get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

mysql-3158564321-657d6 1/1 Running 0 35m 192.168.7.2 10.0.0.130

tomcat-2362769103-8dkqj 1/1 Running 0 3m 192.168.16.2 10.0.0.120

tomcat-2362769103-cs5gw 1/1 Running 0 3m 192.168.16.3 10.0.0.120

tomcat-2362769103-znvb1 1/1 Running 0 3m 192.168.7.3 10.0.0.130

5. Tomcat Service resource file

apiVersion: v1
kind: Service
metadata:
  name: tomcat
spec:
  type: NodePort
  ports:
  - port: 8080
    targetPort: 8080
    nodePort: 8080
  selector:
    app: tomcat

[root@k8s-master ~/k8s_yaml]# vim k8s_svc_tomcat.yaml

[root@k8s-master ~/k8s_yaml]# kubectl -s http://10.0.0.110:10000 create -f k8s_svc_tomcat.yaml

service "tomcat" created
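`nodePort: 8080` is accepted only if the API server's NodePort range includes it. The default range is 30000-32767, so this cluster is presumably running with a widened `--service-node-port-range` (an assumption, since the API server configuration is not shown in this section). A quick sanity check along these lines can be run before writing a manifest; the helper names are hypothetical and the `1-65535` range is purely illustrative.

```python
def parse_port_range(text):
    """Parse a 'low-high' range string into a pair of integers."""
    low, high = text.split("-", 1)
    return int(low), int(high)


def node_port_allowed(port, port_range="30000-32767"):
    """True if `port` falls inside the inclusive NodePort range."""
    low, high = parse_port_range(port_range)
    return low <= port <= high


print(node_port_allowed(8080))             # False with the default range
print(node_port_allowed(8080, "1-65535"))  # True with a widened range
```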

[root@k8s-master ~/k8s_yaml]# kubectl -s http://10.0.0.110:10000 get pod --show-labels

NAME READY STATUS RESTARTS AGE LABELS

mysql-3158564321-657d6 1/1 Running 0 42m app=mysql,pod-template-hash=3158564321

tomcat-2362769103-8dkqj 1/1 Running 0 11m app=tomcat,pod-template-hash=2362769103

tomcat-2362769103-cs5gw 1/1 Running 0 11m app=tomcat,pod-template-hash=2362769103

tomcat-2362769103-znvb1 1/1 Running 0 11m app=tomcat,pod-template-hash=2362769103

[root@k8s-master ~/k8s_yaml]# kubectl -s http://10.0.0.110:10000 get svc -o wide

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

kubernetes 10.100.0.1 <none> 443/TCP 1h <none>

mysql 10.100.223.213 <none> 3306/TCP 32m app=mysql

tomcat 10.100.66.64 <nodes> 8080:8080/TCP 1m app=tomcat

Verification

[root@k8s-master ~/k8s_yaml]# kubectl -s http://10.0.0.110:10000 exec -it mysql-3158564321-657d6 /bin/bash

root@mysql-3158564321-657d6:/# mysql -uroot -p123456

......

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| HPE_APP |

| mysql |

| performance_schema |

| sys |

+--------------------+

5 rows in set (0.00 sec)

mysql> use HPE_APP;

mysql> show tables;

+-------------------+

| Tables_in_HPE_APP |

+-------------------+

| T_USERS |

+-------------------+

1 row in set (0.00 sec)

mysql> select * from T_USERS;

+----+-----------+-------+

| ID | USER_NAME | LEVEL |

+----+-----------+-------+

| 1 | me | 100 |

| 2 | our team | 100 |

| 3 | HPE | 100 |

| 4 | teacher | 100 |

| 5 | docker | 100 |

| 6 | google | 100 |

+----+-----------+-------+

6 rows in set (0.00 sec)

mysql> select * from T_USERS order by ID limit 6,3;

+----+-----------+-------+

| ID | USER_NAME | LEVEL |

+----+-----------+-------+

| 10 | aspen | 101 |

| 11 | young | 102 |

| 12 | han | 103 |

+----+-----------+-------+

3 rows in set (0.00 sec)
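In the last query, `limit 6,3` means "skip the first 6 rows, then return 3", so the result starts at the seventh row in ID order, which here has ID 10. The same offset/count form can be tried without a cluster using Python's built-in `sqlite3` module. Note that this swaps MySQL for SQLite, which accepts the same `LIMIT offset, count` syntax, and that the table is rebuilt by hand from the rows shown above.

```python
import sqlite3

# In-memory stand-in for the HPE_APP.T_USERS table shown above.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE T_USERS (ID INTEGER PRIMARY KEY, USER_NAME TEXT, LEVEL INTEGER)"
)
rows = [
    (1, "me", 100), (2, "our team", 100), (3, "HPE", 100),
    (4, "teacher", 100), (5, "docker", 100), (6, "google", 100),
    (10, "aspen", 101), (11, "young", 102), (12, "han", 103),
]
conn.executemany("INSERT INTO T_USERS VALUES (?, ?, ?)", rows)

# LIMIT 6,3: offset 6, then at most 3 rows.
page = conn.execute("SELECT * FROM T_USERS ORDER BY ID LIMIT 6,3").fetchall()
print(page)  # [(10, 'aspen', 101), (11, 'young', 102), (12, 'han', 103)]
```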