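I. DNS Service

In a k8s cluster, the DNS service resolves Service resource names to their Cluster IP addresses.

Note: after the DNS service is deployed, existing Pods do not pick up the DNS settings; only newly created Pods use the DNS service.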

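1. Install the DNS service

DNS component images and configuration files (extraction code: 7t91)

Prepare the images

General syntax:

docker load -i <image archive>
docker tag <image name>:<version> <registry address>:<port>/<image name>:<version>
docker push <registry address>:<port>/<image name>:<version>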
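The tag and push steps above repeat for every image, so they can be generated in a loop. The following is a minimal sketch and not part of the original setup: `retag_commands` is a hypothetical helper that only prints the `docker tag` and `docker push` commands for review. It assumes the images have already been loaded with `docker load`, and that the target name is the upstream name with its registry path stripped.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not from the original article): print the tag/push
# commands needed to copy already-loaded images into a private registry.
retag_commands() {
  local registry="$1"
  shift
  local image name
  for image in "$@"; do
    name="${image##*/}"   # drop the upstream registry path, keep name:version
    echo "docker tag ${image} ${registry}/${name}"
    echo "docker push ${registry}/${name}"
  done
}

# Dry run: review the printed commands, then pipe them to `sh` to execute.
retag_commands 10.0.0.140:5000 \
  gcr.io/google_containers/kubedns-amd64:1.9 \
  gcr.io/google_containers/kube-dnsmasq-amd64:1.4 \
  gcr.io/google_containers/dnsmasq-metrics-amd64:1.0 \
  gcr.io/google_containers/exechealthz-amd64:1.2
```

Printing the commands instead of running them keeps the sketch safe to try on a machine without the images; the registry address and image names are the ones used in this walkthrough.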

[root@k8s-registry ~]# docker load -i docker_k8s_dns.tar.gz

......

Loaded image: gcr.io/google_containers/kubedns-amd64:1.9

......

Loaded image: gcr.io/google_containers/kube-dnsmasq-amd64:1.4

......

Loaded image: gcr.io/google_containers/dnsmasq-metrics-amd64:1.0

......

Loaded image: gcr.io/google_containers/exechealthz-amd64:1.2

[root@k8s-registry ~]# docker tag gcr.io/google_containers/kubedns-amd64:1.9 10.0.0.140:5000/kubedns-amd64:1.9

[root@k8s-registry ~]# docker tag gcr.io/google_containers/kube-dnsmasq-amd64:1.4 10.0.0.140:5000/kube-dnsmasq-amd64:1.4

[root@k8s-registry ~]# docker tag gcr.io/google_containers/dnsmasq-metrics-amd64:1.0 10.0.0.140:5000/dnsmasq-metrics-amd64:1.0

[root@k8s-registry ~]# docker tag gcr.io/google_containers/exechealthz-amd64:1.2 10.0.0.140:5000/exechealthz-amd64:1.2

[root@k8s-registry ~]# docker push 10.0.0.140:5000/exechealthz-amd64:1.2

......

1.2: digest: sha256:34722333f0cd0b891b61c9e0efa31913f22157e341a3aabb79967305d4e78260 size: 1358

[root@k8s-registry ~]# docker push 10.0.0.140:5000/dnsmasq-metrics-amd64:1.0

......

1.0: digest: sha256:4767af0aee3355cdac7abfe0d7ac1432492f088e7fcf07128d38cd2f23268638 size: 1357

[root@k8s-registry ~]# docker push 10.0.0.140:5000/kube-dnsmasq-amd64:1.4

......

1.4: digest: sha256:f7590551a628ec30ab47f188040f51c61e53e969a19ef44846754ba376b4ce21 size: 1563

[root@k8s-registry ~]# docker push 10.0.0.140:5000/kubedns-amd64:1.9

......

1.9: digest: sha256:df5392e5c76d8519301d1d2ee582453fd9185572bcd44dc0da466b9ab220c985 size: 945

Prepare the configuration files

# skydns Deployment resource
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: kube-dns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
spec:
  replicas: 2
  strategy:   # rolling-update strategy of the Deployment
    rollingUpdate:
      maxSurge: 10%
      maxUnavailable: 0
  selector:
    matchLabels:
      k8s-app: kube-dns
  template:
    metadata:
      labels:
        k8s-app: kube-dns
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
        scheduler.alpha.kubernetes.io/tolerations: '[{"key":"CriticalAddonsOnly", "operator":"Exists"}]'
    spec:
      nodeName: 10.0.0.130   # schedule the Pod onto the specified node
      # shown only to demonstrate the parameter; not recommended in practice
      containers:
      - name: kubedns
        image: 10.0.0.140:5000/kubedns-amd64:1.9
        resources:
          limits:
            memory: 308Mi
          requests:
            cpu: 100m
            memory: 200Mi
        livenessProbe:
          # liveness check
          httpGet:
            path: /healthz-kubedns
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        readinessProbe:
          # readiness check
          httpGet:
            path: /readiness
            port: 8081
            scheme: HTTP
          initialDelaySeconds: 3
          timeoutSeconds: 5
        args:
        - --domain=cluster.local
        - --dns-port=10053
        - --config-map=kube-dns
        - --kube-master-url=http://10.0.0.110:10000   # address of the apiServer
        - --v=0
        env:
        - name: PROMETHEUS_PORT
          value: "10055"
        ports:
        - containerPort: 10053
          name: dns-local
          protocol: UDP
        - containerPort: 10053
          name: dns-tcp-local
          protocol: TCP
        - containerPort: 10055
          name: metrics
          protocol: TCP
      - name: dnsmasq
        image: 10.0.0.140:5000/kube-dnsmasq-amd64:1.4
        livenessProbe:
          httpGet:
            path: /healthz-dnsmasq
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        args:
        - --cache-size=1000
        - --no-resolv
        - --server=127.0.0.1#10053
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        resources:
          requests:
            cpu: 150m
            memory: 10Mi
      - name: dnsmasq-metrics
        image: 10.0.0.140:5000/dnsmasq-metrics-amd64:1.0
        livenessProbe:
          httpGet:
            path: /metrics
            port: 10054
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        args:
        - --v=2
        - --logtostderr
        ports:
        - containerPort: 10054
          name: metrics
          protocol: TCP
        resources:
          requests:
            memory: 10Mi
      - name: healthz
        image: 10.0.0.140:5000/exechealthz-amd64:1.2
        resources:
          limits:
            memory: 50Mi
          requests:
            cpu: 10m
            memory: 50Mi
        args:
        - --cmd=nslookup kubernetes.default.svc.cluster.local 127.0.0.1 >/dev/null
        - --url=/healthz-dnsmasq
        - --cmd=nslookup kubernetes.default.svc.cluster.local 127.0.0.1:10053 >/dev/null
        - --url=/healthz-kubedns
        - --port=8080
        - --quiet
        ports:
        - containerPort: 8080
          protocol: TCP
      dnsPolicy: Default

[root@k8s-master ~]# mkdir k8s_yaml/dns && cd k8s_yaml/dns

[root@k8s-master ~/k8s_yaml/dns]# kubectl -s http://10.0.0.110:10000 get node

NAME STATUS AGE

10.0.0.120 Ready 23h

10.0.0.130 Ready 23h

[root@k8s-master ~/k8s_yaml/dns]# cat skydns-deploy.yml

[root@k8s-master ~/k8s_yaml/dns]# kubectl -s http://10.0.0.110:10000 create -f skydns-deploy.yml

deployment "kube-dns" created

[root@k8s-master ~/k8s_yaml/dns]# kubectl -s http://10.0.0.110:10000 get all -o wide -n kube-system

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/kube-dns 2 2 2 2 34s

NAME DESIRED CURRENT READY AGE CONTAINER(S) IMAGE(S) SELECTOR

rs/kube-dns-2134347918 2 2 2 34s kubedns,dnsmasq,dnsmasq-metrics,healthz 10.0.0.140:5000/kubedns-amd64:1.9,10.0.0.140:5000/kube-dnsmasq-amd64:1.4,10.0.0.140:5000/dnsmasq-metrics-amd64:1.0,10.0.0.140:5000/exechealthz-amd64:1.2 k8s-app=kube-dns,pod-template-hash=2134347918

NAME READY STATUS RESTARTS AGE IP NODE

po/kube-dns-2134347918-d78jc 4/4 Running 0 34s 192.168.7.3 10.0.0.130

po/kube-dns-2134347918-nvd49 4/4 Running 0 34s 192.168.7.2 10.0.0.130

[root@k8s-master ~/k8s_yaml/dns]# kubectl -s http://10.0.0.110:10000 get all -o wide

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

svc/kubernetes 10.100.0.1 <none> 443/TCP 21h <none>

# skydns Service resource
apiVersion: v1
kind: Service
metadata:
  name: kube-dns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: "KubeDNS"
spec:
  selector:
    k8s-app: kube-dns
  clusterIP: 10.100.1.1
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
    protocol: TCP

[root@k8s-master ~/k8s_yaml/dns]# cat skydns-svc.yml

[root@k8s-master ~/k8s_yaml/dns]# kubectl -s http://10.0.0.110:10000 get deploy -n kube-system --show-labels

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE LABELS

kube-dns 2 2 2 2 13m k8s-app=kube-dns,kubernetes.io/cluster-service=true

[root@k8s-master ~/k8s_yaml/dns]# kubectl -s http://10.0.0.110:10000 get pod -n kube-system --show-labels

NAME READY STATUS RESTARTS AGE LABELS

kube-dns-2134347918-d78jc 4/4 Running 0 13m k8s-app=kube-dns,pod-template-hash=2134347918

kube-dns-2134347918-nvd49 4/4 Running 0 13m k8s-app=kube-dns,pod-template-hash=2134347918

[root@k8s-master ~/k8s_yaml/dns]# kubectl -s http://10.0.0.110:10000 create -f skydns-svc.yml

service "kube-dns" created

[root@k8s-master ~/k8s_yaml/dns]# kubectl -s http://10.0.0.110:10000 get svc -n kube-system -o wide

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

kube-dns 10.100.1.1 <none> 53/UDP,53/TCP 56s k8s-app=kube-dns

[root@k8s-master ~/k8s_yaml/dns]# kubectl -s http://10.0.0.110:10000 get all -n kube-system

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/kube-dns 2 2 2 2 16m

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc/kube-dns 10.100.1.1 <none> 53/UDP,53/TCP 26s

NAME DESIRED CURRENT READY AGE

rs/kube-dns-2134347918 2 2 2 16m

NAME READY STATUS RESTARTS AGE

po/kube-dns-2134347918-d78jc 4/4 Running 0 16m

po/kube-dns-2134347918-nvd49 4/4 Running 0 16m

2. Modify the kubelet parameters

The kubelet configuration file must be changed on every node.

# /etc/kubernetes/kubelet
KUBELET_ARGS="--cluster_dns=<IP address> --cluster_domain=cluster.local"   # Cluster IP address of the cluster's DNS service

[root@k8s-node01 ~]# tail -2 /etc/kubernetes/kubelet
# Add your own!
KUBELET_ARGS="--cluster_dns=10.100.1.1 --cluster_domain=cluster.local"

[root@k8s-node02 ~]# tail -2 /etc/kubernetes/kubelet
# Add your own!
KUBELET_ARGS="--cluster_dns=10.100.1.1 --cluster_domain=cluster.local"

Then restart kubelet on each node:

systemctl restart kubelet.service

[root@k8s-node01 ~]# systemctl restart kubelet.service
[root@k8s-node02 ~]# systemctl restart kubelet.service

3. Verify the DNS service

[root@k8s-master ~]# cd k8s_yaml/

[root@k8s-master ~/k8s_yaml]# mkdir tomcat+mysql

[root@k8s-master ~/k8s_yaml]# mv *.yaml ./tomcat+mysql/

[root@k8s-master ~/k8s_yaml/tomcat+mysql]# ls

k8s_deploy_mysql.yaml k8s_deploy_tomcat.yaml k8s_svc_mysql.yaml k8s_svc_tomcat.yaml

[root@k8s-master ~/k8s_yaml/tomcat+mysql]# tail -5 k8s_deploy_tomcat.yaml

env:

- name: MYSQL_SERVICE_HOST

value: 'mysql'

- name: MYSQL_SERVICE_PORT

value: '3306'

[root@k8s-master ~/k8s_yaml/tomcat+mysql]# kubectl -s http://10.0.0.110:10000 get all

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc/kubernetes 10.100.0.1 <none> 443/TCP 22h

[root@k8s-master ~/k8s_yaml/tomcat+mysql]# kubectl -s http://10.0.0.110:10000 create -f .

deployment "mysql" created

deployment "tomcat" created

service "mysql" created

service "tomcat" created

[root@k8s-master ~/k8s_yaml/tomcat+mysql]# curl -sI 10.0.0.120:8080/demo/ | sed -n 1,2p

HTTP/1.1 200 OK

Server: Apache-Coyote/1.1

[root@k8s-master ~/k8s_yaml/tomcat+mysql]# kubectl -s http://10.0.0.110:10000 get all -o wide

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/mysql 1 1 1 1 5m

deploy/tomcat 3 3 3 3 5m

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

svc/kubernetes 10.100.0.1 <none> 443/TCP 23h <none>

svc/mysql 10.100.117.159 <none> 3306/TCP 5m app=mysql

svc/tomcat 10.100.229.229 <nodes> 8080:8080/TCP 5m app=tomcat

NAME DESIRED CURRENT READY AGE CONTAINER(S) IMAGE(S) SELECTOR

rs/mysql-3158564321 1 1 1 5m mysql 10.0.0.140:5000/mysql:5.7 app=mysql,pod-template-hash=3158564321

rs/tomcat-627834460 3 3 3 5m mysql 10.0.0.140:5000/tomcat-app:v2 app=tomcat,pod-template-hash=627834460

NAME READY STATUS RESTARTS AGE IP NODE

po/mysql-3158564321-r31zd 1/1 Running 0 5m 192.168.16.2 10.0.0.120

po/tomcat-627834460-0slgc 1/1 Running 0 5m 192.168.16.3 10.0.0.120

po/tomcat-627834460-6bdcg 1/1 Running 0 5m 192.168.7.4 10.0.0.130

po/tomcat-627834460-mxb3r 1/1 Running 0 5m 192.168.16.4 10.0.0.120

[root@k8s-master ~/k8s_yaml/tomcat+mysql]# kubectl -s http://10.0.0.110:10000 exec -it tomcat-627834460-0slgc /bin/bash

root@tomcat-627834460-0slgc:/usr/local/tomcat# cat /etc/resolv.conf

search default.svc.cluster.local svc.cluster.local cluster.local

nameserver 10.100.1.1

nameserver 10.0.0.254

options ndots:5
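The `search` list and `ndots:5` option shown in this resolv.conf decide which fully qualified names are tried for a short name such as `mysql`. The sketch below lists the candidates in order, assuming glibc-style resolver behaviour (a name with fewer dots than `ndots` goes through the search list first; otherwise it is tried as-is first). `candidate_names` is a hypothetical helper written for illustration, and a real resolver stops at the first name that resolves.

```shell
#!/usr/bin/env bash
# Sketch of the lookup order a glibc-style resolver uses for a name,
# given the ndots option and the search list from /etc/resolv.conf.
candidate_names() {
  local name="$1" ndots="$2"
  shift 2
  local dots domain
  # Count the dots in the name.
  dots=$(( $(printf '%s' "$name" | tr -cd '.' | wc -c) ))
  # Enough dots: the name is tried as-is before the search list.
  if [ "$dots" -ge "$ndots" ]; then echo "$name"; fi
  for domain in "$@"; do
    echo "${name}.${domain}"
  done
  # Too few dots: the bare name is tried only after the search list.
  if [ "$dots" -lt "$ndots" ]; then echo "$name"; fi
}

candidate_names mysql 5 default.svc.cluster.local svc.cluster.local cluster.local
```

With the values from this Pod, the first candidate is `mysql.default.svc.cluster.local`, which is the name of the mysql Service in the default namespace.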

root@tomcat-627834460-0slgc:/usr/local/tomcat# ping baidu.com -c1

PING baidu.com (220.181.38.148): 56 data bytes

64 bytes from 220.181.38.148: icmp_seq=0 ttl=127 time=8.948 ms

--- baidu.com ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max/stddev = 8.948/8.948/8.948/0.000 ms

root@tomcat-627834460-0slgc:/usr/local/tomcat# ping mysql -c1 -w1

PING mysql.default.svc.cluster.local (10.100.117.159): 56 data bytes

--- mysql.default.svc.cluster.local ping statistics ---

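1 packets transmitted, 0 packets received, 100% packet loss

The name mysql resolves to mysql.default.svc.cluster.local (10.100.117.159), the Cluster IP of the mysql Service, so DNS resolution works. The ping itself gets no reply because a Cluster IP is a virtual address that typically does not answer ICMP.

II. Namespace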

The namespace resource of a k8s cluster provides resource isolation within the cluster.

kubectl get namespace                     # list the namespaces in the current k8s cluster
kubectl get all -n <namespace>            # list all resources in the given namespace
kubectl get all --namespace <namespace>   # same as above, using the long option
kubectl create namespace <namespace>      # create a namespace

[root@k8s-master ~]# kubectl -s http://10.0.0.110:10000 get namespace

NAME STATUS AGE

default Active 361d

kube-system Active 361d

# Setting the namespace attribute on a resource
apiVersion: <version>
kind: <resource type>
metadata:
  namespace: <name>
  name: <name>
spec: ......

# Tomcat + MySQL example

[root@k8s-master ~]# cd k8s_yaml/tomcat+mysql/

[root@k8s-master ~/k8s_yaml/tomcat+mysql]# sed -i '3a \ \ namespace: tomcat' *

[root@k8s-master ~/k8s_yaml/tomcat+mysql]# head -5 *

==> k8s_deploy_mysql.yaml <==
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  namespace: tomcat
  name: mysql

==> k8s_deploy_tomcat.yaml <==
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  namespace: tomcat
  name: tomcat

==> k8s_svc_mysql.yaml <==
apiVersion: v1
kind: Service
metadata:
  namespace: tomcat
  name: mysql

==> k8s_svc_tomcat.yaml <==
apiVersion: v1
kind: Service
metadata:
  namespace: tomcat
  name: tomcat

[root@k8s-master ~/k8s_yaml/tomcat+mysql]# kubectl -s http://10.0.0.110:10000 create namespace tomcat

namespace "tomcat" created

[root@k8s-master ~/k8s_yaml/tomcat+mysql]# kubectl -s http://10.0.0.110:10000 get namespace

NAME STATUS AGE

default Active 361d

kube-system Active 361d

tomcat Active 10s

[root@k8s-master ~/k8s_yaml/tomcat+mysql]# kubectl -s http://10.0.0.110:10000 create -f .

deployment "mysql" created

deployment "tomcat" created

service "mysql" created

service "tomcat" created

[root@k8s-master ~/k8s_yaml/tomcat+mysql]# kubectl -s http://10.0.0.110:10000 get all -n tomcat

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/mysql 1 1 1 0 13s

deploy/tomcat 3 3 3 0 13s

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc/mysql 10.100.82.28 <none> 3306/TCP 13s

svc/tomcat 10.100.30.178 <nodes> 8080:8080/TCP 13s

NAME DESIRED CURRENT READY AGE

rs/mysql-3158564321 1 1 1 13s

rs/tomcat-627834460 3 3 3 13s

NAME READY STATUS RESTARTS AGE

po/mysql-3158564321-xxs1g 1/1 Running 0 13s

po/tomcat-627834460-ft2jh 1/1 Running 0 13s

po/tomcat-627834460-j64q0 1/1 Running 0 13s

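po/tomcat-627834460-vkssq 1/1 Running 0 13s

III. Health Checks

1. Types of probes

- livenessProbe (liveness check): periodically checks whether the service is alive; if the check fails, the container is restarted.

- readinessProbe (readiness check): periodically checks whether the service is available; if it is not, the Pod is removed from the Service's endpoints.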

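apiVersion: <version>
kind: <resource type>
metadata:
  name: <name>
spec:
  replicas: n
  template:
    metadata:
      labels:
        app: <name>
    spec:
      containers:
      - name: <name>
        image: <registry>:<port>/<image name>:<version>
        livenessProbe:
          <probe method>:
            ......
          initialDelaySeconds: n   # delay before the first liveness check (seconds)
          periodSeconds: n         # interval between liveness checks (seconds)
          timeoutSeconds: n        # liveness check timeout (seconds)
          successThreshold: n      # liveness check success threshold (count)
          failureThreshold: n      # liveness check failure threshold (count)
        readinessProbe:
          <probe method>:
            ......
          initialDelaySeconds: n   # delay before the first readiness check (seconds)
          periodSeconds: n         # interval between readiness checks (seconds)
          timeoutSeconds: n        # readiness check timeout (seconds)
          successThreshold: n      # readiness check success threshold (count)
          failureThreshold: n      # readiness check failure threshold (count)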

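2. Probe methods

- exec: runs a command in the container; exit code 0 means success, non-zero means failure.

- httpGet: checks the status code returned by an HTTP request (2xx and 3xx are healthy; 4xx and 5xx are errors). This is used most often in practice, since web services are well suited to running on a k8s cluster.

- tcpSocket: tests whether a port accepts connections. This is used less often in practice, because an open port does not guarantee that the service is working.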
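The httpGet rule in the list above can be written as a small function. This is only an illustrative sketch: `http_probe_verdict` is a hypothetical name, and the sketch mirrors the status-code rule without performing a real request.

```shell
#!/usr/bin/env bash
# Sketch: classify an HTTP status code the way the httpGet rule describes.
# Codes from 200 up to (but not including) 400 count as success.
http_probe_verdict() {
  local code="$1"
  if [ "$code" -ge 200 ] && [ "$code" -lt 400 ]; then
    echo "success"
  else
    echo "failure"
  fi
}

http_probe_verdict 200   # e.g. the probed page is served
http_probe_verdict 404   # e.g. the probed page is missing
```

In the httpGet liveness example later in this section, deleting index.html makes nginx return 404, which falls into the failure branch.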

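3. Core parameters

- initialDelaySeconds

Initial delay before probing: the probe starts n seconds after the container has started. This prevents a slow-starting service from failing its health check and then being restarted in an endless loop, so initialDelaySeconds must be longer than the time the service in the Pod needs to start completely.

- periodSeconds

Probe period: the interval between probe executions.

- timeoutSeconds

Probe timeout: the maximum time the probe waits for a result; exceeding this limit counts as a failure. The default is 1.

- successThreshold

Success threshold: the number of successful checks required for the probe to be considered successful. The default is 1.

- failureThreshold

Failure threshold: the number of failed checks required for the probe to be considered failed. The default is 3.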
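How failureThreshold interacts with individual probe results can be sketched as a counter of consecutive failures. The helper below is hypothetical (`first_unhealthy_probe` is not a kubectl or kubelet command) and only simulates the counting; it assumes, as in Kubernetes, that a successful probe resets the failure count.

```shell
#!/usr/bin/env bash
# Sketch: given a failure threshold and a sequence of probe results
# ("ok" or "fail"), print the 1-based index of the probe at which the
# container would be declared unhealthy, or "none" if that never happens.
first_unhealthy_probe() {
  local threshold="$1"
  shift
  local streak=0 n=0 result
  for result in "$@"; do
    n=$((n + 1))
    if [ "$result" = "ok" ]; then
      streak=0                 # a success resets the consecutive-failure count
    else
      streak=$((streak + 1))
    fi
    if [ "$streak" -ge "$threshold" ]; then
      echo "$n"
      return 0
    fi
  done
  echo "none"
}

first_unhealthy_probe 3 ok fail fail ok fail fail fail   # prints 7
```

With periodSeconds set to p, three consecutive failures take roughly 2p to 3p seconds to accumulate, which is the delay between a service breaking and its container being restarted.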

4. livenessProbe

exec

apiVersion: v1
kind: Pod
metadata:
  name: exec-liveness
spec:
  containers:
  - name: nginx
    image: 10.0.0.140:5000/nginx:1.13
    ports:
    - containerPort: 80
    args:
    - /bin/sh
    - -c
    - touch /tmp/healthy; sleep 30; rm -rf /tmp/healthy; sleep 600;
    livenessProbe:
      exec:
        command:
        - cat
        - /tmp/healthy
      initialDelaySeconds: 5
      periodSeconds: 5
      timeoutSeconds: 3
      successThreshold: 1
      failureThreshold: 3

[root@k8s-master ~/k8s_yaml/tomcat+mysql]# mkdir ~/k8s_yaml/healthy && cd ../healthy

[root@k8s-master ~/k8s_yaml/healthy]# cat liveness-exec.yaml

[root@k8s-master ~/k8s_yaml/healthy]# kubectl -s http://10.0.0.110:10000 create -f liveness-exec.yaml

pod "exec-liveness" created

[root@k8s-master ~/k8s_yaml/healthy]# kubectl -s http://10.0.0.110:10000 describe pod exec-liveness | tail -5

1m 17s 2 {kubelet 10.0.0.120} spec.containers{nginx} Normal Pulled Container image "10.0.0.140:5000/nginx:1.13" already present on machine

17s 17s 1 {kubelet 10.0.0.120} spec.containers{nginx} Normal Killing Killing container with docker id ca8c9ba97501: pod "exec-liveness_default(800f963c-e2c1-11eb-9de1-000c29870b5c)" container "nginx" is unhealthy, it will be killed and re-created.

17s 17s 1 {kubelet 10.0.0.120} spec.containers{nginx} Normal Created Created container with docker id c30a501185be; Security:[seccomp=unconfined]

17s 17s 1 {kubelet 10.0.0.120} spec.containers{nginx} Normal Started Started container with docker id c30a501185be

[root@k8s-master ~/k8s_yaml/healthy]# kubectl -s http://10.0.0.110:10000 get pod

NAME READY STATUS RESTARTS AGE

exec-liveness 0/1 CrashLoopBackOff 17 53m

httpget-liveness 1/1 Running 1 36m

test-2416581181-9fqr6 2/2 Running 22 236d

test-2416581181-jzl5l 2/2 Running 22 236d

httpGet

apiVersion: v1
kind: Pod
metadata:
  name: httpget-liveness
spec:
  containers:
  - name: nginx
    image: 10.0.0.140:5000/nginx:1.13
    ports:
    - containerPort: 80
    livenessProbe:
      httpGet:
        path: /index.html
        port: 80
      initialDelaySeconds: 5
      periodSeconds: 5

[root@k8s-master ~/k8s_yaml/healthy]# cat liveness-httpget.yaml

[root@k8s-master ~/k8s_yaml/healthy]# kubectl -s http://10.0.0.110:10000 create -f liveness-httpget.yaml

pod "httpget-liveness" created

[root@k8s-master ~/k8s_yaml/healthy]# kubectl -s http://10.0.0.110:10000 describe pod httpget-liveness | grep Liveness

Liveness: http-get http://:80/index.html delay=5s timeout=1s period=5s #success=1 #failure=3   # default thresholds

[root@k8s-master ~/k8s_yaml/healthy]# kubectl -s http://10.0.0.110:10000 describe pod httpget-liveness | tail -4

33m 33m 1 {default-scheduler } Normal Scheduled Successfully assigned httpget-liveness to 10.0.0.120

33m 33m 1 {kubelet 10.0.0.120} spec.containers{nginx} Normal Pulled Container image "10.0.0.140:5000/nginx:1.13" already present on machine

33m 33m 1 {kubelet 10.0.0.120} spec.containers{nginx} Normal Created Created container with docker id 102651b9f1c4; Security:[seccomp=unconfined]

33m 33m 1 {kubelet 10.0.0.120} spec.containers{nginx} Normal Started Started container with docker id 102651b9f1c4

[root@k8s-master ~/k8s_yaml/healthy]# kubectl -s http://10.0.0.110:10000 exec -it 102651b9f1c4 /bin/bash

Error from server (NotFound): pods "102651b9f1c4" not found

[root@k8s-master ~/k8s_yaml/healthy]# kubectl -s http://10.0.0.110:10000 exec -it httpget-liveness /bin/bash

root@httpget-liveness:/# rm -f /usr/share/nginx/html/index.html

root@httpget-liveness:/# exit

exit

[root@k8s-master ~/k8s_yaml/healthy]# kubectl -s http://10.0.0.110:10000 describe pod httpget-liveness | tail -4

22s 12s 3 {kubelet 10.0.0.120} spec.containers{nginx} Warning Unhealthy Liveness probe failed: HTTP probe failed with statuscode: 404

12s 12s 1 {kubelet 10.0.0.120} spec.containers{nginx} Normal Killing Killing container with docker id 102651b9f1c4: pod "httpget-liveness_default(f81d0b80-e2c3-11eb-9de1-000c29870b5c)" container "nginx" is unhealthy, it will be killed and re-created.

12s 12s 1 {kubelet 10.0.0.120} spec.containers{nginx} Normal Created Created container with docker id 7cca27d50894; Security:[seccomp=unconfined]

12s 12s 1 {kubelet 10.0.0.120} spec.containers{nginx} Normal Started Started container with docker id 7cca27d50894

tcpSocket

apiVersion: v1
kind: Pod
metadata:
  name: tcpsocket-liveness
spec:
  containers:
  - name: nginx
    image: 10.0.0.140:5000/nginx:1.13
    ports:
    - containerPort: 80
    args:
    - /bin/bash
    - -c
    - tail -f /etc/hosts
    livenessProbe:
      tcpSocket:
        port: 80
      initialDelaySeconds: 10
      periodSeconds: 3

[root@k8s-master ~/k8s_yaml/healthy]# cat liveness-socket.yml

[root@k8s-master ~/k8s_yaml/healthy]# kubectl -s http://10.0.0.110:10000 create -f liveness-socket.yml

pod "tcpsocket-liveness" created

[root@k8s-master ~/k8s_yaml/healthy]# kubectl -s http://10.0.0.110:10000 exec -it tcpsocket-liveness /bin/bash

root@tcpsocket-liveness:/# service nginx start

root@tcpsocket-liveness:/#

[root@k8s-master ~/k8s_yaml/healthy]# kubectl -s http://10.0.0.110:10000 describe pod httpget-liveness | tail -4

39m 39m 3 {kubelet 10.0.0.120} spec.containers{nginx} Warning Unhealthy Liveness probe failed: HTTP probe failed with statuscode: 404

39m 39m 1 {kubelet 10.0.0.120} spec.containers{nginx} Normal Killing Killing container with docker id 102651b9f1c4: pod "httpget-liveness_default(f81d0b80-e2c3-11eb-9de1-000c29870b5c)" container "nginx" is unhealthy, it will be killed and re-created.

39m 39m 1 {kubelet 10.0.0.120} spec.containers{nginx} Normal Created Created container with docker id 7cca27d50894; Security:[seccomp=unconfined]

39m 39m 1 {kubelet 10.0.0.120} spec.containers{nginx} Normal Started Started container with docker id 7cca27d50894

5. readinessProbe

httpGet

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: httpget-readiness
spec:
  replicas: 4
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: 10.0.0.140:5000/nginx:1.13
        ports:
        - containerPort: 80
        readinessProbe:
          httpGet:
            path: /Young.html
            port: 80
          initialDelaySeconds: 5
          periodSeconds: 3
          timeoutSeconds: 1

[root@k8s-master ~/k8s_yaml/healthy]# cat readiness-httpGet.yml

[root@k8s-master ~/k8s_yaml/healthy]# kubectl -s http://10.0.0.110:10000 create -f readiness-httpGet.yml

deployment "httpget-readiness" created

[root@k8s-master ~/k8s_yaml/healthy]# kubectl -s http://10.0.0.110:10000 get deployment

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

httpget-readiness 4 4 4 0 1m

test 2 2 2 2 236d

[root@k8s-master ~/k8s_yaml/healthy]# kubectl -s http://10.0.0.110:10000 get pod | grep readiness

httpget-readiness-1081103140-bg527 0/1 Running 0 1m

httpget-readiness-1081103140-npwbw 0/1 Running 0 1m

httpget-readiness-1081103140-qk0m8 0/1 Running 0 1m

httpget-readiness-1081103140-w9986 0/1 Running 0 1m

[root@k8s-master ~/k8s_yaml/healthy]# kubectl -s http://10.0.0.110:10000 exec -it httpget-readiness-1081103140-bg527 /bin/bash

root@httpget-readiness-1081103140-bg527:/# echo "Testing 123" >/usr/share/nginx/html/Young.html

root@httpget-readiness-1081103140-bg527:/# exit

[root@k8s-master ~/k8s_yaml/healthy]# kubectl -s http://10.0.0.110:10000 exec -it httpget-readiness-1081103140-npwbw /bin/bash

root@httpget-readiness-1081103140-npwbw:/# echo "Testing 123" >/usr/share/nginx/html/Young.html

root@httpget-readiness-1081103140-npwbw:/# exit

[root@k8s-master ~/k8s_yaml/healthy]# kubectl -s http://10.0.0.110:10000 get deployment

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

httpget-readiness 4 4 4 2 5m

test 2 2 2 2 236d

[root@k8s-master ~/k8s_yaml/healthy]# kubectl -s http://10.0.0.110:10000 get pod -o wide| grep readiness

httpget-readiness-1081103140-bg527 1/1 Running 0 7m 192.168.45.6 10.0.0.130

httpget-readiness-1081103140-npwbw 1/1 Running 0 7m 192.168.56.8 10.0.0.120

httpget-readiness-1081103140-qk0m8 0/1 Running 0 7m 192.168.45.7 10.0.0.130

httpget-readiness-1081103140-w9986 0/1 Running 0 7m 192.168.56.9 10.0.0.120

[root@k8s-master ~/k8s_yaml/healthy]# kubectl -s http://10.0.0.110:10000 expose deploy httpget-readiness --port=80 --name=readiness-httpget-svc

service "readiness-httpget-svc" exposed

[root@k8s-master ~/k8s_yaml/healthy]# kubectl -s http://10.0.0.110:10000 describe svc readiness-httpget-svc

Name: readiness-httpget-svc

Namespace: default

Labels: app=nginx

Selector: app=nginx

Type: ClusterIP

IP: 10.100.243.225

Port: 80/TCP

Endpoints: 192.168.45.6:80,192.168.56.8:80

Session Affinity: None

No events.

IV. Dashboard Service

Dashboard service image file (extraction code: 3lhv)

step 1 Upload and load the image

[root@k8s-registry ~]# rz -E

rz waiting to receive.

[root@k8s-registry ~]# ls kubernetes-dashboard-amd64_v1.4.1.tar.gz

kubernetes-dashboard-amd64_v1.4.1.tar.gz

[root@k8s-registry ~]# docker load -i kubernetes-dashboard-amd64_v1.4.1.tar.gz

5f70bf18a086: Loading layer 1.024 kB/1.024 kB

2e350fa8cbdf: Loading layer 86.96 MB/86.96 MB

Loaded image: index.tenxcloud.com/google_containers/kubernetes-dashboard-amd64:v1.4.1

step 2 Tag the image

[root@k8s-registry ~]# docker tag index.tenxcloud.com/google_containers/kubernetes-dashboard-amd64:v1.4.1 10.0.0.140:5000/kubernetes-dashboard-amd64:v1.4.1

[root@k8s-registry ~]# docker push 10.0.0.140:5000/kubernetes-dashboard-amd64:v1.4.1

The push refers to a repository [10.0.0.140:5000/kubernetes-dashboard-amd64]

5f70bf18a086: Mounted from kubedns-amd64

2e350fa8cbdf: Pushed

v1.4.1: digest: sha256:e446d645ff6e6b3147205c58258c2fb431105dc46998e4d742957623bf028014 size: 1147

step 3 Create the dashboard configuration files and apply them

# Deployment resource of the Dashboard service
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  # Keep the name in sync with image version and
  # gce/coreos/kube-manifests/addons/dashboard counterparts
  name: kubernetes-dashboard-latest
  namespace: kube-system
spec:
  replicas: 1
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
        version: latest
        kubernetes.io/cluster-service: "true"
    spec:
      containers:
      - name: kubernetes-dashboard
        image: 10.0.0.140:5000/kubernetes-dashboard-amd64:v1.4.1
        resources:
          # keep request = limit to keep this container in guaranteed class
          limits:
            cpu: 100m
            memory: 50Mi
          requests:
            cpu: 100m
            memory: 50Mi
        ports:
        - containerPort: 9090
        args:
        - --apiserver-host=http://10.0.0.110:10000
        livenessProbe:
          httpGet:
            path: /
            port: 9090
          initialDelaySeconds: 30
          timeoutSeconds: 30

# Service resource of the Dashboard service
apiVersion: v1
kind: Service
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
spec:
  selector:
    k8s-app: kubernetes-dashboard
  ports:
  - port: 80
    targetPort: 9090

[root@k8s-master ~/k8s_yaml/healthy]# mkdir ../dashboard && cd ../dashboard

[root@k8s-master ~/k8s_yaml/dashboard]# cat dashboard.yaml

[root@k8s-master ~/k8s_yaml/dashboard]# cat dashboard-svc.yaml

[root@k8s-master ~/k8s_yaml/dashboard]# kubectl -s http://10.0.0.110:10000 create -f .

service "kubernetes-dashboard" created

deployment "kubernetes-dashboard-latest" created

[root@k8s-master ~/k8s_yaml/dashboard]# kubectl -s http://10.0.0.110:10000 get all -n kube-system

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/kube-dns 2 2 2 2 237d

deploy/kubernetes-dashboard-latest 1 1 1 1 1m

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc/kube-dns 10.100.1.1 <none> 53/UDP,53/TCP 237d

svc/kubernetes-dashboard 10.100.154.244 <none> 80/TCP 1m

NAME DESIRED CURRENT READY AGE

rs/kube-dns-2134347918 2 2 2 237d

rs/kubernetes-dashboard-latest-2686154000 1 1 1 1m

NAME READY STATUS RESTARTS AGE

po/kube-dns-2134347918-d78jc 4/4 Running 44 237d

po/kube-dns-2134347918-nvd49 4/4 Running 44 237d

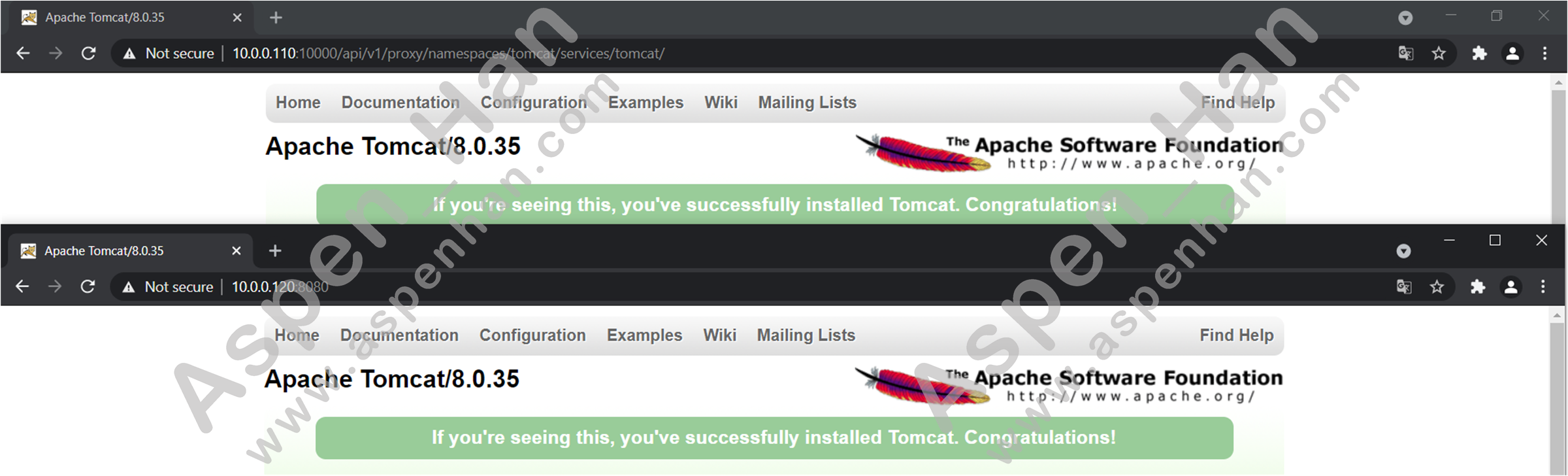

po/kubernetes-dashboard-latest-2686154000-11380 1/1 Running 0 1mstep 4 访问[ http://IP:Port/ui/ ]

IP为kube-apiserver服务所在主机的IP地址,Port为kube-apiserver服务所占用主机端口

|

通过apiServer反向代理访问Service: http://aprServer的IP地址/api/v1/proxy/namespaces/nameSpace名称/services/service名称 附:常用于访问type类型为ClusterIP的svc资源 |
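The proxy URL pattern above can be assembled mechanically. The following is a small sketch that follows the path layout given in the text, which reflects the old API version used in this walkthrough and may differ on newer clusters. `proxy_url` is a hypothetical helper name, and the example values are the apiServer address and Dashboard Service from this article.

```shell
#!/usr/bin/env bash
# Sketch: build the apiServer reverse-proxy URL for a Service,
# using the path layout described in the text.
proxy_url() {
  local apiserver="$1" namespace="$2" service="$3"
  echo "http://${apiserver}/api/v1/proxy/namespaces/${namespace}/services/${service}"
}

# Example: the Dashboard Service in kube-system behind the apiServer at 10.0.0.110:10000.
proxy_url 10.0.0.110:10000 kube-system kubernetes-dashboard
```

The resulting URL can be opened in a browser or passed to curl from any host that can reach the apiServer, even though the Service itself only has a Cluster IP.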